Welcome back! Let's dive into Chapter 50 of this series.

Graph retrieval-augmented generation (GraphRAG) is a powerful RAG paradigm, which I've covered extensively before (AI Exploration Journey: Graph RAG).

By using graphs to capture the relationships between specific concepts, GraphRAG helps organize knowledge in a more structured way — making retrieval more coherent and reasoning more accurate.

The idea sounds compelling, but here's something worth thinking about: is GraphRAG really effective, and in which scenarios do graph structures provide measurable benefits for RAG systems?

To answer this question, "When to use Graphs in RAG" introduces GraphRAG-Bench, a comprehensive benchmark designed to evaluate GraphRAG models on deep reasoning.

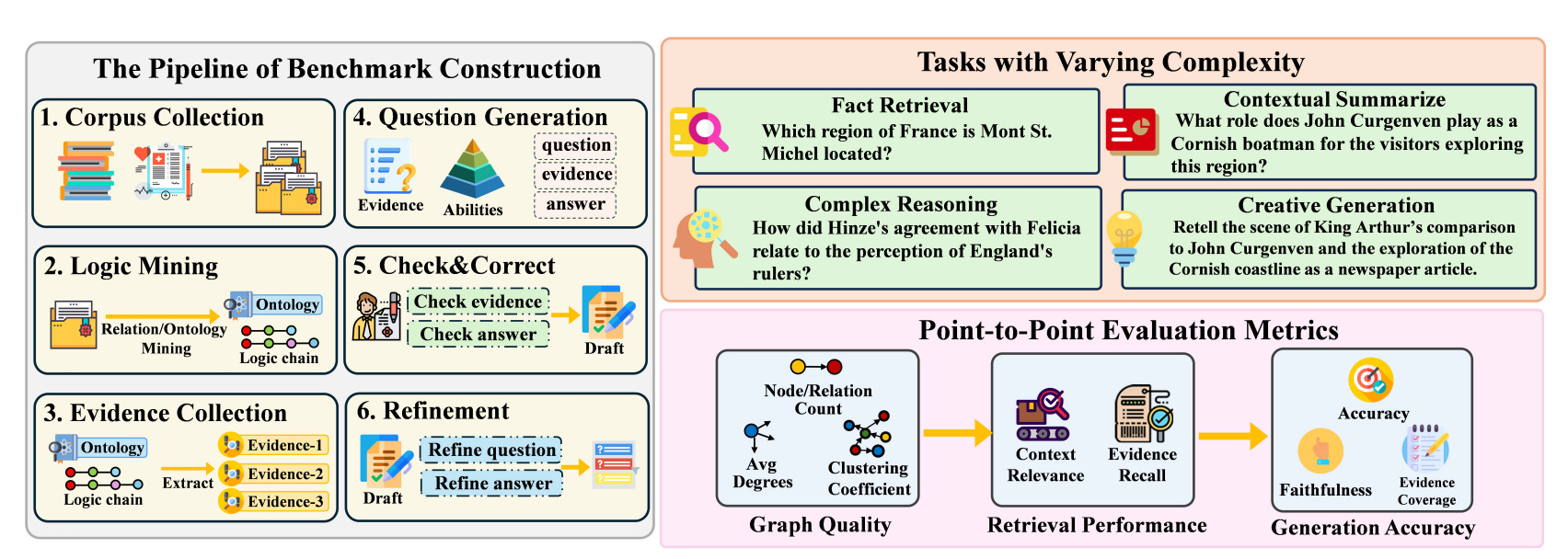

Figure 2 breaks down GraphRAG-Bench into three core components:

The benchmark construction pipeline (left).

Task classification by difficulty (top right).

A multi-stage evaluation framework that covers indexing, retrieval, and generation (bottom right).

Key Findings

We can skip showing the experimental results tables. Let's jump straight into the important findings.

How does GraphRAG Perform Compared to RAG on the Benchmark? (Generation Accuracy)

Basic RAG matches GraphRAG in simple fact retrieval tasks: When complex reasoning across connected concepts isn't needed, basic RAG is comparable to or better than GraphRAG. In these simpler tasks, the extra graph processing may add redundant or noisy information, degrading answer quality. I reached a similar takeaway in an earlier article (Can GraphRAG Replace Traditional RAG?).

GraphRAG excels in complex tasks: GraphRAG stands out in tasks like complex reasoning, contextual summarization, and creative generation — all of which rely on navigating relationships between multiple concepts, something graphs are inherently well-suited for.

GraphRAG offers greater factual reliability in creative tasks: RAPTOR (Advanced RAG 12: Enhancing Global Understanding) ranks highest in faithfulness, scoring 70.9% on the novel dataset. RAG, on the other hand, covers more evidence, at 40.0%. That gap likely comes down to how GraphRAG retrieves information: its more fragmented retrieval can make it harder to generate broad, comprehensive answers. In the end, it's a classic trade-off. GraphRAG excels at precision, but that focus comes at the cost of wider coverage.

Does GraphRAG Retrieve Higher-Quality and Less Redundant Information in the Retrieval Process? (Retrieval Performance)

RAG does a great job retrieving discrete facts for simple questions that don't require much reasoning, hitting 83.2% Context Recall on the novel dataset—outperforming even HippoRAG2's (AI Innovations and Insights 31: olmOCR , HippoRAG 2, and RAG Web UI) top score on Context Relevance. We see the same pattern on the medical dataset: for simpler Level 1 questions, the right answer is usually found in just one passage. In these cases, GraphRAG's use of a knowledge graph tends to bring in extra, logically related info that isn't really needed.

GraphRAG really starts to shine when the questions get more complex. On Level 2 and 3 questions in the novel dataset, HippoRAG (Advanced RAG 12: Enhancing Global Understanding) delivers impressive Evidence Recall (87.9–90.9%), while HippoRAG2 (AI Innovations and Insights 31: olmOCR , HippoRAG 2, and RAG Web UI) edges ahead on Context Relevance (85.8–87.8%). We see the same trend in the medical dataset, where GraphRAG's strength becomes even clearer: it's especially good at linking information scattered across different parts of the text, which is key for multi-hop reasoning and comprehensive summarization.

RAG and GraphRAG each have their strengths when it comes to creative tasks that call for broad knowledge synthesis. Global-GraphRAG stands out with higher Evidence Recall (83.1%), while RAG keeps the edge in Context Relevance (78.8%). GraphRAG tends to surface more relevant information overall, but its retrieval method also brings in a bit more redundancy—whereas RAG stays tighter and more focused.
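To make the recall-versus-relevance tension concrete, here is a minimal sketch of the two retrieval metrics. It assumes a simplified, substring-based definition (the benchmark's own scoring may well differ), and the function names and toy passages are made up for illustration.

```python
def evidence_recall(retrieved_passages, gold_evidence):
    """Fraction of gold evidence items found in at least one retrieved passage.

    Assumption: an evidence item counts as found if it appears as a
    case-insensitive substring of the retrieved text.
    """
    if not gold_evidence:
        return 0.0
    context = " ".join(p.lower() for p in retrieved_passages)
    hits = sum(1 for e in gold_evidence if e.lower() in context)
    return hits / len(gold_evidence)


def context_relevance(retrieved_passages, gold_evidence):
    """Fraction of retrieved passages that contain at least one gold evidence item."""
    if not retrieved_passages:
        return 0.0
    relevant = sum(
        1
        for p in retrieved_passages
        if any(e.lower() in p.lower() for e in gold_evidence)
    )
    return relevant / len(retrieved_passages)


# Hypothetical toy data: two pieces of evidence are needed to answer the question.
gold = ["alice hired bob", "bob moved to paris"]

# A tight retrieval: one passage, fully relevant, but it misses half the evidence.
focused = ["Alice hired Bob in 1990."]

# A broad retrieval: finds all the evidence, but drags in unrelated passages.
broad = [
    "Alice hired Bob in 1990.",
    "Bob moved to Paris later.",
    "Paris is in France.",
    "Alice liked tea.",
]

for name, passages in [("focused", focused), ("broad", broad)]:
    print(
        name,
        "evidence recall:", evidence_recall(passages, gold),
        "context relevance:", context_relevance(passages, gold),
    )
```

The focused retrieval scores high on relevance and low on recall, and the broad one does the opposite, which mirrors the pattern described above.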

Does the constructed graph correctly organize the underlying knowledge? (Graph complexity)

Different versions of GraphRAG build index graphs with noticeably different structures. HippoRAG2 (AI Innovations and Insights 31: olmOCR , HippoRAG 2, and RAG Web UI) generates much denser graphs—both in terms of nodes and edges—compared to other frameworks. On the novel dataset, it averages 2,310 edges and 523 nodes; on the medical dataset, those numbers jump to 3,979 edges and 598 nodes. This extra density boosts how well information is connected and covered, which in turn helps HippoRAG2 retrieve and generate content more effectively.
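For a rough sense of what these numbers mean, the sketch below turns the reported node and edge counts into average degree and graph density. It assumes the index graph can be treated as a simple undirected graph, which may not match how HippoRAG2 actually stores it; `graph_stats` is a helper name I made up.

```python
def graph_stats(num_nodes, num_edges):
    """Return (average degree, density) for a simple undirected graph.

    Assumption: no self-loops or parallel edges, so each edge adds 2 to
    the total degree and the maximum edge count is n * (n - 1) / 2.
    """
    avg_degree = 2 * num_edges / num_nodes
    density = 2 * num_edges / (num_nodes * (num_nodes - 1))
    return avg_degree, density


# Average (nodes, edges) for HippoRAG2 as quoted in the text above.
reported = {"novel": (523, 2310), "medical": (598, 3979)}

for dataset, (nodes, edges) in reported.items():
    avg_degree, density = graph_stats(nodes, edges)
    print(f"{dataset}: avg degree {avg_degree:.1f}, density {density:.4f}")
```

Under that assumption, each node connects to roughly 8.8 neighbors on the novel dataset and roughly 13.3 on the medical dataset.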

Does GraphRAG introduce significant token overhead during retrieval? (Efficiency)

GraphRAG tends to produce much longer prompts than vanilla RAG, thanks to its extra steps in retrieval and graph-based aggregation. Global-GraphRAG (Advanced RAG 12: Enhancing Global Understanding), which includes a community summarization step, can push prompt length up to around 40,000 tokens. LightRAG (AI Innovations and Trends 03: LightRAG, Docling, DRIFT, and More) also generates fairly long prompts—about 10,000 tokens. In contrast, HippoRAG2 (AI Innovations and Insights 31: olmOCR , HippoRAG 2, and RAG Web UI) keeps things much more compact at around 1,000 tokens, making it notably more efficient. The takeaway? GraphRAG's structured pipeline comes with a real token cost.

As tasks get more complex and require more knowledge, GraphRAG's prompt length grows noticeably. For example, Global-GraphRAG's prompt size jumps from 7,800 to 40,000 tokens as task difficulty increases. But with that growth comes a downside—more tokens often mean more redundancy, which can hurt context relevance during retrieval. It's a classic trade-off: GraphRAG brings better structure and broader coverage, but it may also lead to inefficiencies due to prompt inflation, especially in complex tasks.
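A quick back-of-the-envelope calculation puts these prompt sizes in perspective. The token counts are the approximate figures quoted above; the per-token price is purely a placeholder assumption, not a real provider rate.

```python
# Approximate prompt lengths quoted in the text (tokens per query).
reported_prompt_tokens = {
    "Global-GraphRAG": 40_000,
    "LightRAG": 10_000,
    "HippoRAG2": 1_000,
}

# How many times longer each prompt is than the most compact one.
baseline = reported_prompt_tokens["HippoRAG2"]
relative = {name: tokens / baseline for name, tokens in reported_prompt_tokens.items()}

# Growth of Global-GraphRAG's prompt from the easiest to the hardest tasks.
growth = 40_000 / 7_800


def prompt_cost(tokens, usd_per_million_tokens):
    """Input cost of one prompt, given a (hypothetical) price per million tokens."""
    return tokens / 1_000_000 * usd_per_million_tokens


HYPOTHETICAL_PRICE = 1.0  # USD per million input tokens, placeholder only

for name, tokens in reported_prompt_tokens.items():
    cost = prompt_cost(tokens, HYPOTHETICAL_PRICE)
    print(f"{name}: {relative[name]:.0f}x baseline, ${cost:.3f} per query")

print(f"Global-GraphRAG prompt growth with difficulty: {growth:.1f}x")
```

Whatever the actual price, the ratios hold: Global-GraphRAG's prompts are about 40 times longer than HippoRAG2's, and they grow roughly fivefold as tasks get harder.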

Final Thoughts

In recent years, it has been unclear whether GraphRAG truly offers an advantage on complex reasoning and knowledge-heavy tasks, mainly because there hasn't been a systematic way to evaluate it. That's why a targeted benchmark that directly addresses this gap is a valuable contribution.

Overall, GraphRAG tends to underperform traditional RAG on simpler tasks, often due to redundant information cluttering the prompt. But when things get more complex—multi-hop reasoning, deeper context integration—it shows a clear advantage. That said, not all GraphRAG models are created equal. The density and quality of their graph structures vary significantly, impacting retrieval coverage, generation accuracy, and how well they adapt to different tasks.

While graph-based enhancements do improve information organization and reasoning, they also come with a heavy token cost. In the end, it’s a balancing act between performance and efficiency.
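One way to act on this balancing act is a simple router that picks a pipeline per query. The sketch below is my own illustration, not something the benchmark proposes: the task categories follow the ones discussed above, while `choose_pipeline`, the token budget, and the default prompt estimate are hypothetical.

```python
from enum import Enum


class TaskType(Enum):
    FACT_RETRIEVAL = "fact_retrieval"
    COMPLEX_REASONING = "complex_reasoning"
    CONTEXTUAL_SUMMARIZATION = "contextual_summarization"
    CREATIVE_GENERATION = "creative_generation"


def choose_pipeline(task, token_budget, graph_prompt_estimate=10_000):
    """Pick "rag" or "graphrag" for a query.

    Assumptions: the task type is already known (classifying it is out of
    scope here), and graph_prompt_estimate is a rough guess at the GraphRAG
    prompt length for the chosen framework.
    """
    # Simple fact lookups: basic RAG is comparable or better, and cheaper.
    if task is TaskType.FACT_RETRIEVAL:
        return "rag"
    # Complex tasks favor GraphRAG, but only if the prompt fits the budget.
    if graph_prompt_estimate > token_budget:
        return "rag"
    return "graphrag"


print(choose_pipeline(TaskType.FACT_RETRIEVAL, token_budget=32_000))
print(choose_pipeline(TaskType.COMPLEX_REASONING, token_budget=32_000))
print(choose_pipeline(TaskType.COMPLEX_REASONING, token_budget=4_000))
```

In practice the hard part is deciding the task type up front, so treat this as a starting point for experimentation rather than a recipe.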