Welcome back! Let’s dive into Chapter 49 of this series.

Currently, there’s still no effective way to turn long, complex, and multimodal academic papers into clear, visually engaging posters. Existing models struggle with layout, with visual design, and with deciding which information matters most.

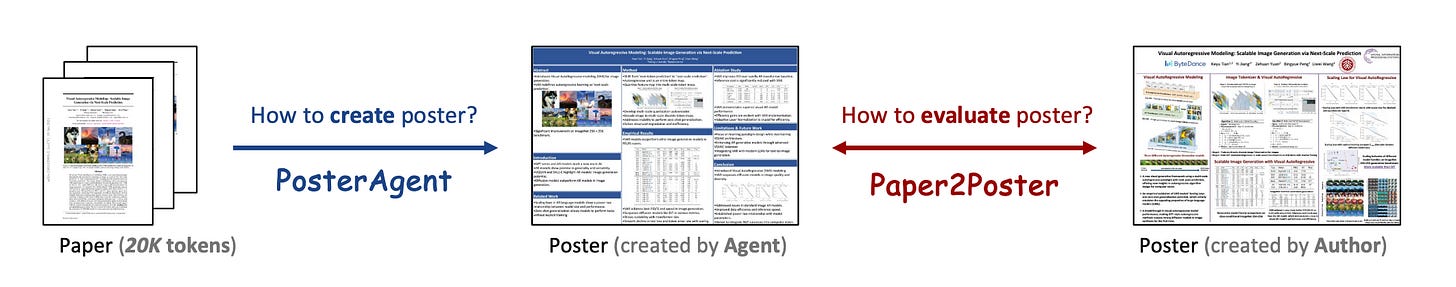

To tackle this, as shown in Figure 1, Paper2Poster proposes an automated framework (open-source code: https://github.com/Paper2Poster/Paper2Poster), along with a benchmark, to improve both the quality and efficiency of poster generation.

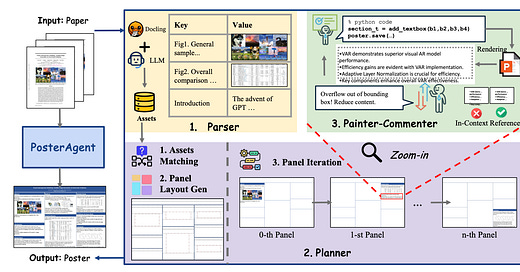

Figure 2 illustrates the core architecture of the PosterAgent system, which converts a full scientific paper into a structured academic poster through a three-stage pipeline: Parser, Planner, and Painter–Commenter.

Parser – Organizing the Raw Content: The pipeline starts by parsing the PDF with tools like marker (Demystifying PDF Parsing 02: Pipeline-Based Method) and Docling (AI Innovations and Trends 03: LightRAG, Docling, DRIFT, and More), which convert each page into structured Markdown. An LLM then summarizes each section, and figures and tables are extracted together with their captions. The result is a structured, JSON-like outline, organized into a clean, reusable asset library that captures both the textual and visual elements of the paper.
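To make the idea of an asset library concrete, here is a minimal sketch of what such a JSON-like outline could look like. The class and field names (`Section`, `Visual`, `AssetLibrary`, `summary`, `path`) and the sample values are my own assumptions for illustration, not the schema Paper2Poster actually uses.

```python
import json
from dataclasses import dataclass, field, asdict


@dataclass
class Section:
    title: str
    summary: str  # in the real pipeline this would be written by an LLM


@dataclass
class Visual:
    kind: str     # "figure" or "table"
    caption: str
    path: str     # hypothetical location of the extracted image


@dataclass
class AssetLibrary:
    sections: list[Section] = field(default_factory=list)
    visuals: list[Visual] = field(default_factory=list)

    def to_json(self) -> str:
        # asdict() recurses into the nested dataclasses
        return json.dumps(asdict(self), indent=2)


# Illustrative content only
library = AssetLibrary(
    sections=[Section("Introduction", "Posters are hard to generate automatically.")],
    visuals=[Visual("figure", "Figure 1: Overview of the framework.", "figures/fig1.png")],
)
print(library.to_json())
```

Keeping text and visuals in one serializable object is what makes the library reusable: the later stages can read it without touching the PDF again.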

Planner – Structuring the Layout: Next, the planner matches text sections with relevant visuals and arranges them into a binary-tree layout. The layout preserves logical reading order and maintains visual balance, with panels sized proportionally based on content length.
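The binary-tree idea can be sketched in a few lines. This is a simplified illustration, not the planner from the paper: the function `layout`, the `Box` type, the balancing rule, and the "cut along the longer side" heuristic are all assumptions on my part. It only shows how recursive splitting keeps reading order while giving each panel an area proportional to its content length (lengths are assumed to be positive).

```python
from dataclasses import dataclass


@dataclass
class Box:
    x: float
    y: float
    w: float
    h: float


def layout(panels, box):
    """Recursively split `box` among `panels`, a list of (name, content_length)
    pairs in reading order. Returns a dict mapping name -> Box."""
    if len(panels) == 1:
        return {panels[0][0]: box}
    total = sum(length for _, length in panels)
    # Pick the split point that best balances content between the two subtrees.
    best_k, best_gap, running = 1, float("inf"), 0
    for k in range(1, len(panels)):
        running += panels[k - 1][1]
        gap = abs(total - 2 * running)
        if gap < best_gap:
            best_k, best_gap = k, gap
    first, second = panels[:best_k], panels[best_k:]
    share = sum(length for _, length in first) / total
    if box.w >= box.h:  # wide box: vertical cut, earlier panels on the left
        a = Box(box.x, box.y, box.w * share, box.h)
        b = Box(box.x + box.w * share, box.y, box.w * (1 - share), box.h)
    else:               # tall box: horizontal cut, earlier panels on top
        a = Box(box.x, box.y, box.w, box.h * share)
        b = Box(box.x, box.y + box.h * share, box.w, box.h * (1 - share))
    return {**layout(first, a), **layout(second, b)}


# Illustrative section lengths on a 48 x 36 inch poster
panels = [("Intro", 100), ("Method", 300), ("Results", 200), ("Conclusion", 200)]
boxes = layout(panels, Box(0, 0, 48, 36))
for name, b in boxes.items():
    print(name, b)
```

Because every cut divides the parent box in proportion to the content on each side, each leaf panel ends up with an area proportional to its own content length.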

Painter–Commenter – Rendering with Feedback: The painter transforms each (section, figure) pair into draft panel images by summarizing content with an LLM and generating presentation code using python-pptx, which is executed to render the visuals. Then, a vision-language model (VLM) acts as a commenter to review the output, checking for issues like text overflow or poor spacing. With targeted visual feedback, the layout is refined until it meets quality standards.
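The render-then-review loop is easy to picture in code. In the sketch below, both halves are deliberately replaced by stand-ins: `paint` returns a plain dictionary instead of generating and executing python-pptx code, and `comment` is a crude character-capacity estimate instead of a VLM looking at a rendered image. The glyph-width and line-height constants, the function names, and the "shrink by 2 pt" fix are all assumptions for illustration.

```python
def paint(text, font_pt):
    """Stand-in for the painter: returns a panel spec rather than rendered output."""
    return {"text": text, "font_pt": font_pt}


def comment(panel, width_in, height_in):
    """Stand-in for the VLM commenter: a rough capacity estimate, not a visual check."""
    char_w = panel["font_pt"] * 0.5 / 72   # assumed average glyph width, in inches
    line_h = panel["font_pt"] * 1.2 / 72   # assumed line height, in inches
    chars_per_line = max(1, int(width_in / char_w))
    lines_needed = -(-len(panel["text"]) // chars_per_line)  # ceiling division
    lines_available = int(height_in / line_h)
    return "overflow" if lines_needed > lines_available else "ok"


def refine(text, width_in, height_in, font_pt=32, min_pt=10, max_rounds=10):
    """Repaint the panel until the commenter is satisfied or a limit is hit."""
    panel = paint(text, font_pt)
    for _ in range(max_rounds):
        if comment(panel, width_in, height_in) == "ok" or panel["font_pt"] <= min_pt:
            break
        # Apply the feedback: here the only available fix is shrinking the text.
        panel = paint(text, panel["font_pt"] - 2)
    return panel


panel = refine("x" * 200, width_in=4.0, height_in=2.0)
print(panel["font_pt"], comment(panel, 4.0, 2.0))
```

The point is the control flow: generate, inspect, apply targeted feedback, and stop once the panel passes or a round limit is reached. A real commenter could also flag problems that shrinking text cannot fix, such as poor spacing.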

So how do we evaluate the quality of a generated poster?

As shown in Figure 3, Paper2Poster introduces four key metrics:

Visual Quality: It looks at how visually appealing and well-structured the poster is. Two sub-metrics are used:

Visual Similarity: Measures how close the generated poster is to a human-designed one using CLIP embeddings.

Figure Relevance: Evaluates whether each image semantically matches its related text section, again using CLIP. If no image is present, the score is zero.
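Both sub-metrics come down to comparing embedding vectors. Here is a minimal sketch, assuming the CLIP embeddings have already been computed elsewhere and are passed in as plain lists of floats. Cosine similarity is the usual way to compare CLIP embeddings, but the function names and the toy vectors are mine.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def figure_relevance(text_emb, image_emb):
    """Score one section against its image; a section with no image scores zero."""
    if image_emb is None:
        return 0.0
    return cosine(text_emb, image_emb)


# Toy 3-dimensional vectors; real CLIP embeddings have hundreds of dimensions
print(figure_relevance([0.2, 0.9, 0.1], [0.25, 0.8, 0.0]))
print(figure_relevance([0.2, 0.9, 0.1], None))
```

Visual Similarity would use the same `cosine` function, applied to the image embeddings of the generated poster and the human-designed one.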

Textual Coherence: It uses the LLaMA-2-7B model to calculate perplexity (PPL). A lower PPL means the poster language reads more naturally and smoothly.
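For reference, perplexity is the exponential of the average negative log-likelihood per token. The sketch below assumes you already have per-token natural-log probabilities from a language model (LLaMA-2-7B in the paper's setup). The function name and the toy values are mine.

```python
import math


def perplexity(token_logprobs):
    """PPL = exp(-mean(log p(token_i | preceding tokens)))."""
    return math.exp(-sum(token_logprobs) / len(token_logprobs))


# A model that assigns probability 1/4 to every token is as uncertain
# as a uniform choice among four options.
uniform = [math.log(0.25)] * 3
confident = [math.log(0.9)] * 3
print(perplexity(uniform), perplexity(confident))
```

The more probability the model assigns to the text it actually sees, the closer the perplexity gets to 1, which is why lower values indicate more natural-sounding language.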

Holistic Assessment: Here, a vision-language model (like GPT-4o) acts as an automated judge, scoring the poster across six dimensions to provide an overall quality rating.

PaperQuiz: Arguably the most creative metric. Imagine a reader trying to answer questions based on just the poster. The system generates 100 multiple-choice questions (50 on basic understanding, 50 on deeper reasoning) directly from the original paper. Then, various VLMs—ranging from casual to expert level—attempt to answer using only the poster. Final scores are weighted by both accuracy and text conciseness. PaperQuiz directly tests whether the poster truly captures the core ideas of the paper.
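To show how accuracy and conciseness might combine, here is a small sketch. The accuracy part is straightforward. The length penalty is purely hypothetical: the `word_budget` parameter and the proportional decay are placeholders I made up to illustrate the idea, not the weighting used in Paper2Poster.

```python
def quiz_accuracy(answers, key):
    """Fraction of questions in `key` that the reader answered correctly."""
    correct = sum(1 for q, a in key.items() if answers.get(q) == a)
    return correct / len(key)


def penalized_score(accuracy, poster_words, word_budget=500):
    """Hypothetical conciseness penalty: no penalty within the budget,
    proportional decay beyond it. Not the formula from the paper."""
    if poster_words <= word_budget:
        return accuracy
    return accuracy * word_budget / poster_words


# Illustrative answer key and one reader's answers (q4 left unanswered)
key = {"q1": "A", "q2": "C", "q3": "B", "q4": "D"}
answers = {"q1": "A", "q2": "C", "q3": "D"}

acc = quiz_accuracy(answers, key)
print(acc, penalized_score(acc, poster_words=1000))
```

Whatever the exact formula, the design intent is the same: a poster should not be able to score well simply by pasting in the whole paper.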

Final Thoughts

Automatically generating academic posters is a highly practical but long-overlooked use case—especially in the context of research conferences, where posters are everywhere but still crafted mostly by hand.

Compared to tasks like auto-generating slide decks, poster generation is much more challenging: it requires tighter information compression, stricter spatial constraints, and more complex visual layouts. That’s why previous work has largely remained exploratory.

Paper2Poster takes a meaningful step forward by offering a well-defined task setup, a benchmark dataset, and a clear set of evaluation metrics—laying solid groundwork for future research in this space.

Document parsing is at the heart of many applications: building knowledge graphs, powering RAG systems, translating PDFs (Translating PDFs Without Breaking Layout: Is It Really Possible?), and now generating posters.

In my view, a solid document parsing tool doesn’t just make things easier—it can define the ceiling of what your intelligent system is capable of!