As RAG systems become increasingly modular, understanding how different components and configurations impact performance is more important than ever.

We've discussed the best practices of RAG in the previous article (The Best Practices of RAG).

In this post, we introduce "Enhancing Retrieval-Augmented Generation: A Study of Best Practices", which explores key factors affecting system performance, including model size, prompt design, and knowledge base size, among others.

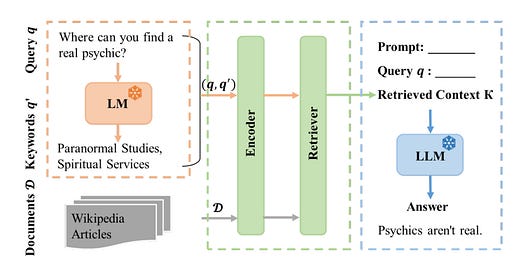

To study these factors, the authors design a RAG system and test it under different configurations, as shown in Figure 1.

The system consists of three main components: a query expansion module, a retrieval module, and a text generation module. Given a query q, an LLM expands it into relevant keywords q'. The retriever then selects the relevant contexts K by comparing the embeddings of the knowledge-base documents D with the embeddings of (q, q'). Finally, the generative LLM takes the original query q, a prompt, and the retrieved contexts K and produces the final response.
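The three-stage flow above can be sketched in a few lines of Python. This is only an illustrative sketch, not the paper's implementation: the bag-of-words `embed` function stands in for a real embedding model, the two LLMs are passed in as plain callables, and every function name here (`expand_query`, `retrieve`, `build_prompt`, `rag_answer`) is hypothetical.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy bag-of-words 'embedding' (assumption: a real system uses a sentence encoder)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def expand_query(q, expander_llm):
    """Query expansion: ask an LLM for keywords q' related to q."""
    return expander_llm(f"List keywords related to: {q}")


def retrieve(q, q_prime, docs, top_k=2):
    """Retrieval: score each document in D against (q, q') and keep the top_k as K."""
    query_vec = embed(q + " " + q_prime)
    ranked = sorted(docs, key=lambda d: cosine(query_vec, embed(d)), reverse=True)
    return ranked[:top_k]


def build_prompt(q, contexts, instruction="Answer the question using the context."):
    """Combine the instruction, the retrieved contexts K, and the original query q."""
    context_block = "\n".join(f"- {c}" for c in contexts)
    return f"{instruction}\nContext:\n{context_block}\nQuestion: {q}\nAnswer:"


def rag_answer(q, docs, expander_llm, generator_llm, top_k=2):
    """Full pipeline: expand the query, retrieve contexts, then generate."""
    q_prime = expand_query(q, expander_llm)
    contexts = retrieve(q, q_prime, docs, top_k)
    return generator_llm(build_prompt(q, contexts))
```

Passing the LLMs in as callables keeps the sketch testable with stubs, and makes it easy to swap the expander, retriever, or prompt independently, which is the kind of per-component variation the study explores.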

Through systematic experiments and analysis, the paper identifies which of these elements matter most and proposes new configurations to improve performance.

Let’s go straight to the key findings:

Contrastive In-Context Learning RAG outperforms all other RAG variants, especially on the MMLU dataset, where deeper domain knowledge is required. In other words, incorporating both correct and incorrect examples during retrieval helps the model distinguish between factual and misleading information during generation, improving accuracy and factual consistency.
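As a rough illustration of the contrastive idea, the sketch below builds a prompt in which each retrieved demonstration carries both a correct and an incorrect answer. The `Demo` type, the `contrastive_prompt` function, and the prompt wording are all assumptions made for this example; the paper's actual template may differ.

```python
from dataclasses import dataclass


@dataclass
class Demo:
    """One retrieved demonstration with a correct and an incorrect answer (hypothetical type)."""
    question: str
    correct: str
    incorrect: str


def contrastive_prompt(query, demos):
    """Build a prompt that shows the model both good and bad answers before the real query."""
    lines = ["Use the examples to tell correct answers from incorrect ones.", ""]
    for d in demos:
        lines += [
            f"Question: {d.question}",
            f"Correct answer: {d.correct}",
            f"Incorrect answer: {d.incorrect}",
            "",
        ]
    # The prompt ends with an open "Correct answer:" slot for the model to fill in.
    lines += [f"Question: {query}", "Correct answer:"]
    return "\n".join(lines)
```

A quick usage example: `contrastive_prompt("What is the boiling point of water at sea level?", [Demo("What is the freezing point of water?", "0 °C", "32 °C")])` yields one labeled demonstration followed by the open question.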

Focus Mode RAG ranks second and significantly outperforms other baseline models, highlighting the importance of prompting models with high-precision yet concise retrieved documents. This means that selecting key sentences instead of retrieving full documents can reduce irrelevant information, leading to more relevant and accurate generated content.
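A minimal sketch of sentence-level selection follows. It assumes a simple regex sentence splitter and Jaccard token overlap as the relevance score; both are stand-ins chosen for this example (a real system would more likely score sentences with embeddings), and the function names are hypothetical.

```python
import re


def tokens(text):
    """Lowercased word set used for a crude overlap score."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def split_sentences(doc):
    """Naive splitter: break after '.', '!' or '?' followed by whitespace."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", doc) if s.strip()]


def focus_sentences(query, docs, top_n=2):
    """Keep only the top_n sentences across all retrieved docs, ranked by Jaccard overlap."""
    q = tokens(query)
    scored = []
    for doc in docs:
        for sent in split_sentences(doc):
            s = tokens(sent)
            union = q | s
            score = len(q & s) / len(union) if union else 0.0
            scored.append((score, sent))
    # Python's sort is stable, so ties keep their original document order.
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [sent for _, sent in scored[:top_n]]
```

The selected sentences, rather than the full documents, would then be placed in the prompt, which is what keeps the context short and on-topic.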

The size of the RAG knowledge base is not a critical factor; what matters more is the quality and relevance of the retrieved documents.

Factors like query expansion, multilingual representations, chunk size variations, and retrieval stride did not lead to meaningful improvements in performance metrics.

In terms of factuality performance, Contrastive In-Context Learning RAG and Focus Mode RAG remain the top models. However, the Query Expansion approach ranked second on the TruthfulQA dataset.

Even within a RAG framework, prompt formulation remains crucial for overall performance.

Increasing model size does improve generation quality, but the gains diminish as the number of parameters grows. Experiments show that while the larger model (45B vs. Mistral-7B) performs better on the TruthfulQA dataset, the improvement on MMLU is limited. Moreover, increasing model size is less effective than optimizing retrieval strategies, such as Contrastive In-Context Learning and Focus Mode.

Frequent updates to the retrieval context (Stride 1) can disrupt context stability and reduce coherence, while a larger stride (Stride 5) keeps retrieval quality stable and generally yields more coherent, relevant responses.
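To make the stride parameter concrete, here is a toy generation loop that refreshes the retrieved context every `stride` steps. It is a sketch under stated assumptions: the stride is counted in generated tokens, and `retrieve` and `next_token` are hypothetical callables standing in for the retriever and the LLM's decoding step.

```python
def generate_with_stride(query, retrieve, next_token, stride=5, max_tokens=20):
    """Generate up to max_tokens tokens, re-running retrieval every `stride` steps.

    retrieve(text) -> context, and next_token(query, context, generated) -> str or None,
    are caller-supplied stand-ins (assumptions for this sketch).
    """
    generated = []
    context = retrieve(query)  # initial retrieval before generation starts
    retrieval_calls = 1
    for step in range(max_tokens):
        if step > 0 and step % stride == 0:
            # Refresh the context using the query plus everything generated so far.
            context = retrieve(query + " " + " ".join(generated))
            retrieval_calls += 1
        token = next_token(query, context, generated)
        if token is None:  # the model signals end of generation
            break
        generated.append(token)
    return generated, retrieval_calls
```

With `max_tokens=10`, a stride of 1 triggers retrieval at every step (10 calls in total), whereas a stride of 5 triggers it only twice, so the context the model conditions on changes far less often.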

Thoughts and Insights

Overall, optimizing RAG isn’t just about using a larger knowledge base or a more powerful model. It depends more on refining core components like retrieval strategies, prompt design, and information filtering mechanisms.

The impact of query expansion on improving generation quality is quite limited, which honestly surprised me. This may be because highly relevant documents are often already retrievable with the original query, and expanding it can introduce unnecessary noise.

One important problem not covered in this study is latency. A key challenge in real-world applications is how to run RAG efficiently in high-throughput, low-latency settings.

In addition, the study mainly compares 7B and 45B models, but widely used models today also come in sizes such as 13B, 32B, and 72B. Do models of these sizes depend on RAG to different degrees?