Since January 2025, the DeepSeek-R1 model has been in the spotlight.

This week, a new reasoning LLM called s1 emerged, it is said to use only 1k data and match the performance of top-tier models like OpenAI's o1 and DeepSeek-R1, costing only $50.

Curious about its capabilities, I took a deep dive into the s1 research paper. Here’s what I found, in a simple Q&A format—along with some thoughts and insights.

Does s1 really match or even surpass OpenAI o1 and DeepSeek-R1?

Not quite.

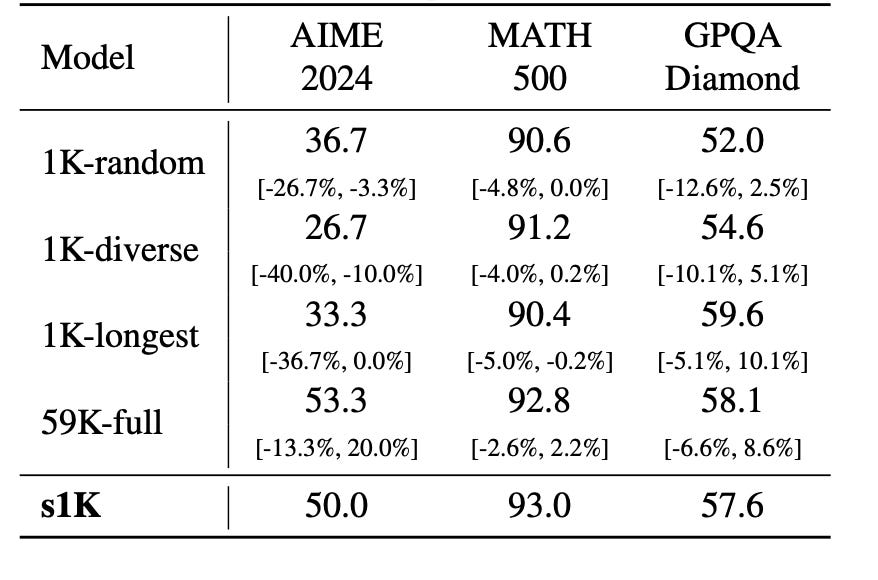

As shown in Figure 1, s1 doesn’t surpass o1 or even o1-mini.

As for DeepSeek-R1, s1 falls short—especially compared to DeepSeek-R1's 32B model distilled from 800K data, where the gap is significant.

So, it’s not exactly a fair comparison to say s1 "rivals" these top models.

So where does s1 perform well?

As shown in Figure 1, s1 overall performs better than o1-preview and Sky-T1. It also surpasses QWQ-32B on AIME and MATH, and outperforms Bespoke-32B on GPQA.

Moreover, the budget forcing method proves to be highly effective, boosting s1 performance on AIME by an impressive 6.7%.

Did s1 Really Achieve This With Just 1K Data?

Not exactly.

The 1K dataset was carefully selected from a larger 59K dataset to save training time.

In fact, training on the full 59K examples—which includes everything in the 1K subset—doesn’t provide significant improvements over using just the selected 1K.

How to Construct the 59K Dataset

Three guiding principles followed:

Quality: Ensured high-quality data by removing formatting errors and irrelevant content.

Difficulty: Focused on more challenging problems to strengthen the model’s reasoning ability.

Diversity: Included questions from various fields like math, physics, and biology to improve generalization.

The process started with collecting 59,029 reasoning problems from 16 different sources, including math competition questions, science problems, and so on...

To sharpen reasoning skills, two new datasets were introduced: s1-prob, featuring PhD-level probability exam questions from Stanford, and s1-teasers, a collection of challenging logic puzzles.

Each problem was enriched with reasoning traces and response generated using the Google Gemini Flash Thinking API, forming a (question, generated reasoning trace, and generated solution) triplet. Additionally, duplicates were removed.

How to Construct the 1k Dataset?

The process started with cleaning the 59K dataset, removing formatting errors and failed API generations, leaving 51,581 high-quality samples.

To filter for difficulty, the dataset was evaluated using Qwen2.5-7B and Qwen2.5-32B. Any problem that both models could solve easily was discarded, reducing the set to 24,496 harder questions.

Next, Claude 3.5 Sonnet categorized the questions to ensure coverage across 50 subjects, including math, physics, and computer science.

Within each subject, questions were randomly selected, with a preference for those requiring longer reasoning chains to enhance complexity and diversity. This process continued until 1,000 representative questions were chosen, forming the final s1K dataset.

The strength of this dataset lies in its small but high-quality design—sourced from diverse datasets, rigorously filtered, and optimized for efficient training of strong reasoning models.

How to Train s1?

Here is the training process of s1:

Choosing a Base Model: Selected Qwen2.5-32B-Instruct as the foundation, a model already equipped with solid reasoning capabilities.

Preparing Training Data: The previous s1K dataset was used for fine-tuning.

Supervised Fine-Tuning (SFT): The model was trained on s1K dataset, learning to follow structured reasoning paths step by step.

Training Setup: Using PyTorch FSDP for distributed training, the process ran on 16 NVIDIA H100 GPUs and finished in just 26 minutes.

Does s1 Really Cost Just $50?

If we’re only counting the GPU time used for the final fine-tuning step, then yes—or even less. s1-32B only required 7 H100 GPU hours.

This $50 figure doesn’t include the cost of:

Data collection

Testing and evaluation

Human labor

Pre-training Qwen2.5-32B

So while the fine-tuning s1 is incredibly cheap, the full cost of developing s1 is much higher.

What is Budget Forcing?

At reasoning stage, s1 introduces a budget forcing method to control reasoning time and computation. It is a simple decoding-time intervention controls the number of thinking tokens:

To enforce a maximum, the end-of-thinking token and “Final Answer:” are appended to force early exit and prompt the model to provide its best answer.

To enforce a minimum, the end-of-thinking token is suppressed, and “Wait” may be added to the reasoning path, encouraging further reflection.

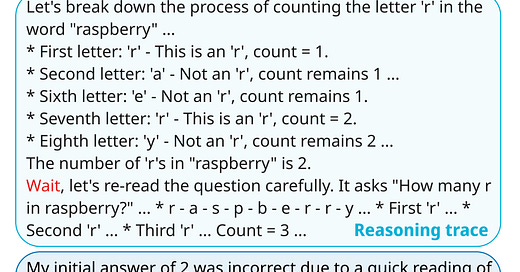

Take the question: "How many 'r's are in 'raspberry'?"

Initial Reasoning: The model counts the ‘r’s one by one: 1st 'r' → Count = 1, …, 7th 'r' → Count = 2.

It stops at the 8th step and gives a wrong answer: 2 (The correct answer is 3).

Normally, the model would stop, assuming its first attempt was correct. But here, budget forcing intervenes:

The system blocks the stop signal and adds "Wait" at the end of the reasoning path, forcing the model to rethink.

The model re-examines the word, realizes it missed an ‘r’, and corrects itself to 3.

By forcing a second look, it self-corrects and improves accuracy. This reminds me of Marco-o1 was introduced earlier. A reflection mechanism was introduced by adding the phrase "Wait! Maybe I made some mistakes! I need to rethink from scratch." at the end of each thought process.

Commentary

After reading the entire paper, I found that s1 is somewhat similar to the cold-start phase of the DeepSeek-R1. DeepSeek-R1 uses much longer reasoning chains (thousands of steps), and after the base model gains basic reasoning capabilities, it undergoes reinforcement learning.

You could also view s1 as essentially reproducing the process of distilling the DeepSeek-R1 671B model down to a 32B version.

In my view, the key contribution of s1 lies in the open-sourcing of its training data. Perhaps "small SFT data + test-time scaling" could emerge as a new paradigm for enhancing LLM reasoning abilities.

In addition, I have a few concerns.

As we discussed earlier, this reflection approach is still too simplistic and lacks finer control. Additionally, it hasn't been fully tested to determine whether the regenerated paths are diverse enough or if they can effectively avoid repeating past mistakes.

There’s no testing related to programming, and the benchmarks don't cover any programming tasks.

It doesn’t fully address which method is more effective between supervised fine-tuning (SFT) and budgeted forcing (BF). Figure 1 shows the results of SFT without BF (s1 w/o BF), but it doesn't include results for Qwen2.5-32B without SFT, where thinking is extended directly through BF.

The following report provides insights into s1 and DeepSeek-R1 that you may find valuable:

From Brute Force to Brain Power: How Stanford's s1 Surpasses DeepSeek-R1

https://papers.ssrn.com/sol3/papers.cfm?abstract_id=5130864