I still remember writing about key advances in multimodal RAG (MultiModal RAG Unveiled: A Deep Dive into Cutting-Edge Advancements).

Recently, I came across a survey on multimodal RAG. Here’s what I found.

Figure 1 lays out the multimodal RAG pipeline end to end.

The process starts with query preprocessing: user queries are refined and then mapped into the same shared embedding space as the contents of a multimodal database. Retrieval strategies such as modality-specific search, similarity-based retrieval, and re-ranking identify the most relevant documents. Fusion techniques such as score fusion and attention-based methods then align and integrate the information retrieved from different modalities.
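To make the shared-embedding idea concrete, here is a minimal sketch of similarity-based retrieval. It assumes every item in the database (text, image, or video) has already been embedded by a single shared encoder, such as a CLIP-style model. The toy three-dimensional vectors and item IDs are hypothetical.

```python
import math

def normalize(vec):
    """Scale a vector to unit length so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm > 0 else list(vec)

def cosine_top_k(query_emb, database, k=2):
    """Rank items of any modality by cosine similarity to the query.

    `database` maps item IDs to (modality, embedding) pairs. All embeddings are
    assumed to live in one shared space produced by the same encoder.
    """
    q = normalize(query_emb)
    scored = []
    for item_id, (modality, emb) in database.items():
        score = sum(a * b for a, b in zip(q, normalize(emb)))
        scored.append((score, item_id, modality))
    scored.sort(reverse=True)  # highest similarity first
    return scored[:k]

# Hypothetical toy database: one image, one text snippet, one video clip.
db = {
    "img_1": ("image", [0.9, 0.1, 0.0]),
    "txt_1": ("text", [0.8, 0.2, 0.1]),
    "vid_1": ("video", [0.0, 0.1, 0.9]),
}
for score, item_id, modality in cosine_top_k([1.0, 0.0, 0.0], db, k=2):
    print(f"{item_id} ({modality}): {score:.3f}")
```

Because both sides are normalized, an image and a text snippet end up ranked on the same scale, which is exactly what the shared embedding space is for.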

Augmentation techniques, including iterative retrieval with feedback, further refine the selected documents before they are fed into the multimodal language model.
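Iterative retrieval with feedback is essentially a loop: retrieve, judge whether the evidence is sufficient, and refine the query if it is not. The sketch below assumes three hypothetical callables (`retrieve`, `is_sufficient`, `refine`). In a real system these might be a vector search, a critic model, and an LLM-based query rewriter. The toy corpus is made up.

```python
def iterative_retrieve(query, retrieve, is_sufficient, refine, max_rounds=3):
    """Retrieve, check the accumulated evidence, and refine the query until it suffices.

    `retrieve`, `is_sufficient`, and `refine` are hypothetical stand-ins for a
    retriever, a feedback signal, and a query rewriter.
    """
    evidence = []
    for round_idx in range(max_rounds):
        for doc in retrieve(query):
            if doc not in evidence:  # keep only new documents, in retrieval order
                evidence.append(doc)
        if is_sufficient(query, evidence) or round_idx == max_rounds - 1:
            break
        query = refine(query, evidence)  # feedback step: rewrite the query
    return query, evidence

# Hypothetical toy setup: the first query misses the image evidence.
corpus = {"pneumonia": ["report_12"], "pneumonia chest x-ray": ["report_12", "xray_7"]}
final_query, evidence = iterative_retrieve(
    "pneumonia",
    retrieve=lambda q: corpus.get(q, []),
    is_sufficient=lambda q, ev: any(d.startswith("xray") for d in ev),
    refine=lambda q, ev: q + " chest x-ray",
)
print(final_query, evidence)
```

Capping the loop with `max_rounds` keeps latency bounded even when the feedback signal never reports success.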

During generation, advanced methods like Chain-of-Thought reasoning and source attribution enhance the quality and reliability of the output.
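Source attribution is often handled at the prompt level: number the retrieved items, ask the model to cite them, then check the citations in its answer. The following is a minimal sketch under that assumption. The prompt wording and the `build_attributed_prompt` / `cited_sources` helpers are hypothetical. The optional step-by-step instruction is a simple stand-in for Chain-of-Thought prompting, not a specific method from the survey.

```python
import re

def build_attributed_prompt(question, sources, chain_of_thought=True):
    """Number each retrieved source so the model can cite it as [n].

    `sources` is a list of (modality, content) pairs. For images or video,
    `content` is assumed to be a caption or other text stand-in for the visual input.
    """
    lines = ["Answer the question using only the sources below.",
             "Cite every claim with its source number in brackets, e.g. [1]."]
    if chain_of_thought:
        lines.append("Reason step by step before giving the final answer.")
    lines.append("")
    for i, (modality, content) in enumerate(sources, start=1):
        lines.append(f"[{i}] ({modality}) {content}")
    lines += ["", f"Question: {question}", "Answer:"]
    return "\n".join(lines)

def cited_sources(answer, num_sources):
    """Return the valid source numbers cited in a generated answer."""
    cited = {int(n) for n in re.findall(r"\[(\d+)\]", answer)}
    return sorted(n for n in cited if 1 <= n <= num_sources)

prompt = build_attributed_prompt(
    "What does the X-ray show?",
    [("image", "Chest X-ray, left lower lobe opacity"),
     ("text", "Report: consolidation consistent with pneumonia")],
)
print(prompt)
```

Checking the cited numbers against the number of sources makes it easy to flag answers that cite nothing or cite sources that were never retrieved.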

Optimization relies on a combination of alignment loss and generation loss to fine-tune both retrieval and generation. Additionally, noise management techniques improve training stability and overall robustness.
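One common way to write such a joint objective is as a weighted sum of a token-level generation loss and an InfoNCE-style contrastive alignment loss. Both the weighted-sum form and the specific contrastive loss are my assumptions for illustration; individual methods covered by the survey may differ.

```latex
\begin{aligned}
\mathcal{L} &= \mathcal{L}_{\text{gen}} + \lambda\,\mathcal{L}_{\text{align}} \\
\mathcal{L}_{\text{gen}} &= -\sum_{t=1}^{T} \log p_\theta\!\left(y_t \mid y_{<t},\, q,\, \mathcal{D}_q\right) \\
\mathcal{L}_{\text{align}} &= -\frac{1}{N}\sum_{i=1}^{N} \log \frac{\exp\!\left(\operatorname{sim}(q_i, d_i)/\tau\right)}{\sum_{j=1}^{N} \exp\!\left(\operatorname{sim}(q_i, d_j)/\tau\right)}
\end{aligned}
```

Here y_t are the target output tokens, D_q is the set of documents retrieved for query q, and (q_i, d_i) are matching query/document embedding pairs in a batch of size N, with the other d_j acting as negatives. sim is a similarity function such as cosine similarity, τ is a temperature, and λ balances retrieval alignment against generation quality.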

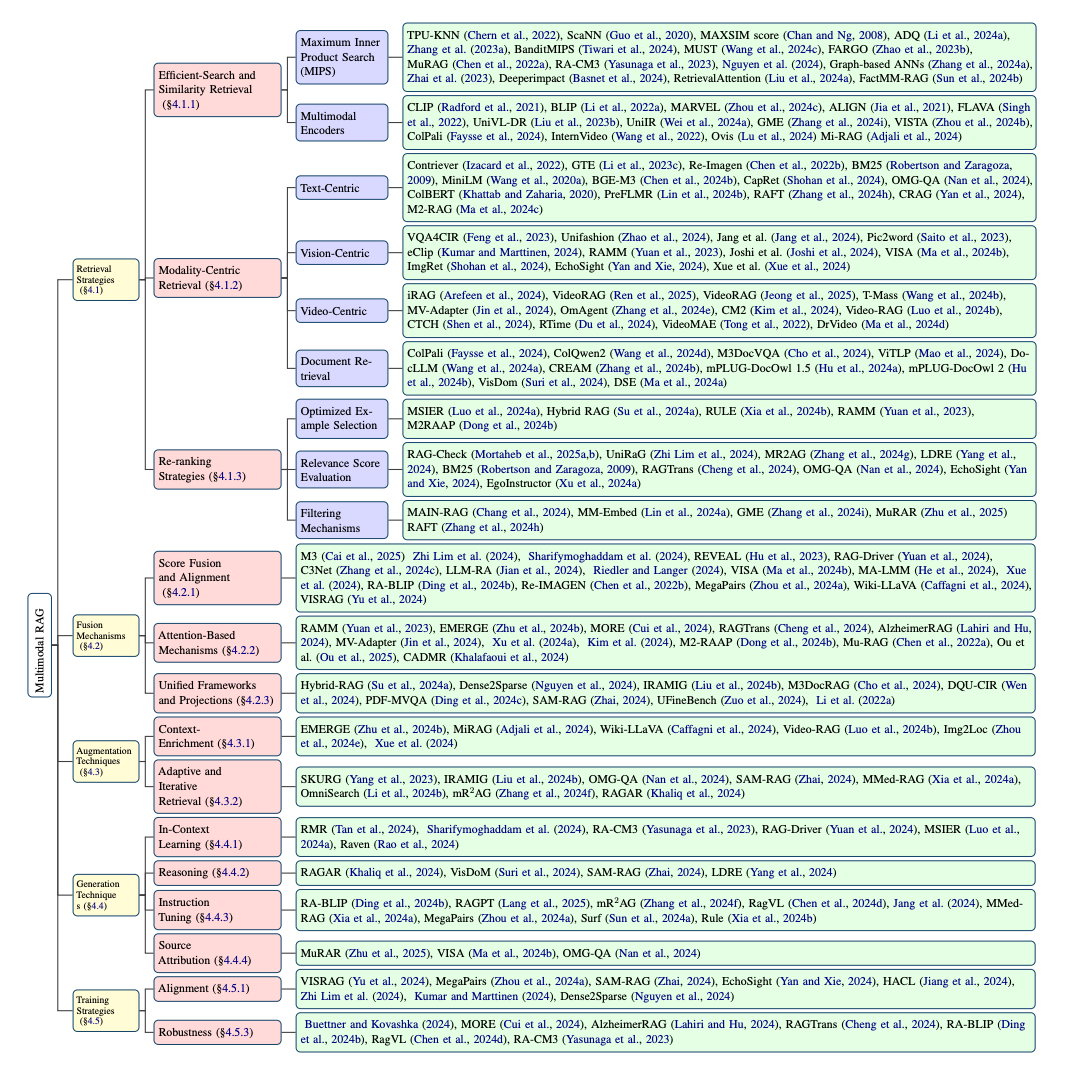

Figure 2 provides an overview of recent advances in multimodal RAG systems, organized into several key areas:

Retrieval Strategies focus on efficient search and similarity-based methods, including maximum inner product search (MIPS) variants and multimodal encoders. Modality-specific approaches target text, vision, and video retrieval, while re-ranking techniques refine results through optimized selection, relevance scoring, and filtering.

Fusion Mechanisms integrate multimodal information through score fusion and alignment, attention-based techniques, and unified frameworks that project inputs from all modalities into a common representation space.

Augmentation Techniques enhance retrieval through context enrichment and adaptive, iterative retrieval processes.

Generation Methods incorporate in-context learning, reasoning, instruction tuning, and source attribution to improve output quality and reliability.

Training Strategies focus on optimizing alignment and robustness to ensure stable and effective model performance.
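The retrieval, re-ranking, and fusion areas above fit together in a small sketch. Exact MIPS runs per modality as the first stage. Min-max normalization plus a weighted sum serves as score fusion, and a threshold acts as the filtering step. The index contents, weights, and threshold are made-up toy values. Weighted min-max fusion is just one simple score-fusion scheme chosen for illustration, not necessarily what the surveyed systems use.

```python
import heapq

def mips_top_k(query, index, k):
    """Exact maximum inner product search over one modality's index.
    ANN-based MIPS variants approximate this result at much lower cost."""
    def inner(vec):
        return sum(a * b for a, b in zip(query, vec))
    top = heapq.nlargest(k, index.items(), key=lambda kv: inner(kv[1]))
    return {doc_id: inner(vec) for doc_id, vec in top}

def min_max(scores):
    """Rescale one modality's scores to [0, 1] so modalities are comparable."""
    lo, hi = min(scores.values()), max(scores.values())
    if hi == lo:
        return {doc_id: 1.0 for doc_id in scores}
    return {doc_id: (s - lo) / (hi - lo) for doc_id, s in scores.items()}

def fuse_and_filter(per_modality, weights, threshold):
    """Score-level fusion: weighted sum of normalized per-modality scores,
    then drop documents whose fused score falls below `threshold`."""
    fused = {}
    for modality, scores in per_modality.items():
        if not scores:
            continue  # this modality returned nothing
        for doc_id, s in min_max(scores).items():
            fused[doc_id] = fused.get(doc_id, 0.0) + weights[modality] * s
    kept = [doc_id for doc_id, s in fused.items() if s >= threshold]
    return sorted(kept, key=lambda d: fused[d], reverse=True)

# Hypothetical per-modality indexes and query embeddings.
text_index = {"doc1": [1.0, 0.0], "doc2": [0.5, 0.5], "doc3": [0.0, 1.0]}
image_index = {"doc2": [0.9, 0.1], "doc3": [0.2, 0.8], "doc4": [0.1, 0.1]}
per_modality = {
    "text": mips_top_k([1.0, 0.0], text_index, k=3),
    "image": mips_top_k([1.0, 0.0], image_index, k=2),
}
print(fuse_and_filter(per_modality, {"text": 0.5, "image": 0.5}, threshold=0.3))
```

Normalizing before summing matters because raw scores from different encoders (or from a text-only scorer) live on different scales. Without it, one modality could dominate the fused ranking.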

Figure 3 illustrates the key application domains of multimodal RAG systems, covering healthcare and medicine, software engineering, fashion and e-commerce, entertainment and social computing, and emerging applications.

Overall, this survey presents a comprehensive multimodal RAG framework, covering retrieval, modality alignment, fusion, augmentation, generation, and evaluation.

But in my view, it lacks a deep exploration of key challenges, such as:

Cross-modal alignment: How can representations of text, images, and videos be unified? What strategies can improve retrieval efficiency across different modalities?

Computational cost: Multimodal RAG requires significant computation, especially for video and long-text retrieval. How can we reduce computational overhead?