O1 Replication Journey Part 1: From Shortcut Hunters to True Explorers

Mastering the LLM Reasoning Exploration Journey, Not Just the Answer

OpenAI's o1 is known worldwide for its remarkable reasoning abilities, sparking a surge of interest in reasoning LLM research.

Among efforts to replicate o1’s breakthroughs, o1 Replication Journey stands out as a significant one. As of early March 2025, the project consists of three parts.

In this article, we’ll talk about Part 1: A Strategic Progress Report. Whether you're simply curious about the principles behind OpenAI o1 or a technical expert looking to replicate it, you'll find this guide helpful.

Vivid Description

Imagine you’re playing a treasure hunt game.

Shortcut learning is like someone directly telling you where the treasure is—you just run straight there and grab it. It’s quick, but if the treasure moves to a new location next time, you’ll have no idea how to find it.

Journey learning, on the other hand, is like navigating with a map, step by step. Even if you take a wrong turn, you learn why it was wrong and won’t make the same mistake again. Over time, even without a map, you’ll develop the skills to find the treasure in completely new places.

That’s the essence of journey learning—it’s not just about solving a problem quickly but about understanding the entire exploration process, including trial and error, reflection, and correction.

Like a true adventurer, you don’t just focus on reaching the destination; you learn from the journey itself—the landscapes you pass through and the challenges you overcome. This way, your knowledge becomes deeper, and you’re better prepared for new challenges ahead.

This idea closely echoes the name of this newsletter, "AI Exploration Journey", which reflects the same spirit of discovery and progress.

Overview

Most existing machine learning and LLM training methods, such as supervised fine-tuning, fall under shortcut learning. These approaches rely heavily on data, generalize poorly beyond it, and lack self-correction capabilities.

O1 Replication Journey Part 1 introduces a new paradigm called journey learning, which contrasts with traditional shortcut learning.

As shown in Figure 1, shortcut learning focuses on supervised training of a direct root-to-leaf shortcut path. Journey learning, instead of focusing solely on the final result, applies supervision to the entire exploration path, including trial, error, reflection, and correction, mirroring how humans solve problems step by step.

Figure 2 illustrates the overall process of Journey Learning, which consists of four steps:

1. Exploring solution paths using tree-search algorithms (e.g., Monte Carlo Tree Search, MCTS).

2. Selecting strategic nodes to build promising exploration paths.

3. Leveraging LLMs to analyze previous steps, identify reasoning errors, and refine the trajectories.

4. Collecting trajectories, including both reflection and correction steps, and using them to fine-tune the LLMs. The improved models are then used in subsequent training iterations.

In my view, any kind of learning comes down to two key parts: training data (steps 1-3) and training methods (step 4). We will explain them one by one.
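To make the four steps concrete, here is a minimal Python sketch of the journey learning loop. It is not the project's code: `explore`, `select`, `refine`, and `fine_tune` are hypothetical callables standing in for tree search, strategic node selection, LLM-based refinement, and fine-tuning, and the toy usage replaces the model with a simple counter.

```python
from typing import Callable, List, TypeVar

M = TypeVar("M")          # whatever object represents the model
Trajectory = List[str]    # a reasoning path as a list of steps


def journey_learning(
    model: M,
    problems: List[str],
    explore: Callable[[M, str], List[Trajectory]],           # step 1: tree search (hypothetical)
    select: Callable[[List[Trajectory]], List[Trajectory]],  # step 2: pick promising paths (hypothetical)
    refine: Callable[[M, Trajectory], Trajectory],           # step 3: reflect on and correct a path (hypothetical)
    fine_tune: Callable[[M, List[Trajectory]], M],           # step 4: train on collected paths (hypothetical)
    rounds: int = 1,
) -> M:
    """Run `rounds` iterations; each round's fine-tuned model drives the next round."""
    for _ in range(rounds):
        dataset: List[Trajectory] = []
        for problem in problems:
            for path in select(explore(model, problem)):
                dataset.append(refine(model, path))
        model = fine_tune(model, dataset)
    return model


# Toy usage: the "model" is just a counter of training examples seen so far.
final = journey_learning(
    model=0,
    problems=["p1", "p2"],
    explore=lambda m, p: [[p, "first step"]],
    select=lambda paths: paths,
    refine=lambda m, path: path + ["Wait, let me check that step."],
    fine_tune=lambda m, data: m + len(data),
    rounds=2,
)
print(final)  # 4
```

The design point is simply that the model returned by step 4 is fed back into step 1, which is what makes the process iterative.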

Training Data

Since the goal is to replicate OpenAI o1, it’s natural to study how o1 thinks during reasoning.

Characteristics of Training Data

O1 Replication Journey Part 1 extracted the long thoughts underlying o1's reasoning when it solves complex equations, as shown in Figure 2.

Based on this analysis, the long-thought training data to be constructed should have the following characteristics:

Iterative Problem-Solving: It begins by defining functions and then gradually explores related expressions, breaking down complex equations into simpler parts, reflecting a structured and methodical approach.

Key Thought Indicators: Terms like "Therefore" for conclusions, "Alternatively" for exploring different options, "Wait" for pausing to reflect, and "Let me compute" for shifting into calculations help highlight different stages of reasoning.

Recursive and Reflective Approach: The model consistently checks and refines intermediate results, using a recursive structure to maintain consistency—just like in rigorous mathematical reasoning.

Exploration of Hypotheses: The model explores different hypotheses, refining its approach as it gathers more information, showing flexibility in its reasoning.

Conclusion and Verification: In the end, it solves the equations and verifies the results.
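As a rough illustration of how the key thought indicators mark different reasoning stages, the sketch below counts them in a reasoning trace. The phrase-to-stage mapping mirrors the examples above; the function name and the choice of case-sensitive, whole-word regex matching are my own assumptions, and real traces would need a much broader phrase list.

```python
import re
from collections import Counter

# Assumed mapping from indicator phrase to reasoning stage, taken from the examples above.
THOUGHT_INDICATORS = {
    "Therefore": "conclusion",
    "Alternatively": "exploration",
    "Wait": "reflection",
    "Let me compute": "calculation",
}


def tag_thought_stages(text: str) -> Counter:
    """Count whole-word, case-sensitive occurrences of each indicator, grouped by stage."""
    counts: Counter = Counter()
    for phrase, stage in THOUGHT_INDICATORS.items():
        pattern = r"\b" + re.escape(phrase) + r"\b"
        counts[stage] += len(re.findall(pattern, text))
    return counts


trace = (
    "Let me compute f(2) = 2*2 + 1 = 5. Wait, the problem asks for f(-2). "
    "Alternatively, substitute -2 directly: f(-2) = 2*(-2) + 1 = -3. "
    "Therefore the answer is -3."
)
print(dict(tag_thought_stages(trace)))
# {'conclusion': 1, 'exploration': 1, 'reflection': 1, 'calculation': 1}
```

The word boundaries keep "Waiting" from being counted as a "Wait", but lowercase variants such as "wait," are missed, which is one reason this is only a sketch.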

How to Obtain Training Data

The core idea behind journey learning is to construct long thoughts with actions like reflection and backtracking.

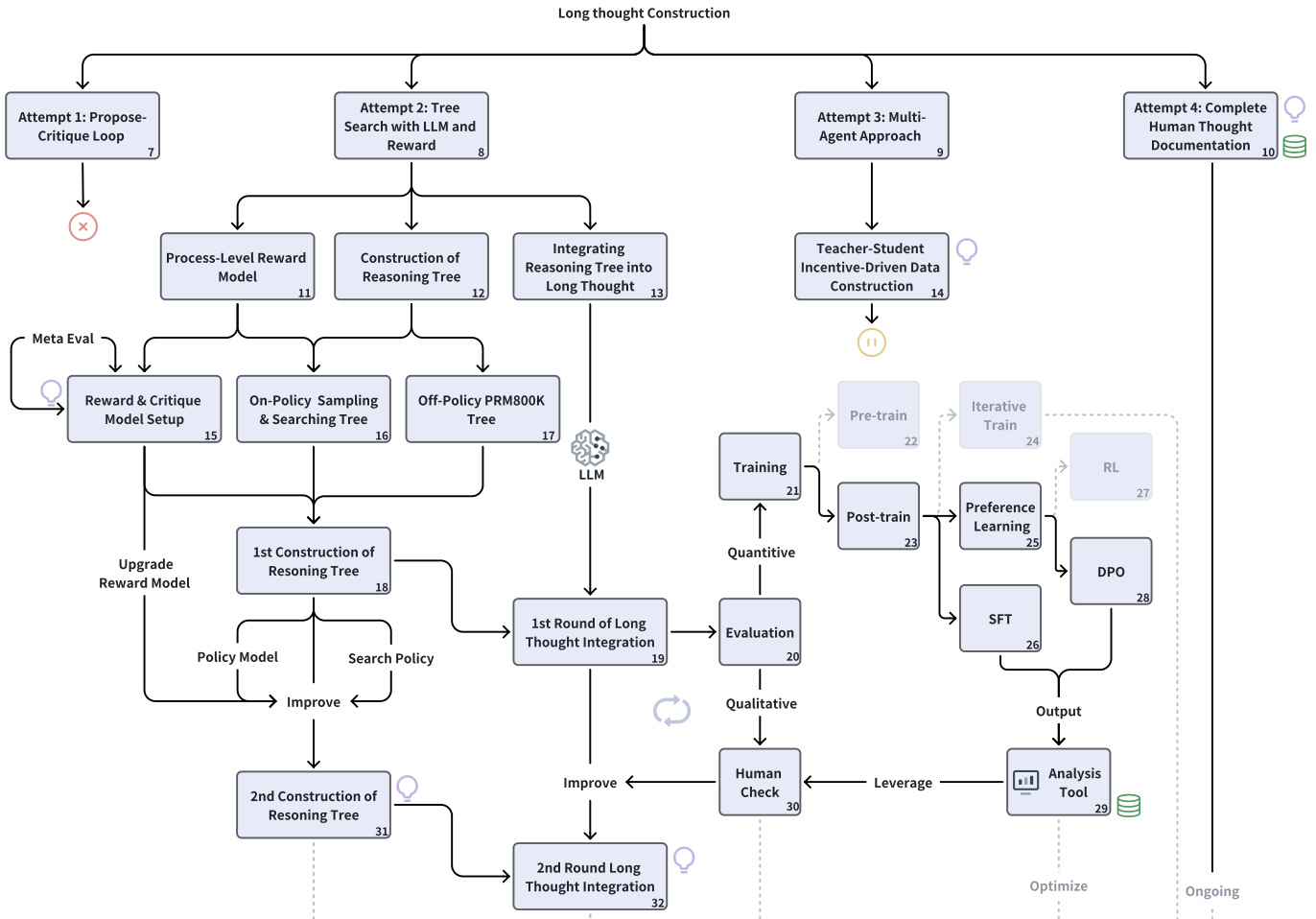

As shown in Figure 3, several approaches were explored:

Tree Search with LLM and Reward: Reasoning is structured like a tree where the root node represents the problem, and each other node represents a reasoning step. Backtracking, reflection, and a process-level reward model help refine the path to the correct solution.

Propose-Critique Loop: Unlike tree search, this method lets the model decide its next action (e.g., continue, backtrack, reflect, or terminate). If the model reaches an incorrect conclusion, it receives feedback, guiding it to reflect and correct its approach.

Multi-Agent Approach: A policy model generates reasoning steps, while a critique model evaluates them and suggests actions; long thoughts emerge from their interaction.

Complete Human Thought Process Annotation: Recording the full human reasoning process, which naturally involves reflection and revision, provides a high-quality dataset for training.
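The propose-critique loop described above can be sketched as follows. This is an illustration under assumptions, not the project's implementation: `propose` and `critique` are hypothetical callables wrapping an LLM and a feedback source, the action names follow the description above, and every move, including wrong ones, is kept so the final trace records the whole journey.

```python
from typing import Callable, List, Optional, Tuple

ACTIONS = {"continue", "backtrack", "reflect", "terminate"}


def propose_critique_loop(
    problem: str,
    propose: Callable[[str, List[str]], Tuple[str, str]],  # returns (action, text); hypothetical
    critique: Callable[[str, List[str]], Optional[str]],   # returns feedback, or None if accepted; hypothetical
    max_steps: int = 20,
) -> List[str]:
    thought: List[str] = []
    for _ in range(max_steps):
        action, text = propose(problem, thought)
        if action not in ACTIONS:
            raise ValueError(f"unknown action: {action}")
        # Keep every move, including wrong turns, backtracking, and reflection.
        thought.append(text)
        if action == "terminate":
            feedback = critique(problem, thought)
            if feedback is None:      # conclusion accepted
                return thought
            thought.append(feedback)  # feedback prompts the next reflection
    return thought


# Toy usage with a scripted proposer and a critic that only accepts "x = 2".
moves = iter([
    ("continue", "x + 1 = 3, so subtract 1 from both sides."),
    ("terminate", "x = 4"),
    ("reflect", "Wait, 3 - 1 = 2, not 4."),
    ("terminate", "x = 2"),
])
trace = propose_critique_loop(
    "Solve x + 1 = 3",
    propose=lambda problem, thought: next(moves),
    critique=lambda problem, thought: None if thought[-1] == "x = 2" else "Feedback: the answer is incorrect.",
)
for line in trace:
    print(line)
```

Unlike tree search, nothing here enumerates branches: the proposer itself decides when to continue, reflect, or stop, and the critic only intervenes at a conclusion.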

Among these four methods, tree search is the most important approach.

Another mainstream approach not mentioned here is distillation from advanced models: a shortcut approach that distills data from advanced reasoning LLMs (like o1) and fine-tunes base models (such as Qwen2.5-32B-Instruct) to mimic their reasoning style.

Policy Model and Process-Level Reward Model

In the process of building training data, two key models play a crucial role:

Policy Model: Driving the Reasoning Process

The policy model handles step-by-step reasoning. It starts with the given problem (the root node) and continuously adds reasoning steps as child nodes, constructing a reasoning tree. At each step, it generates multiple possible next moves, which are then considered as new branches in the tree.

O1 Replication Journey Part 1 fine-tuned DeepSeekMath-7B-Base on the Abel dataset, which is particularly useful for controlling the generation of individual reasoning steps.
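A reasoning tree of this kind needs little more than a node type that remembers its parent. The sketch below is a minimal Python version under assumptions: `policy` is a hypothetical callable standing in for the fine-tuned policy model (it takes the steps so far and returns candidate next steps), and the `correct` field is left empty for a reward model to fill in later.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class Node:
    step: str  # text of this reasoning step (the root holds the problem)
    parent: Optional["Node"] = field(default=None, repr=False, compare=False)
    children: List["Node"] = field(default_factory=list)
    correct: Optional[bool] = None  # label to be assigned later by a reward model

    def path(self) -> List[str]:
        """Steps from the root down to this node."""
        steps, node = [], self
        while node is not None:
            steps.append(node.step)
            node = node.parent
        return steps[::-1]


def expand(node: Node, policy: Callable[[List[str], int], List[str]], n: int) -> List[Node]:
    """Ask the (hypothetical) policy for n candidate next steps and attach them as children."""
    for step in policy(node.path(), n):
        node.children.append(Node(step=step, parent=node))
    return node.children


# Toy usage with a stand-in policy that just numbers its candidates.
root = Node("Problem: solve x + 1 = 3")
toy_policy = lambda steps, n: [f"candidate {i} at depth {len(steps)}" for i in range(n)]
for child in expand(root, toy_policy, 3):
    expand(child, toy_policy, 2)
print(root.children[0].children[1].path())
# ['Problem: solve x + 1 = 3', 'candidate 0 at depth 1', 'candidate 1 at depth 2']
```

Excluding `parent` from `repr` and comparison avoids infinite recursion through the parent/child cycle.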

Process-Level Reward Model: Guiding the Search

This model evaluates the correctness of each reasoning step (e.g., steps delimited by line numbers), streamlining the search by pruning unpromising branches and reducing computational load. In each iteration of reasoning tree expansion, the reward model scores all candidate steps, and the top K are kept for the next round.

O1 Replication Journey Part 1 experimented with different reward models, including Math-Shepherd and o1-mini. Ultimately, o1-mini was chosen because it provided the most accurate assessment of whether each step was correct.

Each node in the reasoning tree is labeled by the reward model, indicating whether the step is correct or incorrect.
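The prune-and-keep-top-K step can be illustrated with a small beam-search sketch. It assumes the reward model returns a numeric score for a partial path (for example, an estimated probability that its last step is correct) rather than only a correct/incorrect label; `policy` and `prm` are hypothetical callables, and `k` and `branching` are illustrative parameters, not values from the project.

```python
import heapq
from typing import Callable, List

Path = List[str]


def beam_step(
    beam: List[Path],
    policy: Callable[[Path, int], List[str]],  # proposes candidate next steps (hypothetical)
    prm: Callable[[Path], float],              # scores a partial path (hypothetical)
    k: int,
    branching: int,
) -> List[Path]:
    """Expand every path in the beam, score all candidates, keep the top k."""
    scored = []
    for path in beam:
        for step in policy(path, branching):
            new_path = path + [step]
            scored.append((prm(new_path), new_path))
    # key= avoids comparing paths on score ties; everything outside the top k is pruned.
    best = heapq.nlargest(k, scored, key=lambda item: item[0])
    return [p for _, p in best]


# Toy usage: the stand-in reward model prefers steps ending in "b", then "c", then "a".
scores = {"a": 0.2, "b": 0.9, "c": 0.5}
toy_policy = lambda path, n: [f"step{len(path)}{s}" for s in "abc"[:n]]
toy_prm = lambda path: scores[path[-1][-1]]
beam = [["Problem"]]
for _ in range(2):
    beam = beam_step(beam, toy_policy, toy_prm, k=2, branching=3)
print(beam)
# [['Problem', 'step1b', 'step2b'], ['Problem', 'step1c', 'step2b']]
```

Because only k paths survive each round, the number of reward-model calls grows linearly with depth instead of exponentially, which is the computational saving the text refers to.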

Overall, the policy model generates reasoning steps, and the process-level reward model evaluates their quality. Together, they create a trial-and-error reasoning framework.

Deriving a Long Thought from Reasoning Tree

Once the reasoning tree is built, the next step is to derive a detailed reasoning path that captures the trial-and-error process.

Using depth-first search (DFS), the tree is traversed step by step. When the traversal reaches a node on the correct path, it may randomly select a child node that leads to an incorrect outcome and explore it, so the resulting thought contains a wrong turn followed by backtracking.

To keep the thought process smooth and coherent, GPT-4o is used to refine it while keeping all steps, including incorrect steps, reflections, and corrections. This preserves the full learning journey, making the reasoning process more transparent and natural.
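The traversal can be sketched as a recursive depth-first walk. This is my reading of the description, not the project's code: each node carries a `correct` flag meaning it lies on a path to the right answer (standing in for the reward model's labels), `detour_prob` is a hypothetical knob for how often a wrong child is explored, and the backtracking sentence is a placeholder that a model like GPT-4o would later rewrite into natural language.

```python
import random
from dataclasses import dataclass, field
from typing import List

BACKTRACK = "This path leads to a wrong answer. Let me go back."  # placeholder wording


@dataclass
class Node:
    step: str
    correct: bool  # True if the step lies on a path to the right answer
    children: List["Node"] = field(default_factory=list)


def dfs_long_thought(node: Node, rng: random.Random, detour_prob: float, thought: List[str]) -> List[str]:
    thought.append(node.step)
    if not node.correct:
        # Inside a wrong branch: keep going deeper until a dead end.
        if node.children:
            dfs_long_thought(rng.choice(node.children), rng, detour_prob, thought)
        return thought
    right = [c for c in node.children if c.correct]
    wrong = [c for c in node.children if not c.correct]
    # On the correct path: sometimes detour into a wrong child, then backtrack.
    if wrong and rng.random() < detour_prob:
        dfs_long_thought(rng.choice(wrong), rng, detour_prob, thought)
        thought.append(BACKTRACK)
    if right:
        dfs_long_thought(right[0], rng, detour_prob, thought)
    return thought


tree = Node("Solve x^2 = 4 with x > 0", True, [
    Node("Take the negative root: x = -2", False, [Node("So the answer is -2", False)]),
    Node("Take the positive root: x = 2", True, [Node("Check: 2^2 = 4 and 2 > 0", True)]),
])
for line in dfs_long_thought(tree, random.Random(0), detour_prob=1.0, thought=[]):
    print(line)
```

With `detour_prob=0.0` the same walk reduces to the plain correct path, which is exactly the shortcut-learning sample; the detours are what turn it into a long thought.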

Training Method

The training process consists of two main stages (for experiments, deepseek-math-7b-base is used).

Supervised Fine-Tuning (SFT):

Shortcut Learning: Fine-tunes only on correct intermediate steps and final answers. The training data includes the Abel dataset (120K examples) and the PRM800K dataset (6,998 examples).

Journey Learning: Introduces more complex reasoning by incorporating long thoughts that were previously constructed (327 examples).

Direct Preference Optimization (DPO): The model samples 20 candidate responses for problems from the MATH training set and is then trained on five sets of preference pairs built from these responses to refine its decision-making.
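For readers less familiar with DPO, the sketch below shows the two pieces in scalar form: turning sampled responses into preference pairs, and computing the standard DPO loss, -log σ(β · margin), for one pair. How the project actually builds its pairs is not described here, so pairing a correct (chosen) response with an incorrect (rejected) one is an assumption, as are the helper names and the default `beta`.

```python
import math
import random
from typing import Callable, List, Sequence, Tuple


def build_preference_pairs(
    responses: Sequence[str],
    is_correct: Callable[[str], bool],  # e.g. final-answer matching (assumption)
    num_pairs: int,
    rng: random.Random,
) -> List[Tuple[str, str]]:
    """Pair a correct (chosen) response with an incorrect (rejected) one."""
    chosen = [r for r in responses if is_correct(r)]
    rejected = [r for r in responses if not is_correct(r)]
    if not chosen or not rejected:
        return []  # no contrast available for this problem
    return [(rng.choice(chosen), rng.choice(rejected)) for _ in range(num_pairs)]


def dpo_loss(
    policy_chosen_logp: float,
    policy_rejected_logp: float,
    ref_chosen_logp: float,
    ref_rejected_logp: float,
    beta: float = 0.1,
) -> float:
    """-log sigmoid(beta * margin), where margin compares policy and reference log-ratios."""
    margin = beta * ((policy_chosen_logp - ref_chosen_logp)
                     - (policy_rejected_logp - ref_rejected_logp))
    # Numerically stable form of log(1 + exp(-margin)).
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))


# Toy usage: 20 sampled responses, with even-numbered ones treated as correct.
responses = [f"response {i}" for i in range(20)]
pairs = build_preference_pairs(responses, lambda r: int(r.split()[1]) % 2 == 0, 5, random.Random(0))
print(len(pairs))  # 5
print(dpo_loss(-1.0, -5.0, -3.0, -3.0))  # below log(2), since the policy favors the chosen answer
```

When the policy matches the reference model, the margin is zero and the loss is log 2; raising the chosen response's likelihood relative to the rejected one pushes it lower.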

It's worth mentioning that the training process does not rely on reinforcement learning (RL).

Commentary

Overall, o1 Replication Journey Part 1 is organized into four key stages: Initial Assessment, Multi-path Exploration, Iterative Improvement, and Current Results. With only 327 training samples, journey learning outperformed shortcut learning on MATH500 by 8.4% and 8.0% in the two reported comparisons.

After going through this study, I have a few thoughts to share for your reference.

I find it a bit disappointing that it doesn’t disclose the details of how the thought structure of OpenAI o1 (shown in Figure 2) was obtained. Of course, this is understandable given OpenAI’s copyright restrictions.

Accuracy of the Reward Model: We know that the effectiveness of journey learning depends heavily on how accurate the reward model is. While the experiment states that o1-mini performs best across different datasets, it remains a closed-source model. This raises an important question: How can we develop a more reliable and transparent reward model to further improve Journey Learning?

Efficiency of Long Thought Reasoning: Various approaches have been explored for building long thoughts, including tree search, propose-critique loops, and multi-agent methods. All of them involve significant computation and search overhead. In my view, making these algorithms more efficient is a valuable direction for further research.