Welcome back. We’re now at Chapter 42 of this ongoing journey.

Traditional RAG methods and LLM-based reasoning face three major challenges:

A disconnect between retrieval and reasoning: In most RAG setups, retrieval is a static, one-off step. It doesn’t adapt based on how the reasoning unfolds, nor can it incorporate feedback from the reasoning process. As a result, knowledge integration tends to be inefficient — and hallucinations are more likely.

Limited reasoning ability in small models: While smaller language models (i.e., models with up to 7B parameters) are more efficient, they struggle with knowledge-heavy tasks. When it comes to complex reasoning or integrating information across documents, they simply don’t match the performance of large models like GPT-4o.

Structured reasoning lacks external knowledge support: Frameworks like rStar or Tree-of-Thought can explore multiple reasoning paths, but they rely heavily on the model’s internal knowledge. That becomes a problem for fact-based questions or tasks that need up-to-date information from the outside world.

MCTS-RAG (open-source code: https://github.com/yale-nlp/MCTS-RAG) is a reasoning framework that combines Monte Carlo Tree Search (MCTS) with RAG. Its goal is to boost the reasoning capabilities of small language models (SLMs) on knowledge-intensive tasks without increasing model size.

Unlike traditional RAG, which separates retrieval from reasoning, MCTS-RAG weaves them together, dynamically triggering retrieval during the reasoning process.

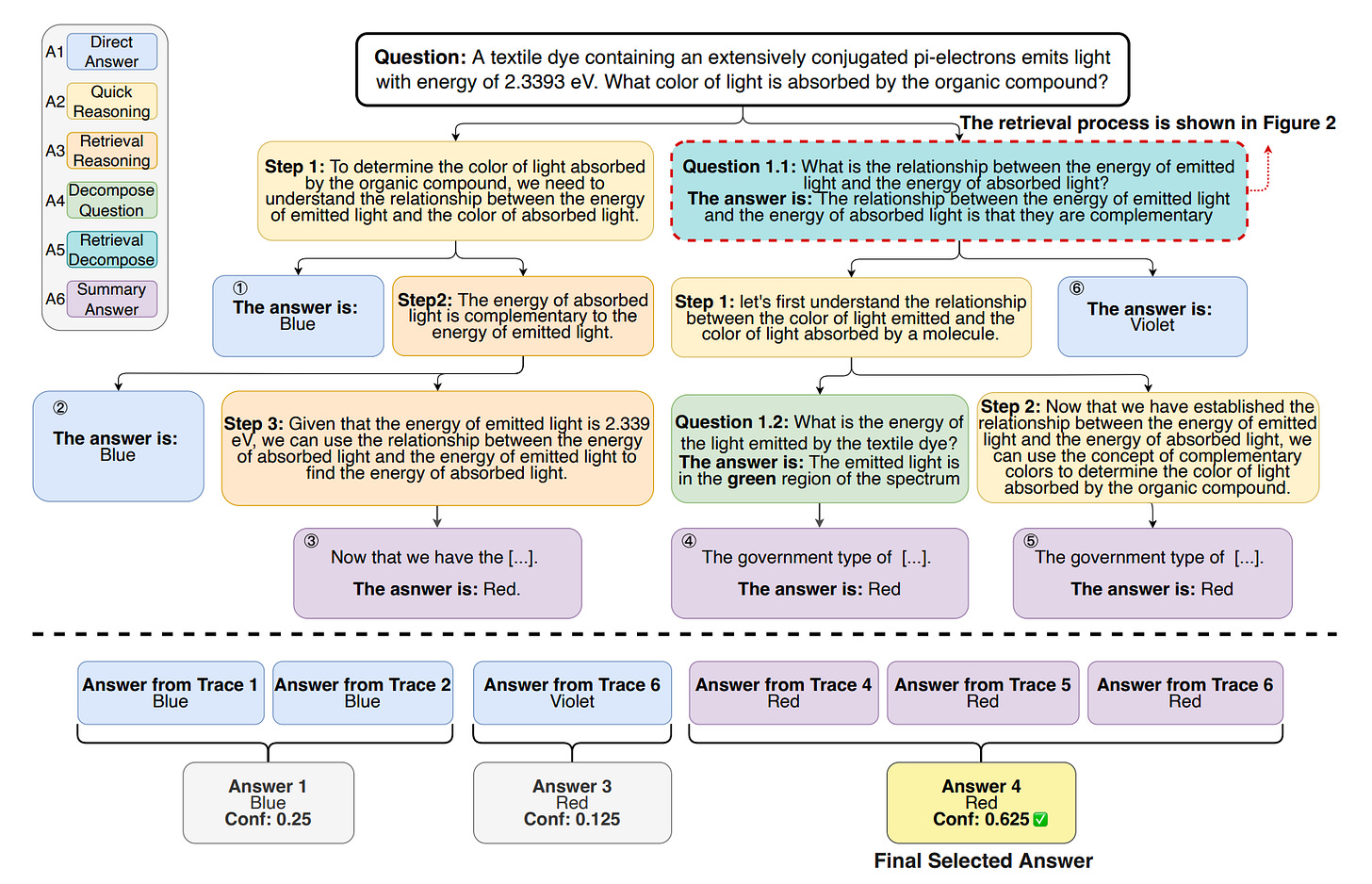

Figure 1 illustrates how MCTS-RAG tackles a multi-hop reasoning question — "A textile dye containing an extensively conjugated pi-electrons emits light with energy of 2.3393 eV. What color of light is absorbed by the organic compound?" — by tightly integrating retrieval and reasoning to explore multiple paths and select the best answer.

To answer the question, it’s important to understand the complementary relationship between emitted and absorbed light.

MCTS-RAG starts by breaking the question down into smaller sub-questions (e.g., mapping energy levels to colors).
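To make the "energy to color" sub-question concrete, here is a minimal sketch of that first hop using the photon relation λ = hc / E. The color band boundaries are a common approximate convention that I am assuming for illustration, and the function names are hypothetical. None of this comes from the MCTS-RAG code.

```python
# h*c expressed in eV·nm (rounded CODATA value).
HC_EV_NM = 1239.841984

# Approximate visible-light bands in nm. The boundaries are a common
# convention, not exact physical limits (assumption for illustration).
COLOR_BANDS = [
    (380.0, 450.0, "violet"),
    (450.0, 495.0, "blue"),
    (495.0, 570.0, "green"),
    (570.0, 590.0, "yellow"),
    (590.0, 620.0, "orange"),
    (620.0, 750.0, "red"),
]


def photon_wavelength_nm(energy_ev: float) -> float:
    """Convert a photon energy in eV to a wavelength in nm."""
    if energy_ev <= 0:
        raise ValueError("photon energy must be positive")
    return HC_EV_NM / energy_ev


def color_name(wavelength_nm: float) -> str:
    """Look up the approximate color band for a wavelength."""
    for low, high, name in COLOR_BANDS:
        if low <= wavelength_nm < high:
            return name
    return "outside visible range"


wavelength = photon_wavelength_nm(2.3393)
emitted_color = color_name(wavelength)
print(f"{wavelength:.1f} nm -> {emitted_color}")  # 530.0 nm -> green
```

This only covers the first hop: it identifies the color of the emitted light. Working out which color is absorbed is a separate reasoning step, and that is exactly where the different paths in Figure 1 can diverge.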

Different reasoning paths take different actions. Some rely on quick inference, others on retrieval or further decomposition.
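How does the search decide which action to try next? This post does not spell out the selection rule, so the sketch below assumes the standard UCT rule from textbook MCTS. The action names (`direct_answer`, `retrieve`, `decompose`), the statistics, and the exploration constant are all hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class ActionStats:
    name: str
    visits: int = 0
    total_reward: float = 0.0


def uct_score(stats: ActionStats, parent_visits: int, c: float = 1.4) -> float:
    """Standard UCT: average reward plus an exploration bonus."""
    if stats.visits == 0:
        return math.inf  # unvisited actions are always tried first
    exploit = stats.total_reward / stats.visits
    explore = c * math.sqrt(math.log(parent_visits) / stats.visits)
    return exploit + explore


def select_action(children: list[ActionStats], c: float = 1.4) -> ActionStats:
    """Pick the child action with the highest UCT score."""
    parent_visits = max(1, sum(child.visits for child in children))
    return max(children, key=lambda child: uct_score(child, parent_visits, c))


children = [
    ActionStats("direct_answer", visits=10, total_reward=6.0),
    ActionStats("retrieve", visits=2, total_reward=1.6),
    ActionStats("decompose", visits=0, total_reward=0.0),
]
first_pick = select_action(children).name  # the unvisited action

# After "decompose" has been tried a few times, the rarely visited but
# well-rewarded "retrieve" action gets the largest score.
children[2] = ActionStats("decompose", visits=3, total_reward=1.5)
second_pick = select_action(children).name
print(first_pick, second_pick)
```

The point of the exploration bonus is that an action such as retrieval still gets tried even when quick inference has looked good so far.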

When a retrieval action is triggered (Figure 2), MCTS-RAG queries external knowledge sources to support color identification.

Each reasoning path (Trace 1–6) produces a candidate answer — such as blue, red, or violet — along with a confidence score. These scores reflect how trustworthy each path’s answer is.

In the end, MCTS-RAG uses a weighted voting mechanism to pick the final answer: the one that is semantically consistent across paths and carries the highest combined confidence.
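A confidence-weighted vote of this kind can be sketched in a few lines. The trace answers and scores below are invented for illustration and are not taken from Figure 1. The `normalize` helper is a simple stand-in for whatever semantic matching the real system uses to group equivalent answers.

```python
from collections import defaultdict


def normalize(answer: str) -> str:
    """Crude stand-in for semantic matching: lowercase, collapse spaces."""
    return " ".join(answer.lower().split())


def weighted_vote(traces: list[tuple[str, float]]) -> tuple[str, float]:
    """Sum confidence per normalized answer and return the winner
    together with its share of the total confidence."""
    totals: dict[str, float] = defaultdict(float)
    for answer, confidence in traces:
        totals[normalize(answer)] += confidence
    if not totals:
        raise ValueError("no traces to vote on")
    best = max(totals, key=totals.get)
    return best, totals[best] / sum(totals.values())


# Hypothetical (answer, confidence) pairs for six traces.
traces = [
    ("Blue", 0.6),
    ("red", 0.7),
    ("blue", 0.5),
    ("violet", 0.3),
    ("Blue ", 0.4),
    ("red", 0.2),
]
winner, share = weighted_vote(traces)
print(winner, round(share, 3))  # blue 0.556
```

Note that in this toy data the single most confident trace says "red", yet "blue" wins because three paths agree on it. That is the practical difference between weighted voting and simply taking the top-scoring path.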

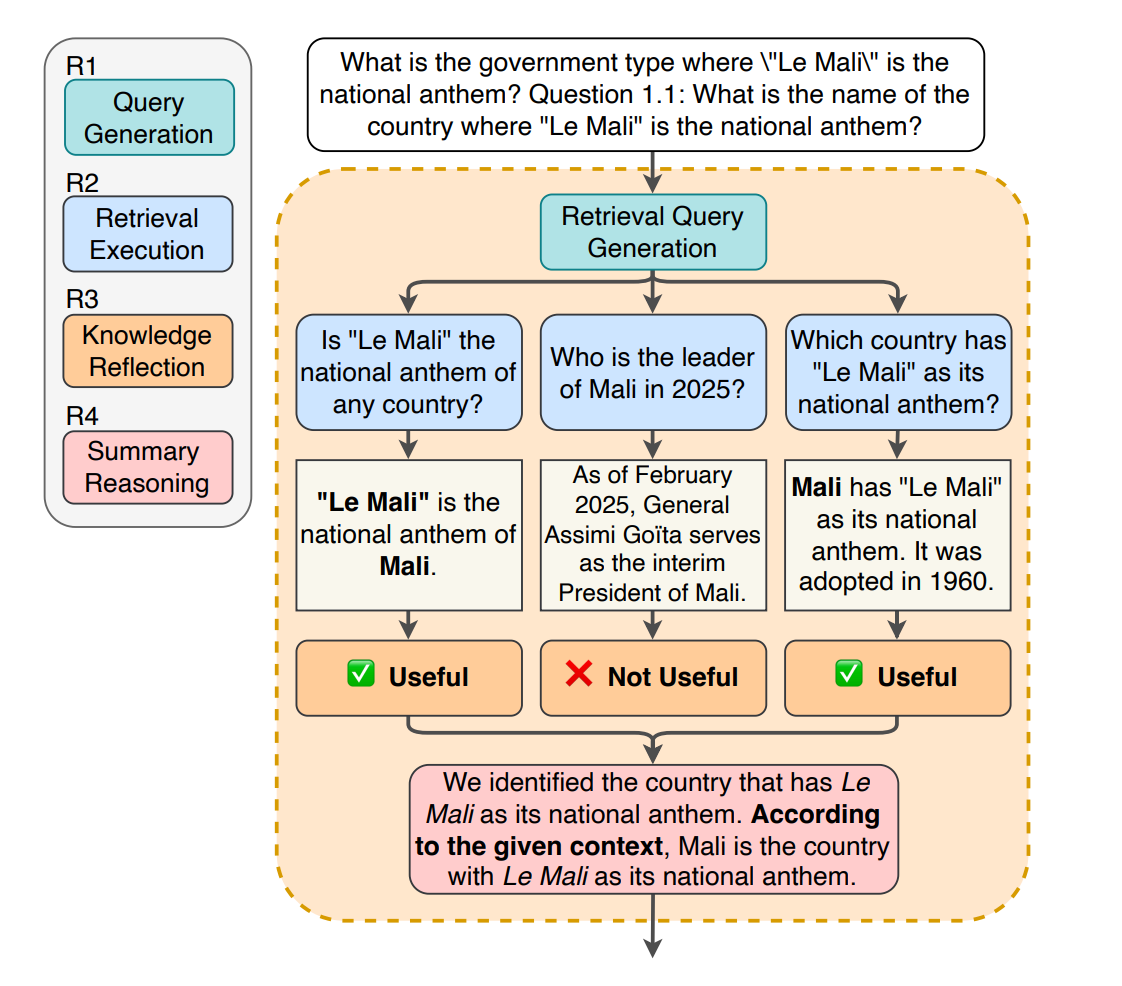

Figure 2 breaks down how MCTS-RAG carries out a “Retrieval + Decompose” action step by step. The process unfolds in four stages:

R1: Query Generation: A focused search query based on the current sub-question is formulated.

R2: Retrieval Execution: An external retriever is called with that query to gather relevant information.

R3: Knowledge Reflection: The retrieved content is evaluated for relevance to, and consistency with, the question at hand.

R4: Summary Reasoning: Useful knowledge is integrated into the ongoing reasoning path to help move the answer forward.
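The four stages above can be sketched as one function that takes each stage as a pluggable callable. In a real system those callables would be LLM prompts and a retriever. Here they are toy stand-ins, and every name, along with the tiny corpus, is hypothetical rather than the project's actual API.

```python
from typing import Callable


def retrieval_step(
    sub_question: str,
    reasoning_so_far: list[str],
    generate_query: Callable[[str], str],
    retrieve: Callable[[str], list[str]],
    is_relevant: Callable[[str, str], bool],
    summarize: Callable[[str, list[str]], str],
) -> list[str]:
    query = generate_query(sub_question)                          # R1
    passages = retrieve(query)                                    # R2
    kept = [p for p in passages if is_relevant(sub_question, p)]  # R3
    if not kept:
        return reasoning_so_far  # nothing useful, path is unchanged
    return reasoning_so_far + [summarize(sub_question, kept)]     # R4


# Toy stand-ins (assumptions for illustration only).
CORPUS = [
    "Light with a wavelength near 530 nm appears green.",
    "A light rollout policy keeps Monte Carlo Tree Search cheap.",
]


def toy_query(question: str) -> str:
    return question.lower().rstrip("?")


def toy_retrieve(query: str) -> list[str]:
    # Keyword overlap on words longer than three letters.
    words = {w for w in query.split() if len(w) > 3}
    return [p for p in CORPUS if words & set(p.lower().rstrip(".").split())]


def toy_is_relevant(question: str, passage: str) -> bool:
    # Keep passages that mention a number from the question.
    numbers = [t for t in question.rstrip("?").split() if t.isdigit()]
    return any(n in passage for n in numbers)


def toy_summarize(question: str, kept: list[str]) -> str:
    return "Evidence: " + " ".join(kept)


result = retrieval_step(
    "What color is light at 530 nm?",
    ["Emitted wavelength is about 530 nm."],
    toy_query, toy_retrieve, toy_is_relevant, toy_summarize,
)
print(result)
```

Both toy passages match the keyword search in R2, but only the first survives the reflection check in R3. That filtering step is what keeps a noisy retriever from polluting the reasoning path.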

Thoughts and Insights

Unlike the static pipeline of traditional RAG, MCTS-RAG introduces a new paradigm of "reasoning-driven knowledge augmentation." It offers more flexibility and control than chain-of-thought (CoT) prompting, and could be a key step toward the next generation of intelligent Q&A systems.

That said, I have a few thoughts.

While a small model with MCTS-RAG is more efficient than relying on a large LLM end-to-end, the rollout phase in MCTS still introduces a non-negligible computation cost, since every rollout means additional model calls.

Also, the reward is defined as the product of action scores along the reasoning path, but it’s worth questioning whether this fully captures the "correctness" of a reasoning trajectory.
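To see why this is worth questioning, here is a minimal sketch of a product-style reward. It assumes each action score lies between 0 and 1, and the paths and scores are made up for illustration.

```python
import math


def path_reward(action_scores: list[float]) -> float:
    """Reward of a reasoning path as the product of its action scores."""
    return math.prod(action_scores)


# Hypothetical paths with per-action scores in [0, 1].
short_path = [0.9, 0.9]
long_path = [0.9, 0.9, 0.9, 0.9, 0.9]
weak_link = [0.95, 0.95, 0.3, 0.95]

for name, scores in [
    ("short", short_path),
    ("long", long_path),
    ("weak link", weak_link),
]:
    print(f"{name}: {path_reward(scores):.4f}")
```

Two properties show up right away. A longer path scores lower than a shorter one even when every step is equally good (about 0.59 versus 0.81), and a single low-scoring step drags the whole path down (about 0.26). Neither property is obviously the same thing as the final answer being correct.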

All in all, MCTS-RAG offers a meaningful methodological shift. In my view, it tries to bridge the gap between structured algorithms and unstructured language reasoning — essentially steering language generation through a discrete control flow. That’s where its promise lies, but also its biggest risk.