Welcome to the 34th installment of this glamorous series. In today’s article, we’ll explore two enchanting topics:

SmolDocling: Turning PDFs into Structured Gold with a Tiny Model

Know the Story, Not Just the Words: Upgrading RAG with Causal Graphs

SmolDocling: Turning PDFs into Structured Gold with a Tiny Model

Model: https://hf-mirror.com/ds4sd/SmolDocling-256M-preview

In previous articles, we’ve explored various advances in PDF parsing and document intelligence.

Today, let’s take a look at SmolDocling, a compact vision-language model with just 256 million parameters, built specifically for structured document understanding and end-to-end document conversion. It is built on top of Hugging Face’s SmolVLM-256M.

(For more on Docling, see: AI Innovations and Trends 03: LightRAG, Docling, DRIFT, and More).

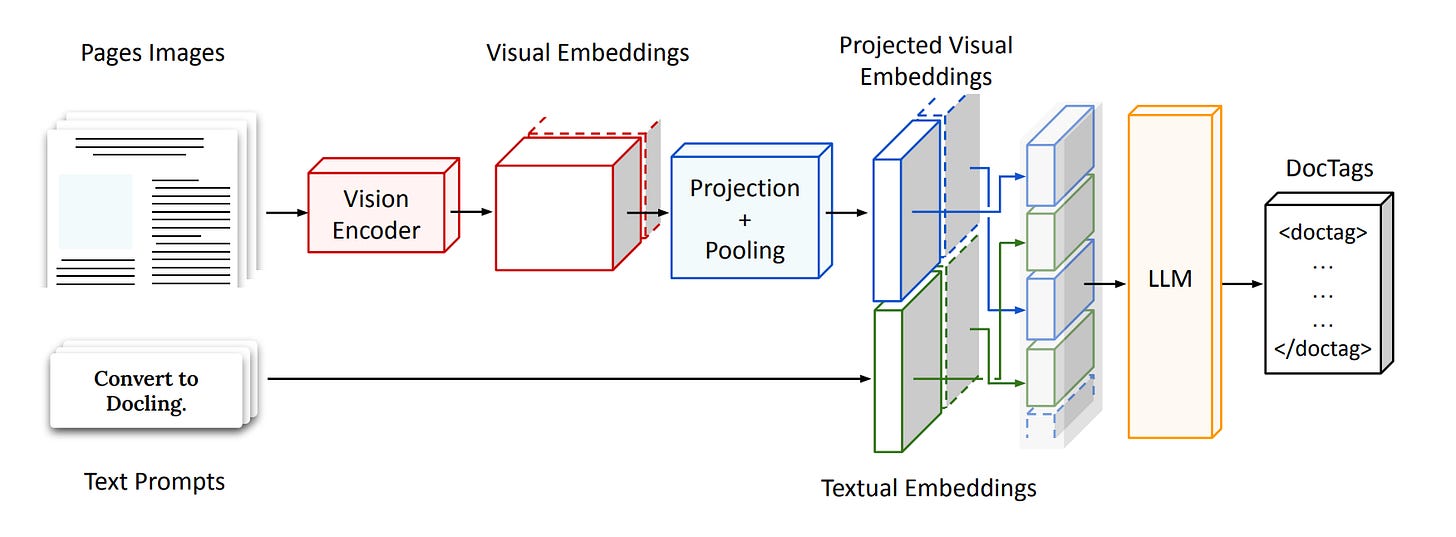

As shown in Figure 1, SmolDocling is a lightweight model that combines a vision encoder with a language model to process document images, turning them into structured DocTags sequences.

It starts by encoding the image with a vision encoder, then reshapes the output using projection and pooling. The resulting visual features are combined with the text from the user’s prompt (sometimes interleaved) and fed into an LLM, which generates the DocTags sequence autoregressively, one token at a time.

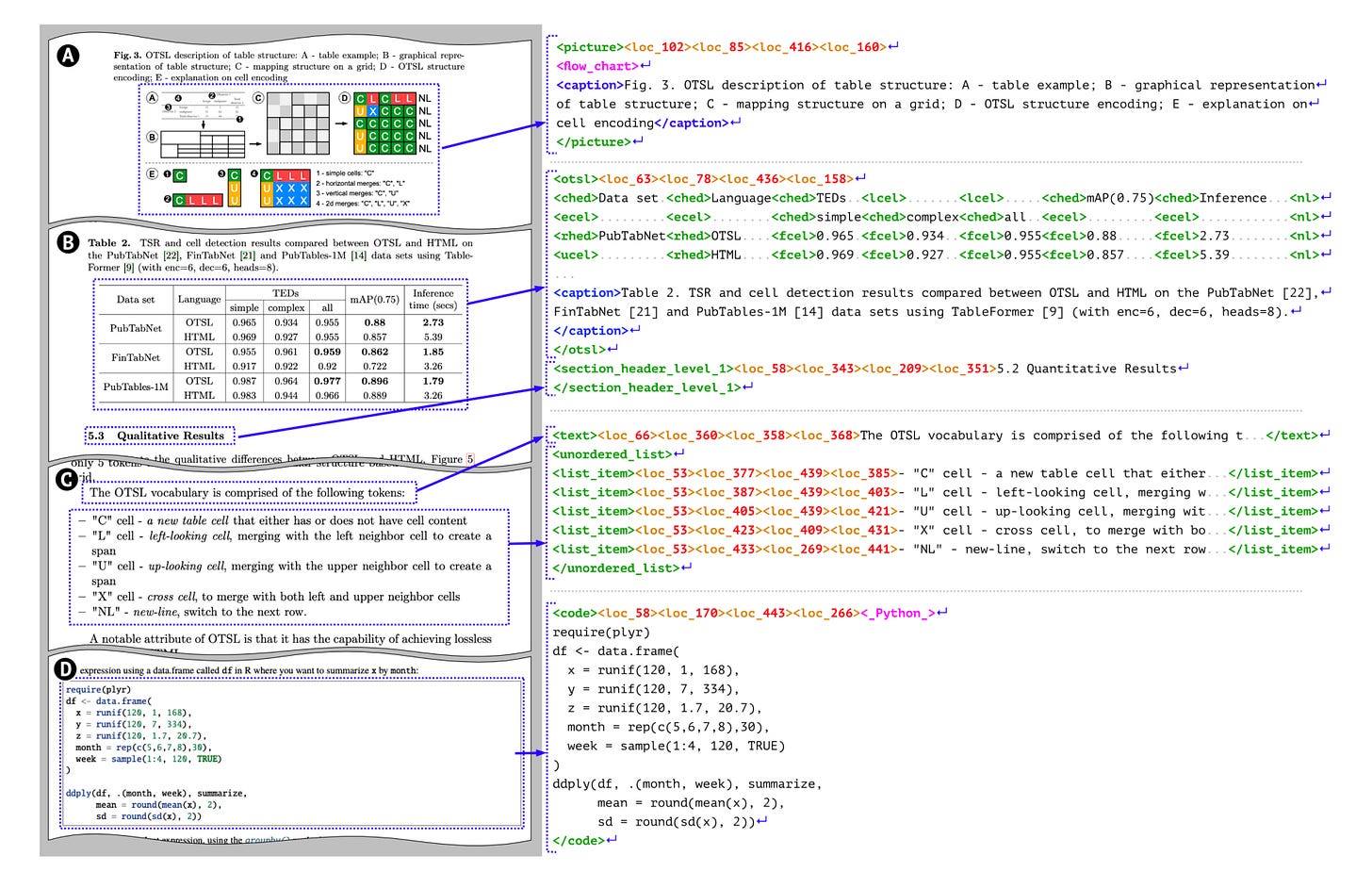

As shown in Figure 2, DocTags is a lightweight, structured markup language introduced by SmolDocling to represent elements on a document page — including their layout, semantic roles, and content. Using an XML-like tag format, it's specifically designed to make document understanding tasks more efficient and accurate.

Thoughts and Insights

While tech giants like OpenAI, Google, and Anthropic are racing toward the limits of model capability, the real, pressing needs for smaller companies, government agencies, and industry-specific applications lie elsewhere — in resource efficiency, on-premise deployment, and data security. And that’s exactly where SmolDocling fits in.

That said, I have a few concerns about SmolDocling.

One of them is the risk of information loss due to aggressive compression of visual features. SmolDocling maps each 512×512 image patch into just 64 visual tokens, meaning each token effectively represents 4,096 pixels, the equivalent of a 64×64 pixel area. Even for document images, that’s a pretty coarse resolution. Important fine-grained details like table borders, small annotations, or inline mathematical symbols could easily get lost in the process.
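The arithmetic behind that figure is easy to check. The short sketch below uses only the two numbers quoted above (512×512 patches, 64 tokens per patch); treating the 64 tokens as a uniform 8×8 grid is my own simplifying assumption for illustration, not a description of how the model actually lays out its tokens.

```python
import math

# Figures quoted in the text: one 512x512 patch becomes 64 visual tokens.
PATCH_SIDE = 512
TOKENS_PER_PATCH = 64

pixels_per_patch = PATCH_SIDE * PATCH_SIDE
pixels_per_token = pixels_per_patch // TOKENS_PER_PATCH

# Assumption for illustration only: imagine the tokens as a uniform square grid.
grid_side = math.isqrt(TOKENS_PER_PATCH)   # tokens along one side of the grid
cell_side = PATCH_SIDE // grid_side        # pixels along one side of a token's cell

print(f"{pixels_per_token} pixels per token")
print(f"roughly a {cell_side}x{cell_side} pixel cell per token")
```

Under that assumption, a thin table rule or a subscript a few pixels wide is only a small fraction of what a single token has to summarize.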

The second concern is this: is DocTags really a better training target than natural language or HTML?

As the core of SmolDocling’s design, DocTags is a handcrafted annotation format aimed at reducing redundancy and improving structural clarity. In theory, that sounds great — but in practice, it may come with trade-offs.

Unlike Markdown or HTML, which have some built-in redundancy thanks to their natural-language-like structure, DocTags may be more fragile. A single missing or incorrect tag could cause the entire output to fail, while HTML or natural language formats are often more forgiving — both humans and parsers can still infer meaning even when parts are broken or missing.
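To make the fragility concern concrete, here is a minimal sketch using only the Python standard library. The markup is a made-up XML-like string, not real DocTags syntax, and the two parsers simply stand in for a "strict" and a "forgiving" consumer; none of this is SmolDocling's own tooling.

```python
import xml.etree.ElementTree as ET
from html.parser import HTMLParser

# Hypothetical XML-like markup (NOT real DocTags): the closing </heading> is missing.
BROKEN = "<page><heading>Quarterly report<text>Revenue grew 12%.</text></page>"
FIXED = "<page><heading>Quarterly report</heading><text>Revenue grew 12%.</text></page>"


def strict_parse(markup):
    """Return the text pieces if the markup is well-formed XML, else None."""
    try:
        root = ET.fromstring(markup)
    except ET.ParseError:
        return None  # one bad tag and the whole page is rejected
    return [t.strip() for t in root.itertext() if t.strip()]


class TextCollector(HTMLParser):
    """Lenient consumer: keeps whatever text it sees, ignoring tag balance."""

    def __init__(self):
        super().__init__()
        self.chunks = []

    def handle_data(self, data):
        if data.strip():
            self.chunks.append(data.strip())


def lenient_parse(markup):
    collector = TextCollector()
    collector.feed(markup)
    collector.close()
    return collector.chunks


print(strict_parse(BROKEN))   # the strict parser gives up entirely
print(lenient_parse(BROKEN))  # the lenient parser still recovers the text
print(strict_parse(FIXED))    # with the tag restored, strict parsing works
```

The lenient path recovers the content but loses the structure (it no longer knows where the heading ends), which is the trade-off at stake: strictness buys clean structure at the cost of all-or-nothing failure.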

SmolDocling doesn’t evaluate how DocTags stacks up against HTML or Markdown when used as training targets under the same architecture. Without such comparative experiments, it’s hard to say how DocTags performs in terms of robustness, generalization, or usability.

One last concern: although the training dataset is large, it relies heavily on synthetic or rule-based sources like SynthCodeNet, SynthFormulaNet, and SynthDocNet — which may not fully reflect real-world distributions.

Take code recognition, for example: the training data is rendered using Pygments, but in practice, code snippets appear in a wide range of formats — from IDE screenshots to scanned documents and printed books. That raises questions about how well the model can generalize beyond its synthetic training set.

Know the Story, Not Just the Words: Upgrading RAG with Causal Graphs

Vivid Description

Traditional RAG is like looking up a word in the dictionary — you get the meaning, but nothing more.

CausalRAG, on the other hand, is like reading a novel — you follow the story, understand the context, and see how everything connects. You don’t just know what happens, but also why.

Overview

As shown in Figure 3(a), traditional RAG systems face three major issues:

Breaking the linguistic and logical connections: To enable retrieval, documents are split into chunks and stored as embeddings. But this process often cuts through the logic of the text, losing important context and cause-effect relationships.

Semantics ≠ relevance: Semantic search matches based on surface-level similarity. But in many cases, what really matters isn’t what sounds similar — it’s what’s causally connected.

Small mistakes, big problems: When the model leans too heavily on retrieval, even a slightly off-target result can snowball into hallucinations or completely irrelevant answers.
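The first of these issues is easy to reproduce with a toy example. The sketch below uses a naive fixed-size word chunker and a keyword lookup as a stand-in for retrieval; the sample text, the chunk size, and both helper functions are hypothetical and chosen only for illustration.

```python
def chunk_words(text, size):
    """Split text into consecutive chunks of `size` words (naive fixed-size chunking)."""
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]


def chunks_mentioning(chunks, term):
    """Stand-in for retrieval: return the chunks that contain the query term."""
    return [c for c in chunks if term.lower() in c.lower()]


# Hypothetical two-sentence document: a cause followed by its effect.
doc = ("The vendor raised prices in March. "
       "Consequently, customer churn doubled in April.")

chunks = chunk_words(doc, 6)
hits = chunks_mentioning(chunks, "churn")

for i, chunk in enumerate(chunks):
    print(i, repr(chunk))
print("retrieved for 'churn':", hits)
```

A query about churn retrieves only the second chunk. It says "Consequently", but the cause it refers to (the price increase) sits in a chunk that was never returned.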

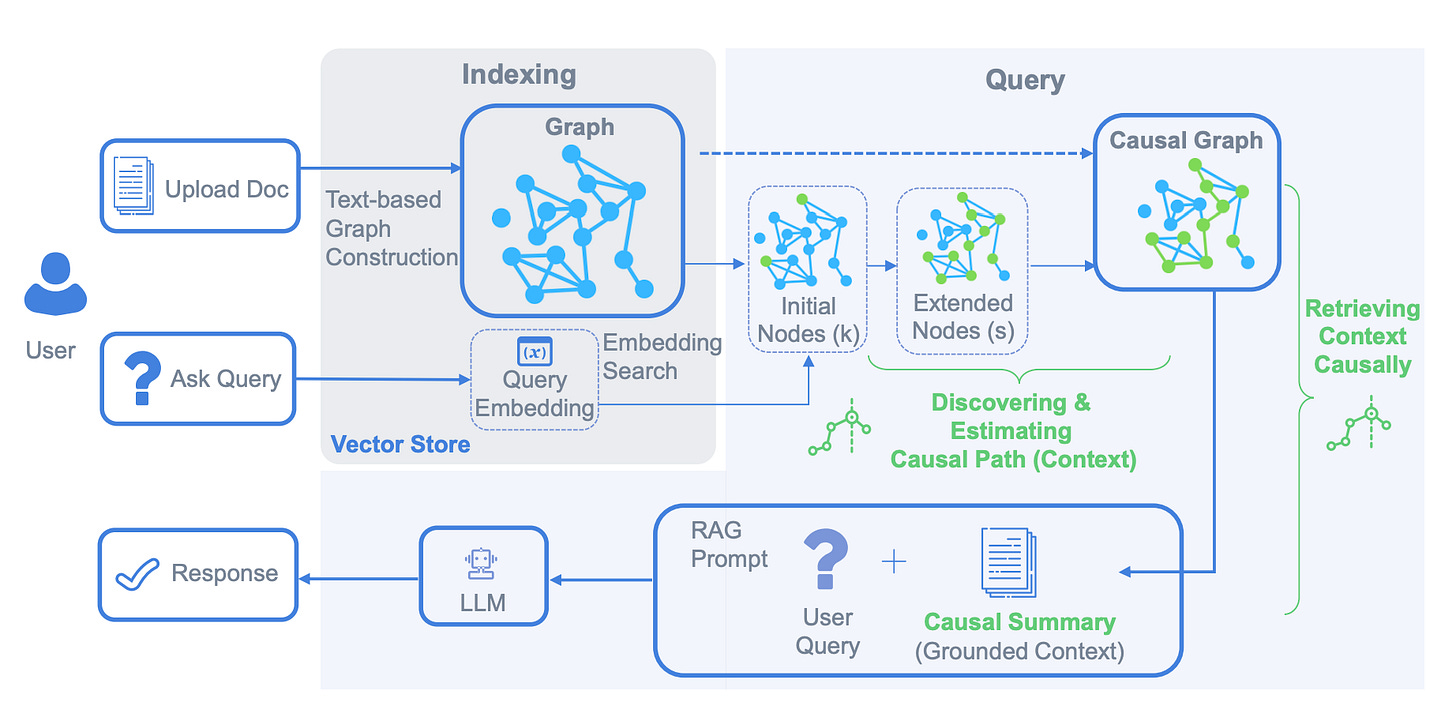

As shown in Figure 4, the process of CausalRAG unfolds in three key stages:

Document processing: The uploaded document is turned into a causal graph, preserving the “what happened and why” relationships.

Query handling: Starting from the user’s question, the system pinpoints relevant nodes in the graph and traces along causal paths to gather the most meaningful context.

Answer generation: The model then combines this causal chain with the original question to craft a clear, well-reasoned response that goes beyond surface-level answers.
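The three stages above can be sketched in a few lines of plain Python. This is a toy illustration under stated assumptions, not the CausalRAG implementation: the graph is hand-written rather than extracted by an LLM, seed nodes are found by keyword match rather than embeddings, and every name here (`CAUSAL_EDGES`, `find_seed_nodes`, `trace_causes`, `build_prompt`) is hypothetical.

```python
from collections import deque

# Stage 1 (assumed already done): a causal graph as (cause, effect) edges.
CAUSAL_EDGES = [
    ("price increase", "customer churn"),
    ("new competitor", "customer churn"),
    ("customer churn", "revenue drop"),
    ("revenue drop", "hiring freeze"),
]


def build_cause_index(edges):
    """Map each effect to its direct causes so 'why' can be traced backwards."""
    causes = {}
    for cause, effect in edges:
        causes.setdefault(effect, []).append(cause)
    return causes


def find_seed_nodes(query, edges):
    """Stage 2a: naive keyword match between the query and node names."""
    nodes = sorted({n for edge in edges for n in edge})
    return [n for n in nodes if n in query.lower()]


def trace_causes(causes, seeds, max_hops=2):
    """Stage 2b: breadth-first walk from the seeds toward their causes."""
    paths, seen = [], set(seeds)
    queue = deque((seed, 0) for seed in seeds)
    while queue:
        node, depth = queue.popleft()
        if depth == max_hops:
            continue
        for cause in causes.get(node, []):
            paths.append((cause, node))
            if cause not in seen:
                seen.add(cause)
                queue.append((cause, depth + 1))
    return paths


def build_prompt(query, paths):
    """Stage 3: hand the causal chain plus the question to the generator."""
    lines = [f"- {cause} -> {effect}" for cause, effect in paths]
    return "Causal context:\n" + "\n".join(lines) + f"\n\nQuestion: {query}"


query = "Why did we see a revenue drop last quarter?"
seeds = find_seed_nodes(query, CAUSAL_EDGES)
paths = trace_causes(build_cause_index(CAUSAL_EDGES), seeds)
print(build_prompt(query, paths))
```

Note that the downstream effect ("hiring freeze") never enters the context: the walk follows causes of the seed node only, which is the kind of focus the causal-path idea is meant to provide.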

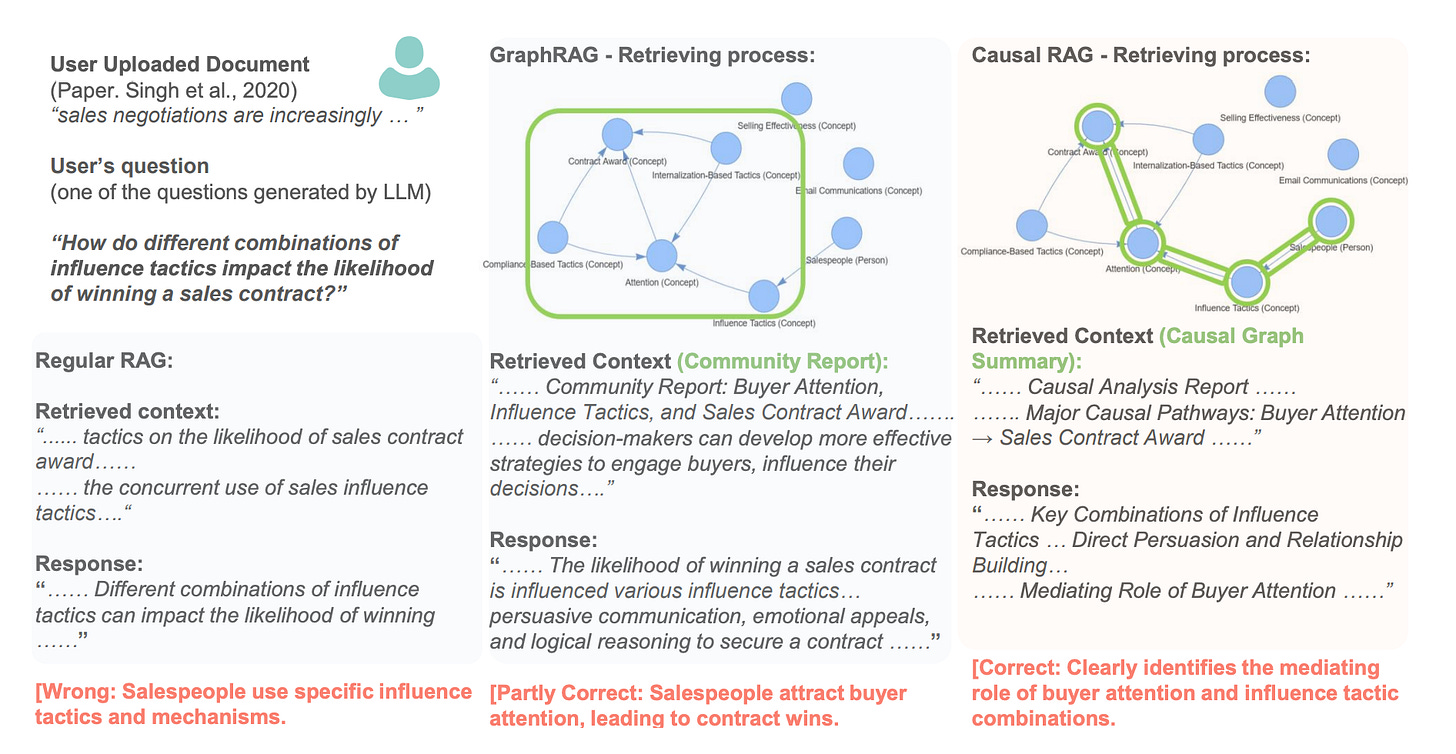

Figure 5 uses a question from the sales domain to highlight how Regular RAG, GraphRAG, and CausalRAG differ in both what they retrieve and how well they answer.

Regular RAG pulls in content that’s semantically similar, but it misses the core information — so the final answer ends up vague and not very helpful.

GraphRAG does a better job by retrieving relevant nodes like "attention," but its community-based expansion adds too many loosely related nodes, which waters down the signal and introduces bias, even though the facts are technically correct.

CausalRAG, on the other hand, starts with the right nodes and builds outward along causal links. The result? A focused, well-reasoned answer that actually addresses the heart of the question.

Thoughts and Insights

I see CausalRAG as a promising step forward in the shift from LLMs to true reasoning engines. It offers a concrete, structured approach to rethinking how we use retrieval: not just to find more, but to understand better.

That said, because it relies on LLMs like GPT to extract causal nodes and links from unstructured text, a few potential issues stand out in my view:

No clear ground truth for causality: How does the model know that A causes B—and not just that A happens before B, or that they often show up together? Right now, there's no strong validation mechanism to back up these judgments.

Inconsistency risks: LLMs can generate slightly different causal paths across runs, especially when dealing with long or ambiguous texts. That variability could pose challenges for reproducibility and model stability.

Struggles with implicit causality: Some of the most important causal links aren’t spelled out; they span paragraphs, or even documents. Current approaches mainly rely on explicit textual cues, and still fall short when deeper, cross-text reasoning is needed.
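For the inconsistency risk in particular, one simple way to put a number on it is to extract the causal graph several times and compare the resulting edge sets. The sketch below uses Jaccard overlap for that comparison; the metric choice, the function name, and the two example runs are my own assumptions, not something the CausalRAG work prescribes.

```python
def edge_jaccard(run_a, run_b):
    """Jaccard overlap between two sets of (cause, effect) edges: 1.0 means identical."""
    a, b = set(run_a), set(run_b)
    if not a and not b:
        return 1.0  # two empty extractions agree trivially
    return len(a & b) / len(a | b)


# Hypothetical outputs of two extraction runs over the same document.
run_1 = [("price increase", "churn"), ("churn", "revenue drop")]
run_2 = [("price increase", "churn"), ("competitor launch", "churn")]

score = edge_jaccard(run_1, run_2)
print(f"edge overlap between runs: {score:.2f}")
```

A low score across repeated runs would be a signal that the extracted graph, and therefore the retrieved context, is not stable enough to reproduce results.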