AI Innovations and Insights 12: LongCite and RAG Optimization

You can watch the video:

This article is the 12th in this promising series. Today, we will explore two exciting topics in AI, which are:

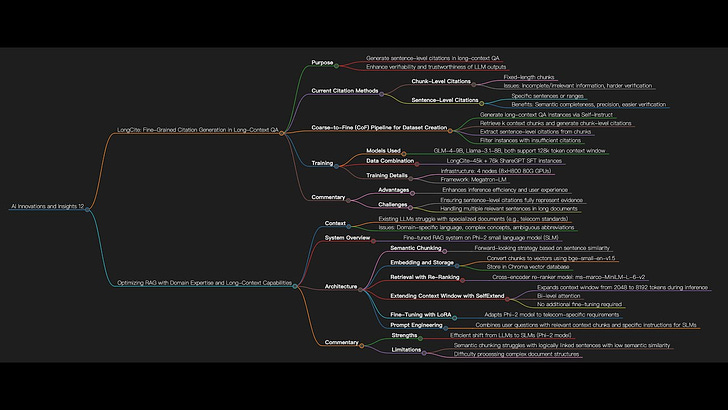

LongCite: Fine-Grained Citation Generation in Long-Context QA

Optimizing RAG with Domain Expertise and Long-Context Capabilities

LongCite: Fine-Grained Citation Generation in Long-Context QA

Open-source code: https://github.com/THUDM/LongCite

When LLMs answer questions based on long contexts, they should cite the source of each statement in the original text, ideally with fine-grained sentence-level citations. This is a common requirement in current RAG applications, and below we'll look at an implementation method.

Figure 1 compares chunk-level citations and sentence-level citations, highlighting their differences in granularity and user experience.

Chunk-level citations divide the context into fixed-length chunks, referencing entire chunks, but they often contain incomplete or irrelevant information, making verification harder for users.

Sentence-level citations directly point to specific sentences or sentence ranges, ensuring semantic completeness and precision, which makes it easier for users to verify and locate evidence.

The examples clearly demonstrate that sentence-level citations are more helpful, offer a better user experience, and improve verification efficiency compared to chunk-level citations. Thus, LongCite mainly focuses on sentence-level citations.

LongCite

Current LLMs fail to indicate the source of each statement in their responses within the original text. Furthermore, the model's output often contains hallucinations and deviates from the original text, severely undermining the model's credibility.

While RAG and post-hoc methods add citation capabilities, RAG loses accuracy in long-text QA, and post-hoc methods introduce delays with complex pipelines.

LongCite focuses on addressing the lack of fine-grained citations in long-context LLMs by generating sentence-level citations to enhance the verifiability and trustworthiness of their outputs. It introduces an automated evaluation benchmark (LongBench-Cite), a novel pipeline (CoF), and a high-quality long-context QA dataset (LongCite-45k). This approach reduces hallucinations, improves accuracy, and delivers fine-grained citations, offering a robust solution for long-text QA.

LongCite: Dataset Creation

Figure 2 illustrates the CoF (Coarse-to-Fine) pipeline for generating long-context QA instances with precise sentence-level citations. It consists of four steps:

Generating long-context QA instance via Self-Instruct;

Using the answer to retrieve k context chunks and generating chunk-level citations;

Extracting sentence-level citations for each statement from the cited chunks.

Instances with too few citations are filtered out.

This CoF process enables the generation of LongCite-45k, a large-scale dataset optimized for training LLMs in long-context QA with precise citations.

LongCite: Training

The training utilized GLM-4-9B and Llama-3.1-8B, two open-source base models supporting a 128k token context window, ideal for fine-tuning on long-context QA with citations (LQAC).

The dataset combined LongCite-45k with 76k general SFT instances from ShareGPT to ensure the models retained both citation generation and general QA capabilities.

Training was conducted on 4 nodes (8×H800 80G GPUs) using Megatron-LM for parallel optimization, with a batch size of 8, learning rate of 1e-5, and a total of 4000 steps (~18 hours).

Commentary

Overall, LongCite offers a fine-tuning-based solution, with its core innovation centered on data construction.

By enhancing inference efficiency and improving user experience, LongCite has the potential to become a deployable product, particularly in application scenarios such as enterprise document management, academic support tools, educational platforms, and knowledge services.

In my view, the fine-grained citation generation depends on extracting the most relevant sentences from the long context and matching them to the generated answer. However, in some cases, whether the generated sentence-level citations fully represent the evidence behind a specific answer might still be a challenge. For example, in long documents, there could be multiple relevant sentences or paragraphs, and the model might misquote or overlook them, affecting the precision of the generated answer.

Optimizing RAG with Domain Expertise and Long-Context Capabilities

Existing LLMs struggle with the intricacies of specialized documents like telecom standards due to the domain-specific language and complex concepts not present in typical training data. Abbreviations like "SAP" having multiple meanings in telecom can confuse models, while unique protocols further complicate understanding.

"Leveraging Fine-Tuned Retrieval-Augmented Generation with Long-Context Support: For 3GPP Standards" addresses this by adapting LLMs for telecom contexts.

I have detailed this method, and now I have some new insights.

Overview

A fine-tuned RAG system built on the Phi-2 small language model (SLM), specifically designed to handle the complexities of telecom standards, particularly 3GPP documents.

The RAG system is meticulously designed to ensure that each stage of processing—from chunking and embedding to retrieval, re-ranking, and generation—contributes to the overall goal of delivering accurate and contextually relevant responses.

Semantic Chunking Strategy

Semantic chunking is the first crucial step in the RAG pipeline. Traditional fixed-size chunking fails with technical telecom documents, as it can break sentences and lose meaning.

The system uses a forward-looking semantic chunking strategy that determines chunk boundaries based on sentence similarity. Using the bge-small-en-v1.5 embedding model, it converts sentences to vectors and creates chunk boundaries when similarity between consecutive sentences falls below a threshold (90th percentile). This creates semantically coherent chunks for better information retrieval.

Embedding and Storage

After chunking, chunks are converted to vectors using the bge-small-en-v1.5 embedding model and stored in a Chroma vector database optimized for high-dimensional embeddings. These embeddings enable semantic similarity search during retrieval, with Chroma DB providing fast and accurate access to relevant chunks.

Retrieval with Re-ranking

The system uses a cross-encoder re-ranker model (ms-marco-MiniLM-L-6-v2) that analyzes query-chunk interactions for more accurate similarity scoring, unlike bi-encoders that process chunks independently.

Extending the Context Window with SelfExtend

Small language models like Phi-2 have limited context windows of around 2048 tokens, making it difficult to process lengthy telecom documents. The system addresses this using SelfExtend, which expands the context window to 8192 tokens during inference. SelfExtend uses a bi-level attention system: grouped attention for distant token relationships and neighbor attention for adjacent tokens. This approach extends the context window without needing additional fine-tuning, enabling longer sequence processing.

Fine-Tuning with LoRA

In the final generation phase, the system creates responses using retrieved chunks. The Phi-2 model is fine-tuned with Low-Rank Adaptation (LoRA), enabling efficient training on small datasets. This approach minimizes computational needs while adapting to telecom-specific requirements.

Prompt Engineering

The prompt combines the user's question with relevant context chunks and specific instructions for SLMs.

Commentary

Overall, this system's architecture is relatively simple. In my opinion, the highlight is that the shift from LLMs to more efficient SLMs, as demonstrated by the Phi-2 model.

It's worth noting that the semantic chunking method used by this system has two key limitations: it struggles with sentences that have low semantic similarity despite strong logical connections, and it has difficulty processing complex structures. I've discussed this in my previous article.

Finally, if you’re interested in the series, feel free to check out my other articles.