Understanding Large Language Model-Based Agents

An in-depth look at what LLM-based agents are, including their developmental history, principles, code implementation, and other insights.

In the era of large language models (LLMs), the development of agent technology has accelerated. Large language model-based agents represent an exciting field of research and have made considerable progress in the past year.

This article introduces LLM-based agents and is divided into the following sections:

The origin of agent technology.

The development history of AI agent technology.

The architecture of LLM-based agents.

Applications of LLM-based agents.

Implementing an LLM-based agent with simple code.

Discussion about agents.

Agent

From a philosophical perspective, an agent is an entity with the capacity to act. It could be a person, an animal, or even an autonomous concept or entity.

The definition and nature of an agent can differ depending on the discipline or cultural context. Generally, an agent is an autonomous individual capable of exercising their will, making decisions, and taking action, rather than just responding passively to external stimuli.

Humans are arguably the most complex agents on this planet.

AI Agent

Research on agents in artificial intelligence has grown significantly since the mid-1980s. Against this background, Wooldridge et al. define artificial intelligence as a subfield of computer science that aims to design and build computer-based agents that exhibit intelligent behavior.

Essentially, an AI agent is not equivalent to a philosophical agent. Instead, it is the concretization of the concept of a philosophical agent within an AI environment.

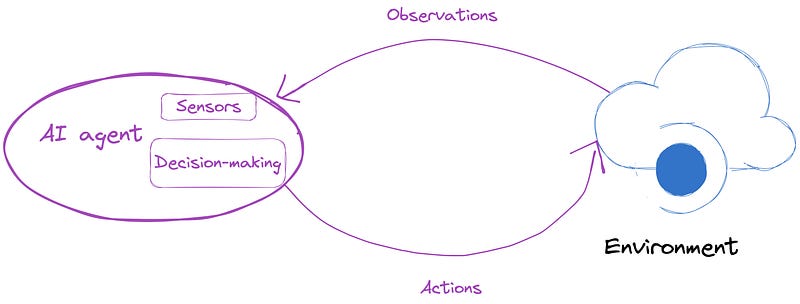

As depicted in Figure 1, an AI agent is an artificial entity that perceives its environment through sensors, makes decisions, and then responds accordingly.
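To make the perceive-decide-act cycle concrete, here is a minimal Python sketch. The thermostat scenario, the ThermostatAgent class, and its method names are hypothetical illustrations, not part of any framework.

```python
from dataclasses import dataclass, field


@dataclass
class ThermostatAgent:
    """Toy agent: senses the temperature, decides, then acts on the environment."""
    target: float = 21.0
    log: list = field(default_factory=list)

    def perceive(self, environment: dict) -> float:
        # "Sensor": read the current temperature from the environment.
        return environment["temperature"]

    def decide(self, temperature: float) -> str:
        # Decision: compare the percept with the goal (a 0.5-degree dead band).
        if temperature < self.target - 0.5:
            return "heat"
        if temperature > self.target + 0.5:
            return "cool"
        return "idle"

    def act(self, action: str, environment: dict) -> None:
        # "Actuator": change the environment according to the decision.
        delta = {"heat": 1.0, "cool": -1.0, "idle": 0.0}[action]
        environment["temperature"] += delta
        self.log.append(action)

    def step(self, environment: dict) -> str:
        action = self.decide(self.perceive(environment))
        self.act(action, environment)
        return action


env = {"temperature": 18.0}
agent = ThermostatAgent()
for _ in range(5):
    agent.step(env)
print(agent.log, env["temperature"])
```

Each call to step closes the loop once: the agent's action changes the environment, which changes what it perceives next.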

The Development Stages of AI Agents

The history of technological evolution in AI agent research mainly includes the following stages.

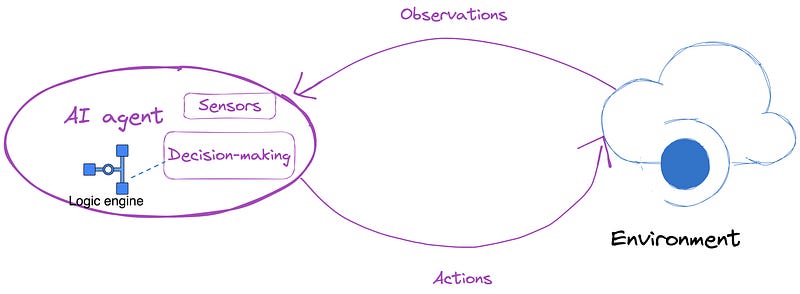

Symbolic Agents

In the early stages of artificial intelligence research, the dominant approach was symbolic artificial intelligence, which uses logical rules and symbolic representations to encode knowledge and support reasoning. The architecture of symbolic agents is shown in Figure 2.

A classic example of this approach is the knowledge-based expert system, which consists primarily of a knowledge base, a reasoning engine, and an interpreter.
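The knowledge base, reasoning engine, and interpreter can be sketched in a few lines of Python. This is a toy forward-chaining engine under assumed rules: the car-diagnosis facts and the rule names R1 to R3 are invented for illustration, and the "interpreter" is modeled here as an explanation step that reports which rules fired, which is one common reading of that component.

```python
# Knowledge base: (rule name, premises, conclusion). Hypothetical toy rules.
RULES = [
    ("R1", {"engine_wont_start", "lights_dim"}, "battery_weak"),
    ("R2", {"battery_weak", "battery_old"}, "replace_battery"),
    ("R3", {"engine_wont_start", "fuel_empty"}, "refuel"),
]


def forward_chain(facts, rules):
    """Reasoning engine: fire rules until no new fact can be derived."""
    known = set(facts)
    trace = []  # fired rules, kept for the explanation step
    changed = True
    while changed:
        changed = False
        for name, premises, conclusion in rules:
            if premises <= known and conclusion not in known:
                known.add(conclusion)
                trace.append((name, sorted(premises), conclusion))
                changed = True
    return known, trace


def explain(trace):
    """Interpreter: turn the fired rules into human-readable justifications."""
    return [f"{name}: {' and '.join(p)} => {c}" for name, p, c in trace]


facts, trace = forward_chain({"engine_wont_start", "lights_dim", "battery_old"}, RULES)
for line in explain(trace):
    print(line)
```

Every conclusion is derived by explicit symbolic rules, which is what makes such systems transparent, and also why they struggle once rules must cover uncertain, large-scale situations.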

However, symbolic agents may struggle to handle uncertainty and large-scale real-world problems. In addition, because symbolic reasoning is computationally complex, it is difficult to find an efficient algorithm that produces meaningful results within a limited time.

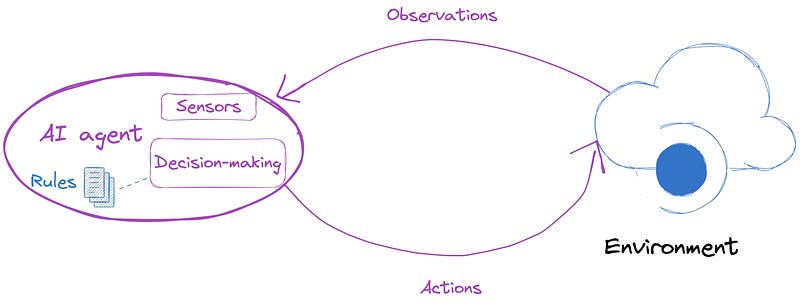

Reactive agents

Unlike symbolic agents, reactive agents do not employ complex symbolic reasoning. They primarily concentrate on the interaction between the agent and the environment, prioritizing rapid and real-time responses.

The design of such agents prioritizes direct input-output mapping over complex reasoning and symbolic operations. Because reactive agents typically rely on predefined rule sets to guide their behavior, as shown in Figure 3, they may lack sophisticated decision-making and planning capabilities.

A classic example of this approach is the robot vacuum cleaner. It uses sensors to detect dust and obstacles on the floor, then selects a cleaning route and steers around obstacles. Such agents make decisions based only on the current situation, without long-term planning.
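A reactive agent of this kind reduces to an ordered list of condition-action rules. The sketch below uses hypothetical percept keys (bumper_pressed, dust_detected) and maps each percept straight to an action, with no memory and no planning.

```python
# Ordered condition-action rules; the first matching rule wins.
RULES = [
    (lambda p: p["bumper_pressed"], "turn_right"),  # obstacle hit: dodge it
    (lambda p: p["dust_detected"], "vacuum"),       # dust below: clean it
    (lambda p: True, "move_forward"),               # default rule
]


def reactive_policy(percept: dict) -> str:
    """Map the current percept directly to an action."""
    for condition, action in RULES:
        if condition(percept):
            return action
    raise ValueError("no rule matched")  # unreachable while a default rule exists


percepts = [
    {"bumper_pressed": False, "dust_detected": True},
    {"bumper_pressed": True, "dust_detected": True},
    {"bumper_pressed": False, "dust_detected": False},
]
print([reactive_policy(p) for p in percepts])
```

Rule order encodes priority: avoiding an obstacle beats cleaning, which is why the second percept yields turn_right even though dust is present.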

Reinforcement learning-based agents

This line of research focuses on how agents can learn through interaction with the environment to maximize cumulative reward on specific tasks.

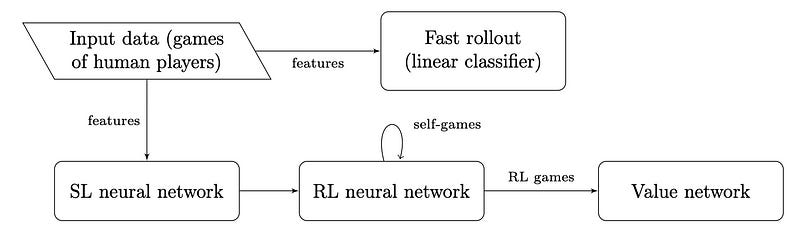

The emergence of deep learning has led to the integration of deep neural networks and reinforcement learning. This allows the agent to learn complex strategies from high-dimensional inputs, resulting in many significant achievements, such as AlphaGo, as shown in Figure 4.
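As a small, non-deep instance of learning from reward, the sketch below runs tabular Q-learning on a hypothetical five-cell corridor where only reaching the last cell pays a reward. Systems such as AlphaGo replace the table with deep neural networks and add much more machinery; this only illustrates the reward-driven update Q(s, a) ← Q(s, a) + α[r + γ max Q(s', a') − Q(s, a)]. The environment and hyperparameters are assumptions.

```python
import random

# Hypothetical 1-D corridor: states 0..4, reward 1.0 for reaching state 4.
N_STATES, GOAL = 5, 4
ACTIONS = (-1, +1)  # move left or right


def step(state, action):
    nxt = min(max(state + action, 0), N_STATES - 1)
    reward = 1.0 if nxt == GOAL else 0.0
    return nxt, reward, nxt == GOAL


def q_learning(episodes=200, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Epsilon-greedy exploration, breaking ties between equal values randomly.
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)
            else:
                best = max(q[(state, a)] for a in ACTIONS)
                action = rng.choice([a for a in ACTIONS if q[(state, a)] == best])
            nxt, reward, done = step(state, action)
            # Move Q toward reward + discounted best value of the next state.
            target = reward if done else reward + gamma * max(q[(nxt, a)] for a in ACTIONS)
            q[(state, action)] += alpha * (target - q[(state, action)])
            state = nxt
    return q


q = q_learning()
policy = [max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(GOAL)]
print(policy)
```

Values propagate backward from the goal, so the greedy policy learns to move right in every non-terminal cell. Even in this tiny world the agent needs many episodes, which hints at the sample-efficiency problem described next.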

However, reinforcement learning also faces challenges such as long training times, low sample efficiency, and training instability, particularly in complex real-world environments.

Agents with transfer learning and meta-learning

Transfer learning reduces the training burden for new tasks by transferring knowledge between tasks. Meta-learning, in turn, focuses on learning how to learn, so that agents can quickly infer the optimal strategy for a new task from a small number of samples.

However, if the source and target tasks differ significantly, transfer learning may fall short of expectations, leading to negative transfer. Furthermore, meta-learning requires extensive pre-training and a large number of samples, which makes it difficult to establish a universal learning strategy.

LLM-based Agents

In recent years, large language models (LLMs) have achieved significant success and shown potential for human-like intelligence, largely owing to massive training datasets and very large numbers of model parameters. As a result, a new field of research has emerged that uses LLMs as the core controllers of agents, aiming to achieve human-level decision-making abilities.

This is the main focus of the article and is explained in detail in the following sections.

Architecture of LLM-based Agents

The architecture of LLM-based agents varies in form. However, most share the same core modules: memory, planning, and action.

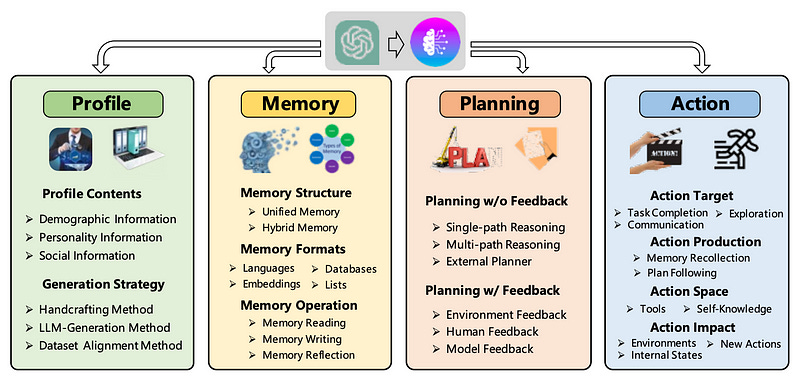

For instance, Wang et al. propose a unified framework depicted in Figure 5. This framework includes a profiling module, a memory module, a planning module, and an action module.

The profiling module defines the agent’s role. The memory and planning modules place the agent in a dynamic environment, allowing it to recall past actions and plan future ones. The action module converts the agent’s decisions into specific outputs. The profiling module influences the memory and planning modules, and these three modules together influence the action module.
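The way these modules feed into one another can be sketched as a small Python class. This is an illustrative structure only, not Wang et al.’s code: the Agent class, the three-entry memory window, and the fake_llm stand-in (used instead of a real model call) are assumptions.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Agent:
    profile: str                      # profiling module: the agent's role
    llm: Callable[[str], str]         # any text-in, text-out model
    memory: List[str] = field(default_factory=list)  # memory module

    def plan(self, task: str) -> str:
        # Planning module: the profile and recalled memory shape the prompt.
        recent = "\n".join(self.memory[-3:])
        prompt = f"You are {self.profile}.\nMemory:\n{recent}\nTask: {task}\nNext step:"
        return self.llm(prompt)

    def act(self, task: str) -> str:
        # Action module: emit the decision and record it in memory.
        decision = self.plan(task)
        self.memory.append(f"{task} -> {decision}")
        return decision


def fake_llm(prompt: str) -> str:
    # Hypothetical stand-in for an LLM: numbers steps by counting remembered ones.
    return f"step {prompt.count('->') + 1}"


agent = Agent(profile="a travel planner", llm=fake_llm)
print(agent.act("find flights"))
print(agent.act("book a hotel"))
```

Because the second call sees the first decision in memory, its output depends on history, which is the role the memory module plays in the framework.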

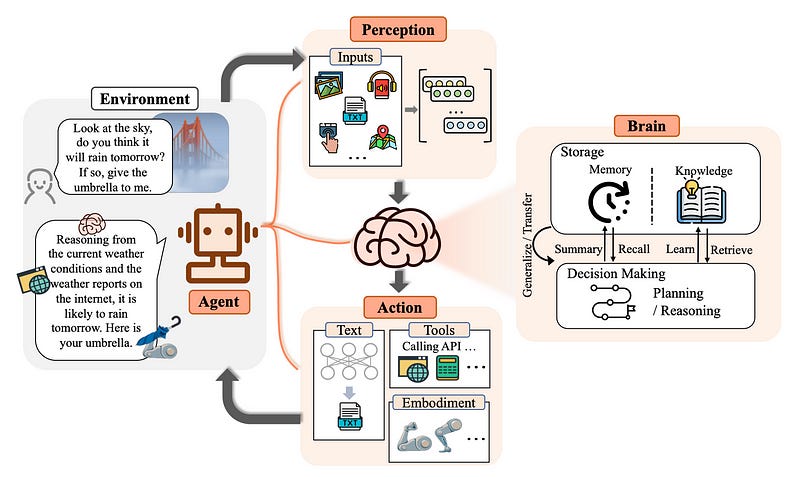

Furthermore, Xi et al. present a general conceptual framework of an LLM-based agent composed of three key parts: brain, perception, and action, as shown in Figure 6.

The brain module serves as a controller, handling basic tasks such as memory, thinking, and decision-making. The perception module interprets and processes multimodal information from the external environment, while the action module executes responses and interacts with the environment using tools.

The paper gives an example to illustrate the workflow: suppose someone asks if it’s going to rain. The perception module translates this query into a format that the LLM can understand. The brain module then makes inferences based on the current weather and online weather reports. Finally, the action module responds and hands an umbrella to the person. Through this process, the agent can continuously receive feedback and interact with the environment.
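The rain example maps naturally onto three functions. In this hypothetical sketch, the forecast dictionary stands in for online weather reports, simple keyword matching stands in for LLM reasoning, and the 0.5 rain-probability threshold is an arbitrary assumption.

```python
from typing import Dict


def perceive(raw: Dict[str, str]) -> str:
    """Perception: normalize speech or text input into a plain-text query."""
    return raw.get("speech", raw.get("text", "")).strip().lower()


def brain(query: str, forecast: Dict[str, float]) -> str:
    """Brain: decide using the query plus external knowledge (a forecast)."""
    if "rain" in query:
        return "offer_umbrella" if forecast["rain_probability"] >= 0.5 else "say_no_rain"
    return "ask_clarification"


def act(decision: str) -> str:
    """Action: turn the decision into a response to the environment."""
    responses = {
        "offer_umbrella": "It will probably rain. Here is an umbrella.",
        "say_no_rain": "Rain is unlikely today.",
        "ask_clarification": "Could you rephrase the question?",
    }
    return responses[decision]


forecast = {"rain_probability": 0.8}  # hypothetical weather-report data
reply = act(brain(perceive({"speech": "Is it going to rain today? "}), forecast))
print(reply)
```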

Applications of LLM-based Agents

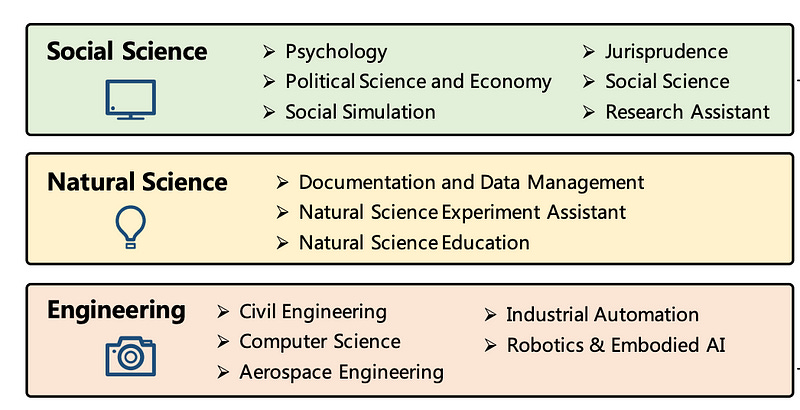

Based on application domain, LLM-based agents can be divided into three categories: social sciences, natural sciences, and engineering, as shown in Figure 7.

Based on application scenario, they can be divided into single-agent, multi-agent, and human-agent interaction scenarios, as shown in Figure 8.

A single agent possesses diverse capabilities and can demonstrate outstanding task-solving performance across a range of applications. When multiple agents interact, they can make progress through cooperation or competition. In human-agent interaction, human feedback can help agents perform tasks more efficiently and safely, while agents can in turn provide better service to humans.

Implementing an LLM-based Agent

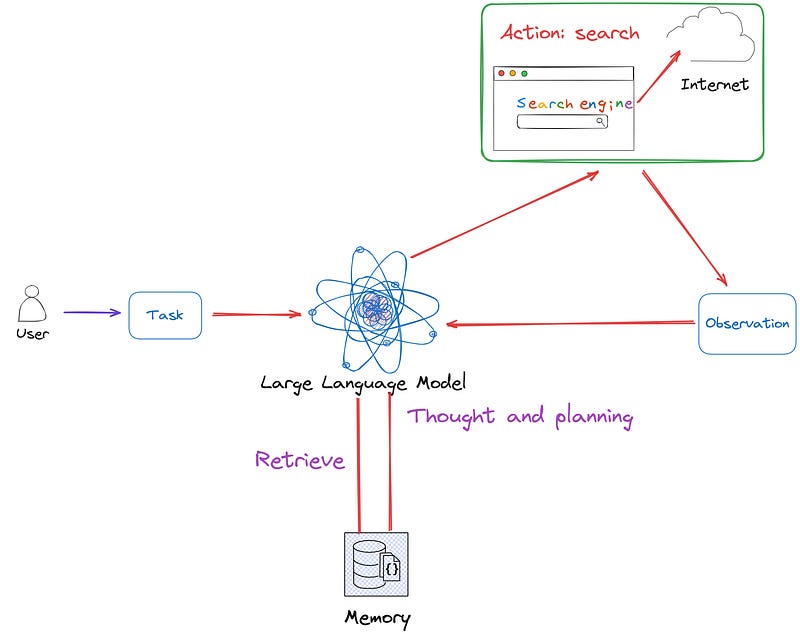

We use LangChain to implement an agent; its architecture is shown in Figure 9.

Environment Configuration

Create a Conda environment and install the required Python libraries.

```shell
(base) Florian:~ Florian$ conda create -n agent python=3.11
(base) Florian:~ Florian$ conda activate agent
(agent) Florian:~ Florian$ pip install langchain
(agent) Florian:~ Florian$ pip install langchain_openai
(agent) Florian:~ Florian$ pip install duckduckgo-search
```

After installation, the installed versions are as follows:

```shell
(agent) Florian:~ Florian$ pip list | grep langchain
langchain 0.1.15
langchain-community 0.0.32
langchain-core 0.1.41
langchain-openai 0.1.2
langchain-text-splitters 0.0.1
(agent) Florian:~ Florian$ pip list | grep duck
duckduckgo_search 5.3.0
```

Library Import

```python
import os

# Replace with your own OpenAI API key.
os.environ["OPENAI_API_KEY"] = "YOUR_OPENAI_API_KEY"

from langchain.agents import AgentExecutor, Tool, ZeroShotAgent
from langchain.chains import LLMChain
from langchain.memory import ConversationBufferMemory
from langchain_openai import OpenAI
from langchain_community.utilities import DuckDuckGoSearchAPIWrapper
```

Tools

Here we use the DuckDuckGo search engine as the tool; other tools can be used as well.
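Conceptually, each tool is a name, a callable, and a natural-language description that the LLM reads when deciding which tool to use. The stdlib-only sketch below mirrors that shape with a hypothetical SimpleTool class and two toy tools (a calculator and a word counter); it is not LangChain’s Tool class, although the rendered "name: description" lines resemble how the tool list appears in the agent prompt.

```python
import operator
from dataclasses import dataclass
from typing import Callable, Dict, List

OPS = {"+": operator.add, "-": operator.sub, "*": operator.mul, "/": operator.truediv}


@dataclass
class SimpleTool:
    """Hypothetical stand-in for a tool: a name, a function, and a description."""
    name: str
    func: Callable[[str], str]
    description: str


def calculator(expression: str) -> str:
    """Toy tool: evaluates 'a <op> b' without using eval()."""
    left, op, right = expression.split()
    return str(OPS[op](float(left), float(right)))


def word_count(text: str) -> str:
    """Toy tool: counts whitespace-separated words."""
    return str(len(text.split()))


def render_tools(tools: List[SimpleTool]) -> str:
    """Render the tool list the way an agent prompt might show it to the LLM."""
    return "\n".join(f"{t.name}: {t.description}" for t in tools)


tools = [
    SimpleTool("Calculator", calculator, "useful for arithmetic on two numbers, e.g. 6 * 7"),
    SimpleTool("WordCount", word_count, "useful for counting the words in a text"),
]
registry: Dict[str, SimpleTool] = {t.name: t for t in tools}

print(render_tools(tools))
print(registry["Calculator"].func("6 * 7"))  # the agent dispatches by tool name
```

Tools take and return strings because the LLM chooses the tool name and writes the input as text, and the result is fed back to it as text.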

```python
search = DuckDuckGoSearchAPIWrapper()

tools = [
    Tool(
        name="Search",
        func=search.run,
        description="useful for when you need to answer questions about current events",
    )
]
```

Planning and Action

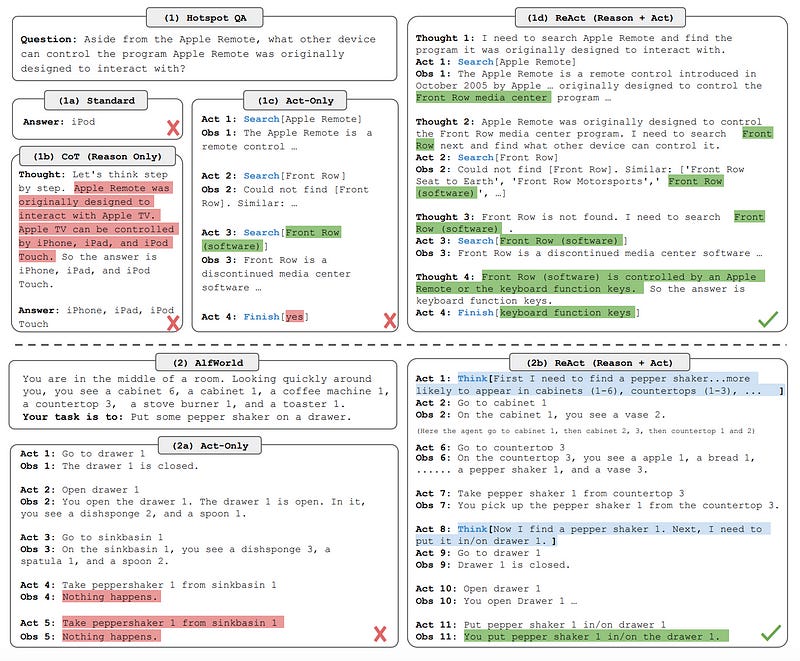

This is the core of the agent. Here we adopt ReAct, an approach in which the LLM generates reasoning traces and task-specific actions in an interleaved manner. Note that ReAct is not the reactive agent discussed earlier; it is a method for synergizing reasoning and acting in LLMs.

As shown in Figure 10, ReAct does not just perform actions; it also articulates the reasoning behind each decision. This shows not only that a task was completed but also why it was done in a particular way, and if any issues arise, it becomes easier to identify the cause.

By contrast, prompting methods that produce only actions or only final answers lack this transparency: we cannot see how the agent arrived at its answer or whether it ran into difficulties along the way.
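To show how the interleaving works mechanically, here is a from-scratch ReAct loop in plain Python. It is a simplified sketch, not LangChain’s implementation: the regular expression, the scripted_llm stand-in (which replays canned outputs instead of calling a model), the fake_search tool, and the max_steps limit are all assumptions for illustration.

```python
import re
from typing import Callable, Dict, Iterable

# Matches "Action: <tool>" followed by "Action Input: <input>" on the next line.
ACTION_RE = re.compile(r"Action:\s*(.+?)\s*\nAction Input:\s*(.+)")


def react_loop(question: str, llm: Callable[[str], str],
               tools: Dict[str, Callable[[str], str]], max_steps: int = 5) -> str:
    """Alternate Thought/Action/Observation until the LLM emits a Final Answer."""
    scratchpad = ""
    for _ in range(max_steps):
        output = llm(f"Question: {question}\nThought:{scratchpad}")
        if "Final Answer:" in output:
            return output.split("Final Answer:", 1)[1].strip()
        match = ACTION_RE.search(output)
        if match is None:
            raise ValueError(f"Could not parse LLM output: {output!r}")
        tool_name, tool_input = match.group(1), match.group(2).strip().strip('"')
        observation = tools[tool_name](tool_input)
        # Record the reasoning, the action, and its result for the next LLM call.
        scratchpad += f"{output}\nObservation: {observation}\nThought:"
    raise RuntimeError("Agent stopped: max_steps reached")


def scripted_llm(outputs: Iterable[str]) -> Callable[[str], str]:
    """Hypothetical LLM stand-in that returns canned outputs in order."""
    replies = iter(outputs)
    return lambda prompt: next(replies)


calls = []


def fake_search(query: str) -> str:
    calls.append(query)
    return "Ottawa is the capital city of Canada."


llm = scripted_llm([
    ' I should use the Search tool to find the answer.\nAction: Search\nAction Input: "Capital of Canada"',
    " I now know the final answer.\nFinal Answer: Ottawa",
])
answer = react_loop("what is their capital?", llm, {"Search": fake_search})
print(answer)
```

The scratchpad is what makes the process transparent: every thought, action, and observation stays visible in the prompt, and it is exactly what the agent_scratchpad variable carries in the LangChain prompt.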

Below is the planning code of our agent.

```python
prefix = """Have a conversation with a human, answering the following questions as best you can. You have access to the following tools:"""
suffix = """Begin!"

{chat_history}
Question: {input}
{agent_scratchpad}"""

prompt = ZeroShotAgent.create_prompt(
    tools,
    prefix=prefix,
    suffix=suffix,
    input_variables=["input", "chat_history", "agent_scratchpad"],
)
```

The constructed prompt is as follows.

```text
Have a conversation with a human, answering the following questions as best you can. You have access to the following tools:

Search: useful for when you need to answer questions about current events

Use the following format:

Question: the input question you must answer
Thought: you should always think about what to do
Action: the action to take, should be one of [Search]
Action Input: the input to the action
Observation: the result of the action
... (this Thought/Action/Action Input/Observation can repeat N times)
Thought: I now know the final answer
Final Answer: the final answer to the original input question

Begin!"

{chat_history}
Question: {input}
{agent_scratchpad}
```

The prompt first lists the Search tool we defined, and it ends with three variables:

chat_history contains the content stored in memory.

input is the question entered by the user.

agent_scratchpad represents the prior thought process, including thoughts, actions, action inputs, and observations. This variable is updated throughout the agent's execution.
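How the three variables get filled can be sketched with plain string formatting. The build_scratchpad helper below is hypothetical; it only approximates the idea that earlier LLM outputs and observations are concatenated and end with a fresh "Thought:" cue for the next step. The SUFFIX string follows the shape of the suffix above, without the stray quote after "Begin!".

```python
from typing import List, Tuple

SUFFIX = "Begin!\n\n{chat_history}\nQuestion: {input}\n{agent_scratchpad}"


def build_scratchpad(steps: List[Tuple[str, str]]) -> str:
    """Hypothetical helper: join (llm_output, observation) pairs into one string,
    ending with a fresh 'Thought:' cue so the LLM continues reasoning."""
    pad = ""
    for llm_output, observation in steps:
        pad += f"{llm_output}\nObservation: {observation}\nThought:"
    return pad


history = "Human: How many people live in canada?\nAI: About 40 million."
steps = [(
    " I should use the Search tool to find the answer.\n"
    'Action: Search\nAction Input: "Capital of Canada"',
    "Ottawa is the capital city of Canada.",
)]
filled = SUFFIX.format(
    chat_history=history,
    input="what is their capital?",
    agent_scratchpad=build_scratchpad(steps),
)
print(filled)
```

On the first iteration the scratchpad is empty; it grows by one Thought/Action/Observation block per tool call.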

Memory
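ConversationBufferMemory keeps the full conversation and exposes it as a single string under memory_key, which is why memory_key must match the {chat_history} variable in the prompt. The stdlib sketch below imitates that behavior with a hypothetical BufferMemory class; its method names echo LangChain’s, but the signatures are simplified and it is not the real class.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass
class BufferMemory:
    """Hypothetical buffer memory: stores every turn and renders them as text."""
    memory_key: str = "chat_history"
    human_prefix: str = "Human"
    ai_prefix: str = "AI"
    turns: List[Tuple[str, str]] = field(default_factory=list)

    def save_context(self, user_input: str, output: str) -> None:
        # Called after each exchange to append it to the buffer.
        self.turns.append((user_input, output))

    def load_memory_variables(self) -> Dict[str, str]:
        # Called before each LLM call to fill the prompt's memory variable.
        lines = []
        for user_input, output in self.turns:
            lines.append(f"{self.human_prefix}: {user_input}")
            lines.append(f"{self.ai_prefix}: {output}")
        return {self.memory_key: "\n".join(lines)}


memory = BufferMemory()
memory.save_context("How many people live in canada?", "About 40 million.")
memory.save_context("what is their capital?", "Ottawa.")
print(memory.load_memory_variables()["chat_history"])
```

Because every turn is kept verbatim, follow-up questions such as "what is their capital?" can be resolved, at the cost of a prompt that grows with each exchange.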

```python
# memory_key must match the {chat_history} variable in the prompt.
memory = ConversationBufferMemory(memory_key="chat_history")
```

Construct an Agent

Create an agent using the LangChain API.

```python
llm_chain = LLMChain(llm=OpenAI(temperature=0), prompt=prompt)
agent = ZeroShotAgent(llm_chain=llm_chain, tools=tools, verbose=True)
agent_executor = AgentExecutor.from_agent_and_tools(
    agent=agent, tools=tools, verbose=True, memory=memory
)
```

Test

```python
agent_executor.run(input="How many people live in canada?")

# To test the memory of this agent, we can ask a followup question that
# relies on information in the previous exchange to be answered correctly.
agent_executor.run(input="what is their national anthem called?")
agent_executor.run(input="what is their capital?")
```

Overall Code

```python
import os

# Replace with your own OpenAI API key.
os.environ["OPENAI_API_KEY"] = "YOUR_OPENAI_API_KEY"

from langchain.agents import AgentExecutor, Tool, ZeroShotAgent
from langchain.chains import LLMChain
from langchain.memory import ConversationBufferMemory
from langchain_openai import OpenAI
from langchain_community.utilities import DuckDuckGoSearchAPIWrapper

search = DuckDuckGoSearchAPIWrapper()

tools = [
    Tool(
        name="Search",
        func=search.run,
        description="useful for when you need to answer questions about current events",
    )
]

prefix = """Have a conversation with a human, answering the following questions as best you can. You have access to the following tools:"""
suffix = """Begin!"

{chat_history}
Question: {input}
{agent_scratchpad}"""

prompt = ZeroShotAgent.create_prompt(
    tools,
    prefix=prefix,
    suffix=suffix,
    input_variables=["input", "chat_history", "agent_scratchpad"],
)

memory = ConversationBufferMemory(memory_key="chat_history")

llm_chain = LLMChain(llm=OpenAI(temperature=0), prompt=prompt)
agent = ZeroShotAgent(llm_chain=llm_chain, tools=tools, verbose=True)
agent_executor = AgentExecutor.from_agent_and_tools(
    agent=agent, tools=tools, verbose=True, memory=memory
)

agent_executor.run(input="How many people live in canada?")

# To test the memory of this agent, we can ask a followup question that
# relies on information in the previous exchange to be answered correctly.
agent_executor.run(input="what is their national anthem called?")
agent_executor.run(input="what is their capital?")
```

The output is shown below; it reveals the agent's thought, planning, and action at each step.

```text
> Entering new AgentExecutor chain...
Thought: I should use the Search tool to find the most recent population data for Canada.
Action: Search
Action Input: "Population of Canada"
Observation: Canada population density map (2014) Top left: The Quebec City-Windsor Corridor is the most densely inhabited and heavily industrialized region accounting for nearly 50 percent of the total population Canada ranks 37th by population among countries of the world, comprising about 0.5% of the world's total, with 40 million Canadians. Despite being the second-largest country by total area ... As of July 1, 2023, NPRs were estimated to represent 5.5% of the population of Canada. Among provinces, this proportion was highest in British Columbia (7.3%) and Ontario (6.3%) and lowest in Newfoundland and Labrador (2.4%) and Saskatchewan (2.5%). The 2.2 million NPRs now outnumber the 1.8 million Indigenous people enumerated during the 2021 ... Historical population of Canada. Statistics Canada conducts a country-wide census that collects demographic data every five years on the first and sixth year of each decade. The 2021 Canadian census enumerated a total population of 36,991,981, an increase of around 5.2 percent over the 2016 figure. It is estimated that Canada's population surpassed 40 million in 2023 and 41 million in 2024. Canada's population reaches 40 million. On June 16, 2023, Statistics Canada announced that Canada's population passed the 40 million mark according to the Canada's population clock (real-time model). Today's release of total demographic estimates and related data tables for a reference date of July 1, 2023, is the first since reaching that ... Canada's population was estimated at 40,528,396 on October 1, 2023, an increase of 430,635 people (+1.1%) from July 1. This was the highest population growth rate in any quarter since the second quarter of 1957 (+1.2%), when Canada's population grew by 198,000 people. At the time, Canada's population was 16.7 million people, and this rapid population growth resulted from the high number of ...
Thought: Based on the data, I can see that the population of Canada is estimated to be around 40 million as of October 1, 2023.
Final Answer: The estimated population of Canada as of October 1, 2023 is 40 million.

> Finished chain.

> Entering new AgentExecutor chain...
Thought: I should use the search tool to find the answer.
Action: Search
Action Input: "Canada national anthem"
Observation: O Canada, national anthem of Canada.It was proclaimed the official national anthem on July 1, 1980. "God Save the Queen" remains the royal anthem of Canada. The music, written by Calixa Lavallée (1842-91), a concert pianist and native of Verchères, Quebec, was commissioned in 1880 on the occasion of a visit to Quebec by John Douglas Sutherland Campbell, marquess of Lorne (later 9th ... Learn about the history and lyrics of Canada's national anthem 'O Canada', which has both French and English versions. The song was composed by Calixa Lavallée in 1880 and was proclaimed the official anthem in 1980. It replaced 'God Save the Queen', which is Canada's royal anthem. O Canada (French: Ô Canada) is the national anthem of Canada. The song was originally commissioned by Lieutenant Governor of Quebec Théodore Robitaille for t... National Anthem of Canada - O Canada (English only) - featuring new lyricsOther versions:Bilingual: https://www.youtube.com/watch?v=wBCuyeoSURoFrench only: h... Enjoy this virtual choir rendition of 'O Canada' arranged by George Alfred Grant-Shaefer . Make sure to subscribe for more virtual choir videos!After 100 yea...
Thought: I now know the final answer.
Final Answer: The national anthem of Canada is "O Canada".

> Finished chain.

> Entering new AgentExecutor chain...
Thought: I should use the Search tool to find the answer.
Action: Search
Action Input: "Capital of Canada"
Observation: Ottawa is the capital city of Canada.It is located in the southern portion of the province of Ontario, at the confluence of the Ottawa River and the Rideau River.Ottawa borders Gatineau, Quebec, and forms the core of the Ottawa-Gatineau census metropolitan area (CMA) and the National Capital Region (NCR). As of 2021, Ottawa had a city population of 1,017,449 and a metropolitan population of ... Ottawa, city, capital of Canada, located in southeastern Ontario.In the eastern extreme of the province, Ottawa is situated on the south bank of the Ottawa River across from Gatineau, Quebec, at the confluence of the Ottawa (Outaouais), Gatineau, and Rideau rivers.The Ottawa River (some 790 miles [1,270 km] long), the principal tributary of the St. Lawrence River, was a key factor in the city ... Skyline of Toronto. The national capital is Ottawa, Canada's fourth largest city. It lies some 250 miles (400 km) northeast of Toronto and 125 miles (200 km) west of Montreal, respectively Canada's first and second cities in terms of population and economic, cultural, and educational importance. The third largest city is Vancouver, a centre ... Learn about Canada's location, climate, terrain, natural resources, and major lakes and rivers. Find out the population distribution, ethnic groups, languages, and religions of Canada. The national capital, Ottawa, is prominently marked in the province of Ontario. Where is Canada? Canada is the largest country in North America. Canada is bordered by non-contiguous US state of Alaska in the northwest and by 12 other US states in the south. The border of Canada with the US is the longest bi-national land border in the world.
Thought: I now know the final answer.
Final Answer: The capital of Canada is Ottawa.

> Finished chain.
```

Discussion

Agent and RAG

Here’s an example that illustrates the difference between retrieval-augmented generation (RAG) and an agent, using the input “I want to travel to Europe”.

RAG can fetch current, reliable information about European travel and then generate a relevant travel itinerary from it. An agent, by contrast, interacts with the LLM and uses a variety of tools to complete tasks such as writing travel guides, booking tickets, and planning itineraries.

From this example, we can see:

Both LLMs and RAG are primarily content-oriented. RAG enhances an LLM’s abilities by accessing external knowledge sources, so it operates at the knowledge level.

An agent is focused on completing tasks. It plans, breaks down, and iterates through tasks, not just generating content but also using tools to achieve its goals. This allows the agent to provide an end-to-end user experience.
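The content-oriented nature of RAG shows up clearly in a toy retrieve-then-generate pipeline: it selects relevant passages and packs them into a single prompt, with no tool use and no iteration. The documents, the word-overlap scoring, and the function names below are hypothetical stand-ins for a real document store and embedding-based retrieval.

```python
import re
from typing import List, Set

DOCS = [  # hypothetical knowledge base
    "Rail passes can cover train travel across many European countries.",
    "Travel insurance is often recommended for trips to Europe.",
    "Sourdough bread needs a long fermentation time.",
]


def tokenize(text: str) -> Set[str]:
    return set(re.findall(r"[a-z]+", text.lower()))


def retrieve(query: str, docs: List[str], k: int = 2) -> List[str]:
    """Rank documents by word overlap with the query and keep the top k."""
    q = tokenize(query)
    return sorted(docs, key=lambda d: len(q & tokenize(d)), reverse=True)[:k]


def build_rag_prompt(query: str, docs: List[str]) -> str:
    """Augment the question with retrieved context; an LLM would answer this once."""
    context = "\n".join(f"- {d}" for d in retrieve(query, docs))
    return f"Answer using the context below.\nContext:\n{context}\nQuestion: {query}"


query = "I want to travel to Europe"
prompt = build_rag_prompt(query, DOCS)
print(prompt)
```

An agent would instead loop: decide that it needs, say, a booking tool, call it, observe the result, and continue until the task is done, as in the ReAct run above.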

If you’re interested in RAG technologies, feel free to check out my RAG articles.


Cooperation and communication among multiple agents

As the number of agents increases, cooperation and communication among them become more difficult, which makes this a promising research direction.

An agent that can operate stably and perform tasks in a society of hundreds or even thousands of agents is more likely to play a role in the real world of the future.

Regarding the Commercial Implementation of the Agents

Because LLM-based agents are more complex than the LLMs themselves, small and medium-sized enterprises or individuals may find it challenging to build them locally. Cloud vendors might therefore consider offering intelligent agents as a service, also known as Agent-as-a-Service (AaaS).

Furthermore, before LLM-based agents can be deployed in the real world, they require rigorous security assessment to avoid causing harm.

Limitations of LLM-based Agents

LLM-based agents are not perfect and have the following deficiencies:

LLM-based agents depend heavily on the generation ability of the underlying LLM, so an agent’s quality depends on how capable and well-optimized that LLM is. During development, we found that small open-source LLMs (3B, 7B parameters) struggle to understand prompts effectively. Therefore, a higher-performing LLM such as GPT-4 is recommended for building a competent agent.

The LLM’s context window is limited, so long conversation histories, tool outputs, and reasoning traces must be truncated or summarized, which restricts the agent’s ability to solve more complex problems.

At present, having the agent independently explore and complete the entire solution process is slow and costly, and it can easily overcomplicate the problem. Significant optimization is needed in this area.
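One common way to cope with a limited context window is to keep only the most recent messages that fit a budget. The sketch below is hypothetical: trim_history is an illustrative helper, and it approximates tokens by whitespace-separated words, which real tokenizers do not do.

```python
from typing import List


def trim_history(messages: List[str], max_tokens: int) -> List[str]:
    """Keep the most recent messages whose combined size fits the budget.
    Size is approximated by word count (an assumption, not a real tokenizer)."""
    kept, used = [], 0
    for message in reversed(messages):
        cost = len(message.split())
        if used + cost > max_tokens:
            break
        kept.append(message)
        used += cost
    return list(reversed(kept))


history = [
    "Human: How many people live in canada?",
    "AI: About 40 million.",
    "Human: what is their capital?",
    "AI: Ottawa.",
]
trimmed = trim_history(history, max_tokens=10)
print(trimmed)
```

Here the first question is dropped, so "their" in the remaining follow-up loses its referent: exactly the kind of failure a limited context window can cause on longer tasks.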

Conclusion

This article introduces the concepts of agents, AI agents, and LLM-based agents, and discusses the implementation of an agent. It also shares some personal insights on the topic of agents.

In general, the advent of LLMs and agent technology has ushered in a new era. Although LLM-based agents are not yet perfect, they represent a very promising direction.

Finally, if there are any errors or omissions in this article, or if you have any questions, please point them out in the comment section.