Smarter, Not Harder: Why CAG Outshines RAG in Specific Scenarios — AI Innovations and Insights 39

Welcome to the 39th installment of this elegant series.

Vivid Description

Traditional RAG is like an ordinary chef who starts prepping only after seeing the order. They run back and forth to the fridge and pantry (the knowledge base), grabbing ingredients (information) on the fly — chopping as they go. It’s slow, and sometimes they grab the salt instead of the sugar (retrieval errors).

Cache-Augmented Generation (CAG), on the other hand, is like a high-efficiency chef. Before the kitchen even opens, they’ve already washed, chopped, and neatly organized all the ingredients they’ll need for today’s menu (preloading + KV caching). So when an order comes in (a question), they just grab what’s right in front of them and cook: fast, accurate, and smooth. The catch? The menu has to stay within a fixed range, because the counter can only hold so much (context length limits). If you need something that wasn’t prepared ahead of time, it simply isn’t there.

Overview

Open-source code: https://github.com/hhhuang/CAG

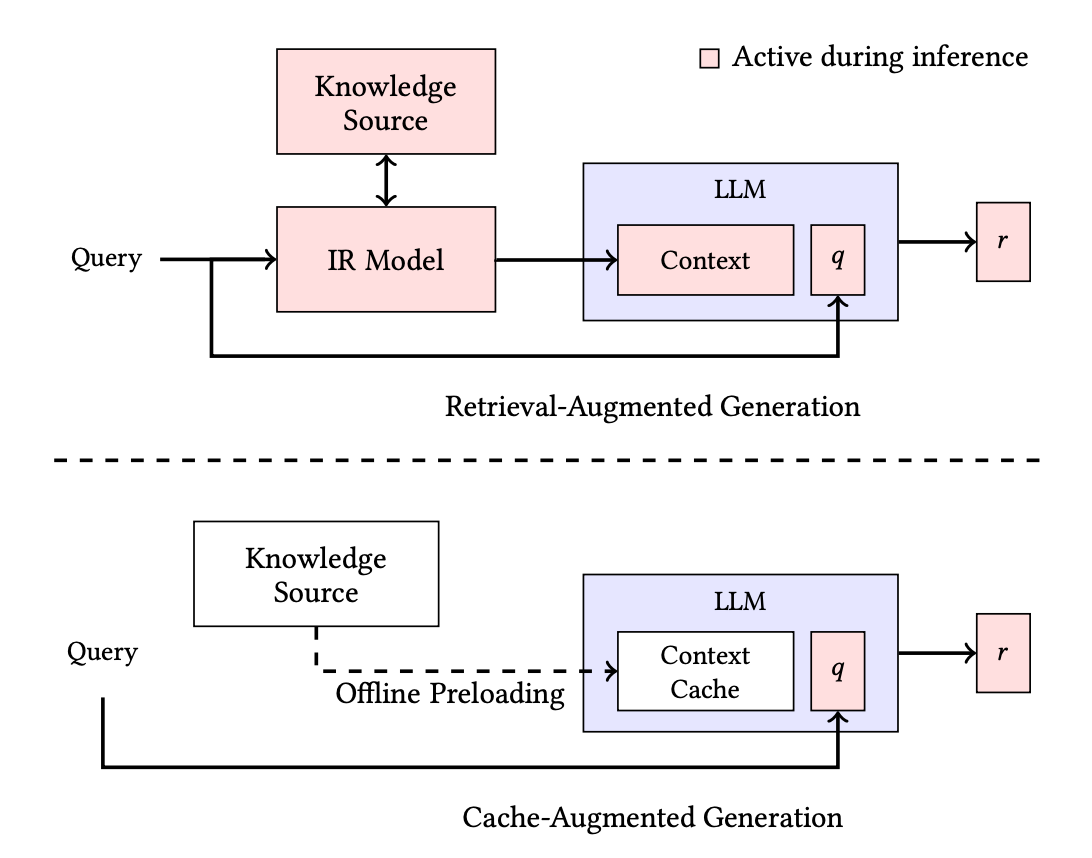

As shown in Figure 1, the top section illustrates how RAG works: when a query comes in, the system first runs a retrieval step to pull relevant content from the knowledge base. The retrieved passages are then passed to the LLM together with the query. Because retrieval sits on the critical path of every request, it adds latency before generation can even start.
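To make that per-query cost concrete, here is a minimal sketch of the RAG request path in Python. The bag-of-words cosine retriever and the helper names (`tokenize`, `retrieve`, `build_rag_prompt`) are hypothetical stand-ins rather than code from the CAG repository, and the actual LLM call is left out. Real systems usually rely on dense embeddings and a vector index, but the shape is the same: a search runs on every request before the model sees anything.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    # Lowercased word tokens; real systems use a model tokenizer or embeddings.
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two bag-of-words count vectors.
    dot = sum(count * b[token] for token, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: list[str], k: int = 1) -> list[str]:
    # Runs on every request: score each document against the query, keep the top k.
    q = Counter(tokenize(query))
    ranked = sorted(docs, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True)
    return ranked[:k]


def build_rag_prompt(query: str, docs: list[str], k: int = 1) -> str:
    # Retrieved passages are placed in front of the question; the LLM call is omitted.
    context = "\n".join(retrieve(query, docs, k))
    return f"Context:\n{context}\n\nQuestion: {query}"


docs = [
    "Sugar is added to the cake batter.",
    "Salt is sprinkled on the fries.",
]
print(build_rag_prompt("What goes on the fries?", docs))
```

Everything inside `retrieve` happens while the user waits, and if the scoring picks the wrong passage (the salt instead of the sugar), the model answers from the wrong context.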

CAG, shown in the bottom section, takes a different approach: it preloads the needed knowledge and precomputes its KV cache offline. During inference, the LLM only needs to process the query on top of that cache, with no lookup step. That means faster responses and a simpler overall pipeline.
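The core mechanism can be illustrated with a toy, single-layer causal attention model in pure Python. Everything here (the 4-dimensional embeddings, the random weights, `KVCache`, `forward`, `cag_answer`) is hypothetical and far simpler than a real LLM; it is not the CAG repository's code. It only shows why preloading works: under causal attention, the document's keys and values do not depend on anything that comes after them, so they can be computed once offline and reused for every query. Truncating the cache after each answer is similar in spirit to the cache reset the CAG paper describes.

```python
import math
import random

DIM = 4  # toy embedding width (hypothetical)


def rand_matrix(seed: int) -> list[list[float]]:
    r = random.Random(seed)
    return [[r.uniform(-1, 1) for _ in range(DIM)] for _ in range(DIM)]


W_Q, W_K, W_V = rand_matrix(1), rand_matrix(2), rand_matrix(3)


def matvec(w: list[list[float]], x: list[float]) -> list[float]:
    return [sum(wi * xi for wi, xi in zip(row, x)) for row in w]


def embed(token: int) -> list[float]:
    # Deterministic toy embedding for an integer token id.
    r = random.Random(token)
    return [r.uniform(-1, 1) for _ in range(DIM)]


class KVCache:
    """Keys and values for every token seen so far, in order."""

    def __init__(self) -> None:
        self.keys: list[list[float]] = []
        self.values: list[list[float]] = []
        self.encoded = 0  # running count of tokens pushed through the layer

    def __len__(self) -> int:
        return len(self.keys)

    def truncate(self, n: int) -> None:
        # Reset: drop every entry after the first n tokens.
        del self.keys[n:]
        del self.values[n:]


def attend(q: list[float], keys: list[list[float]], values: list[list[float]]) -> list[float]:
    # Scaled dot-product attention of one query over all cached positions.
    scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(DIM) for k in keys]
    top = max(scores)
    weights = [math.exp(s - top) for s in scores]
    total = sum(weights)
    return [sum(w * v[i] for w, v in zip(weights, values)) / total for i in range(DIM)]


def forward(tokens: list[int], cache: KVCache) -> list[list[float]]:
    # Causal pass: each token's key/value is appended, then the token attends
    # to itself and everything before it.
    outputs = []
    for t in tokens:
        x = embed(t)
        cache.keys.append(matvec(W_K, x))
        cache.values.append(matvec(W_V, x))
        cache.encoded += 1
        outputs.append(attend(matvec(W_Q, x), cache.keys, cache.values))
    return outputs


def cag_answer(query: list[int], doc_cache: KVCache) -> list[list[float]]:
    # Online: process only the query on top of the preloaded cache, then
    # truncate back to the document so the cache can serve the next query.
    doc_len = len(doc_cache)
    outputs = forward(query, doc_cache)
    doc_cache.truncate(doc_len)
    return outputs


document = list(range(100, 150))  # 50 "knowledge" tokens
doc_cache = KVCache()
forward(document, doc_cache)  # offline preload, done once

before = doc_cache.encoded
answer = cag_answer([7, 8, 9], doc_cache)
print(doc_cache.encoded - before)  # 3: only the query tokens were encoded
```

Because the cached and uncached paths perform the same arithmetic, their outputs for the query tokens match; the cached path simply encodes 3 tokens per query instead of all 53. A real LLM keeps one such cache per layer, and deeper layers' keys and values do depend on earlier tokens, but causality still guarantees that nothing placed after the document changes them.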

My Perspective

Overall, CAG shows a sharp sense of engineering practicality. Instead of chasing flashy architectural innovations in the RAG space, it zeroes in on the real, everyday pain points of RAG: retrieval latency, retrieval errors, and system complexity.

At a time when LLM context windows are expanding rapidly, CAG takes a clever, almost counterintuitive approach: rather than trying to improve retrieval, why not skip it altogether when the knowledge base is small enough to fit in the context window?

However, I have a few concerns about CAG.

Dynamic Knowledge Updates

One major question concerns dynamic knowledge updates. CAG does mention that the cache can be reset, but its static preloading approach could become a real weakness when the knowledge base changes frequently. Supporting efficient incremental or hot updates, without rebuilding the entire cache every time, is a key challenge that still needs to be addressed.
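One way to reason about incremental updates, assuming a standard causal decoder: a token's cached keys and values depend only on the tokens before it, so any prefix shared by the old and new knowledge base can be kept, and only the suffix after the first change has to be re-encoded. The helper below (`update_plan` is hypothetical, not part of CAG) computes that split over token ids.

```python
def common_prefix_len(old: list[int], new: list[int]) -> int:
    # Number of leading tokens the two sequences share.
    n = 0
    for a, b in zip(old, new):
        if a != b:
            break
        n += 1
    return n


def update_plan(old: list[int], new: list[int]) -> tuple[int, int]:
    """Return (cached tokens that can be kept, tokens that must be re-encoded)."""
    keep = common_prefix_len(old, new)
    return keep, len(new) - keep


doc_a = list(range(0, 1000))
doc_b = list(range(1000, 2000))
doc_c = list(range(2000, 2500))

# Appending a new document: only the new tokens need encoding.
print(update_plan(doc_a + doc_b, doc_a + doc_b + doc_c))  # (2000, 500)

# Editing the first token of doc_a invalidates everything after it.
edited_a = [-1] + doc_a[1:]
print(update_plan(doc_a + doc_b, edited_a + doc_b))  # (0, 2000)
```

The asymmetry suggests one cheap design choice: placing stable material first and frequently changing material last keeps most updates on the inexpensive, append-only path. It does not help with edits near the start of the knowledge base, which still force a near-complete rebuild.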

Information Fidelity

CAG assumes that standard KV caching can effectively capture all the key information in a long preloaded document D. But as D grows dramatically in length, say to millions of tokens, that assumption becomes harder to take for granted.

Can the standard KV cache still retain all that information accurately, or at least with minimal loss? Is there a risk that earlier content gets diluted or overwritten by later parts, making it harder for the model to access the full knowledge during inference?

This isn't just a technical detail — it could end up defining the upper limits of what CAG can achieve.
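A deliberately simplified model makes the dilution concern concrete. In a standard KV cache no entry is literally overwritten, since every token's keys and values are stored; the more plausible failure mode is that attention struggles to single out the relevant entries. Assume (purely for illustration, not as a measurement of any real model) a single softmax attention head, one relevant token whose score exceeds all others by a fixed gap, and N equally scored distractors. The relevant token's weight is then 1 / (1 + N * exp(-gap)). The function names below are hypothetical.

```python
import math


def needle_weight(score_gap: float, n_distractors: int) -> float:
    # Softmax weight on one relevant token when n_distractors other tokens
    # all score score_gap lower (simplified single-head model).
    return 1.0 / (1.0 + n_distractors * math.exp(-score_gap))


def gap_needed(n_distractors: int, target_weight: float) -> float:
    # Solve 1 / (1 + n * exp(-gap)) = target_weight for gap.
    return math.log(n_distractors * target_weight / (1.0 - target_weight))


for n in (1_000, 100_000, 1_000_000):
    print(f"{n:>9} distractors: weight {needle_weight(10.0, n):.3f}, "
          f"gap for weight 0.5: {gap_needed(n, 0.5):.1f}")
```

With a fixed gap of 10, the weight on the relevant token falls from about 0.96 at a thousand distractors to about 0.02 at a million. Keeping it constant requires the score gap to grow with log N, which is one concrete way the fidelity question could show up in practice.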

Cache Granularity and Structure

Right now, CAG seems to treat the entire knowledge base as a single monolithic block when caching. But is that really the most efficient approach?

If the knowledge base has some internal structure, like chapters or thematic sections, wouldn’t it make sense to explore a more fine-grained, structured caching strategy? For example, the knowledge base could be split into chunks, related chunks could be linked to one another, and only the chunks relevant to a given query could be loaded at inference time.

This could ease the burden of handling extremely long contexts and improve overall efficiency. Of course, it also adds a layer of complexity — but it might be worth it.
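A minimal sketch of the chunked idea, under heavy assumptions: each section gets its own precomputed cache (represented here only by a token count), and at query time a simple word-overlap score decides which cached chunks to load within a context budget. `CachedChunk`, `precompute`, and `select` are hypothetical names, and whitespace word counts stand in for real token counts.

```python
import re
from dataclasses import dataclass


def words(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


@dataclass
class CachedChunk:
    title: str
    text: str
    n_tokens: int  # placeholder for a real per-chunk KV cache


def precompute(sections: dict[str, str]) -> list[CachedChunk]:
    # Offline: one cache entry per section instead of one monolithic cache.
    return [CachedChunk(title, body, len(body.split())) for title, body in sections.items()]


def select(query: str, chunks: list[CachedChunk], budget: int) -> list[CachedChunk]:
    # Online: rank chunks by word overlap with the query, then greedily load
    # the relevant ones that still fit within the token budget.
    q = words(query)
    scored = [(len(q & words(c.title + " " + c.text)), c) for c in chunks]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    chosen, used = [], 0
    for score, chunk in scored:
        if score == 0:
            break  # remaining chunks share no words with the query
        if used + chunk.n_tokens <= budget:
            chosen.append(chunk)
            used += chunk.n_tokens
    return chosen


sections = {
    "Refunds": "Refunds are issued within 14 days of a return.",
    "Shipping": "Orders ship within 2 business days.",
    "Warranty": "The warranty covers defects for one year.",
}
chunks = precompute(sections)
print([c.title for c in select("How long do refunds take?", chunks, budget=50)])
```

Two caveats are worth stating. First, the selection step is effectively a lightweight retriever, so this design moves CAG partway back toward RAG, including the risk of picking the wrong chunk. Second, caches precomputed per chunk are not equivalent to one cache over the whole text: each chunk was encoded without attending to the others and starting from its own positions, so a real implementation would need to handle position offsets and accept, or repair, the missing cross-chunk attention.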