Revisiting Chunking in the RAG Pipeline

Unveiling the Cutting-Edge Advances in Chunking

Chunking involves dividing a long text or document into smaller, logically coherent segments or “chunks.” Each chunk usually contains one or more sentences, with the segmentation based on the text’s structure or meaning. Once divided, each chunk can be processed independently or used in subsequent tasks, such as retrieval or generation.

The role of chunking in the mainstream RAG pipeline is shown in Figure 1.

In the previous article, we explored various methods of semantic chunking, explaining their underlying principles and practical applications. These methods included:

Embedding-based methods: When the similarity between consecutive sentences drops below a certain threshold, a chunk boundary is introduced.

Model-based methods: Utilize deep learning models, such as BERT, to segment documents effectively.

LLM-based methods: Use LLMs to construct propositions, achieving more refined chunks.

However, since the previous article was published on February 28, 2024, there have been significant advancements in chunking over the past few months. Therefore, this article presents some of the latest developments in chunking within the RAG pipeline, focusing primarily on the following topics:

LumberChunker: A more dynamic and contextually aware chunking method.

Mix-of-Granularity(MoG): Optimizes the chunking granularity for RAG.

Prepare-then-Rewrite-then-Retrieve-then-Read(PR3): Enhances retrieval precision and contextual relevance by generating synthetic question-answer pairs (QA pairs) and Meta Knowledge (MK Summaries) Summaries.

Chunking-Free In-Context Retrieval(CFIC): Eliminates the chunking process by utilizing encoded hidden states of documents.

For each topic, additional thoughts and insights are offered.

LumberChunker

Key Idea

LumberChunker’s key idea is to dynamically segment long-form texts into contextually coherent chunks using a large language model, optimizing information retrieval by dynamically segmenting text to maintain semantic coherence and relevance.

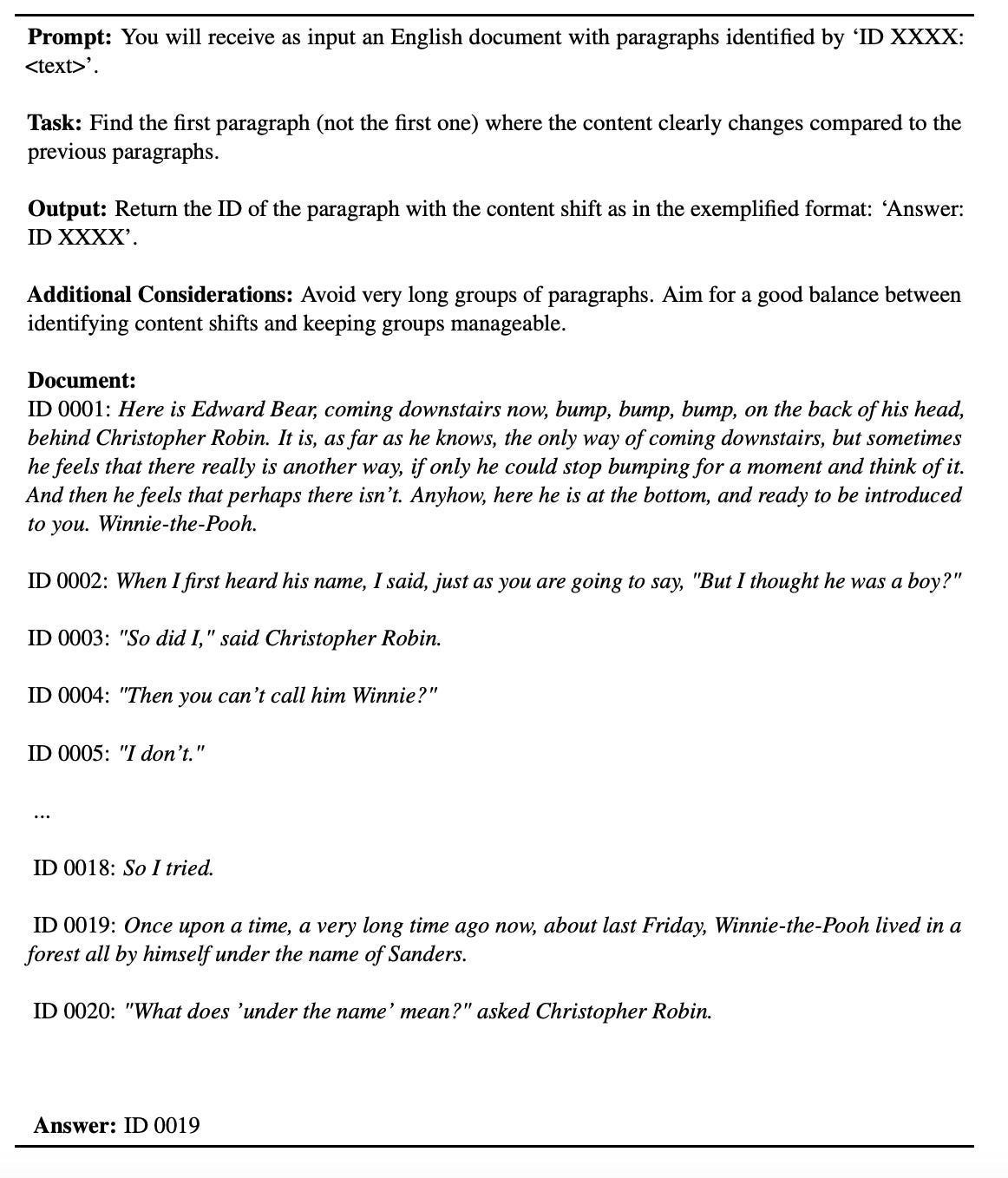

Figure 2 illustrates the LumberChunker pipeline, where documents are first segmented paragraph-wise, then paragraphs are grouped together until a predefined token count is exceeded, and finally, a model like Gemini identifies significant content shifts, marking the boundaries for each chunk; this process is repeated cyclically for the entire document.

Here’s a streamlined breakdown of its workflow:

Paragraph-wise Segmentation: The document is first divided into individual paragraphs, each assigned a unique ID. This initial step creates manageable units of text that serve as the foundation for further processing.

Grouping Paragraphs: These paragraphs are then grouped sequentially into a group (referred to as

Gi) until the total token count exceeds a set thresholdθ, which is optimally set around 550 tokens. This threshold is crucial for balancing the context provided to the model without overwhelming it.Identifying Content Shifts: The group

Gi is analyzed by the LLM (e.g., Gemini 1.0-Pro), which identifies the precise point where the content shifts significantly. This point marks the boundary between the current chunk and the next.Iterative Chunk Formation: After identifying the content shift, the document is iteratively segmented, with each new group starting from the identified shift point in the previous group. This ensures each chunk is contextually coherent.

Optimizing Chunk Size: The chosen threshold

θensures that chunks are neither too small, risking loss of context, nor too large, risking model overload. The balance around 550 tokens is found to be optimal for maintaining retrieval accuracy.

Since LumberChunker is open-source, the corresponding code can be found on GitHub.

Evaluation

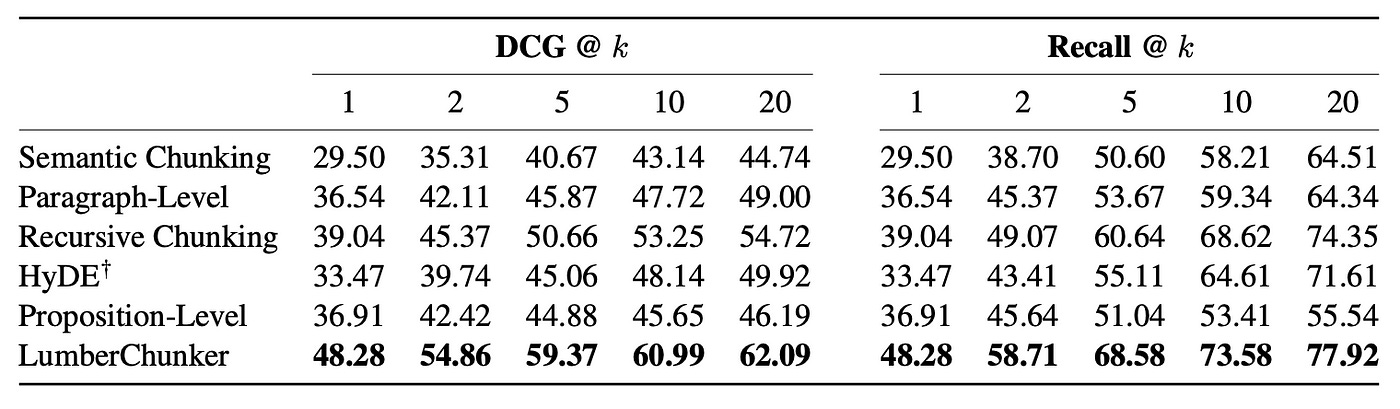

LumberChunker was rigorously evaluated using the GutenQA benchmark, a collection of 3000 question-answer pairs derived from 100 public domain narrative books.

Figure 4 highlights LumberChunker’s superior performance in retrieval tasks, with a 7.37% improvement in DCG@20 over the most competitive baseline.

It’s worth mentioning:

Recursive Chunking refers to ‘RecursiveCharacterTextSplitter from LangChain’.

Semantic Chunking refers to ‘5 Levels of Text Splitting,’ which was also introduced in the previous article (Embedding-based Methods section).

Proposition-Level refers to ‘Dense X Retrieval,’ which was also introduced in the previous article (LLM-based Methods section).

Compared to other chunking methods like semantic chunking, paragraph-level chunking, and recursive chunking, LumberChunker consistently outperforms these baselines, especially in retrieval tasks.

LumberChunker’s dynamic approach ensures that each chunk is contextually cohesive, offering a significant advantage over proposition-level chunking, which can be overly granular for narrative texts.

Computational Trade-offs in LumberChunker

The computational efficiency of LumberChunker compared to other chunking methods is shown in Figure 5. The key takeaway is that while LumberChunker significantly improves retrieval performance, it does so at a higher computational cost, especially with larger documents.

Recursive Chunking: This method is the fastest due to its simplicity and lack of reliance on LLMs. Even though its processing time increases with document size, it remains efficient because it doesn’t involve complex API requests.

HyDE: Despite using LLMs, HyDE maintains a constant processing time for all document sizes because it limits the number of LLM queries to a fixed number (30 per book).

LumberChunker, Semantic, and Proposition-Level chunking: they show significant increases in completion time with larger documents. Notably, both Semantic and Proposition-Level chunking can be optimized through asynchronous OpenAI API requests, greatly reducing completion times. However, LumberChunker’s dynamic LLM queries prevent such optimization. Despite LumberChunker’s superior retrieval performance compared to other baselines, there remains room for further improvement.

Thoughts and Insights about LumberChunker

By dynamically adjusting chunk sizes based on semantic shifts, LumberChunker offers a novel approach that addresses key limitations in existing chunking methods, particularly in handling long-form narrative texts.

However, its reliance on LLMs, while effective, introduces computational overheads, making it potentially less scalable for large-scale applications. Additionally, its performance in more structured texts, like legal documents, remains an open question.

Future improvements could focus on optimizing the computational efficiency of LumberChunker or exploring hybrid approaches that combine its strengths with other chunking techniques.

Mix-of-Granularity(MoG)

Overview

Consider a scenario where a medical RAG system retrieves information from both textbooks and medical knowledge graphs. The optimal chunk size for retrieving data from these two sources differs significantly due to their distinct data structures — textbooks have long, contextually rich passages, whereas knowledge graphs consist of short, interconnected terms. Using a fixed chunk size may result in irrelevant or insufficient data retrieval, thus hampering the performance of the LLM.