PMA: Adaptive and Parallel Documents Understanding in Multi-Agent Systems — AI Innovations and Insights 65

Welcome back! Let's dive into Chapter 65 of this insightful series.

Why Traditional Multi-Agent LLM Setups Fall Short

Here's the problem: most multi-agent LLM systems stick to a rigid setup — fixed roles, tasks executed one after another. That might work for simple, predictable jobs, but it breaks down fast when things get complicated: ambiguous goals, new information mid-process, or uneven agent performance. The result? Redundant work, error chains, and context drifting out of sync.

What we actually need is a framework that can reassign work among agents on the fly, let agents review and challenge each other, and run in parallel like a real professional team, constantly improving as it works.

Parallelism Meets Adaptiveness Architecture

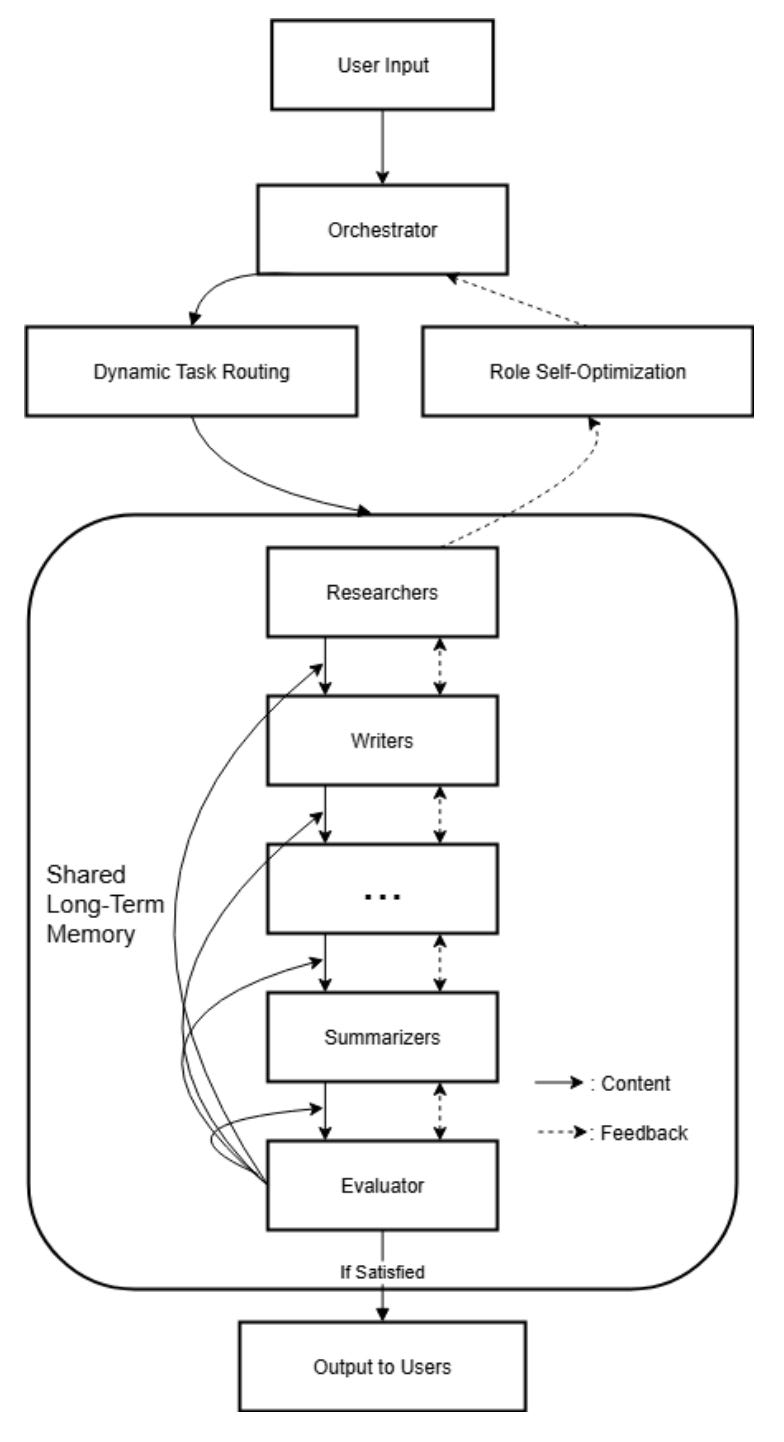

Figure 1 shows the Parallelism Meets Adaptiveness (PMA) Architecture:

Orchestrator – Breaks down the task, maps dependencies, and either calls the right specialist agent or kicks off a parallel competition.

Role Agents – Each one brings deep expertise in a specific area, such as extracting risk disclosures, summarizing MD&A (Management's Discussion and Analysis) sections, spotting off-balance-sheet arrangements, or handling compliance Q&A.

Shared Memory – Stores intermediate outputs and metadata to prevent duplicate work and keep terminology consistent across the team.

Evaluator – Scores each candidate result using the evaluation function E(o), then selects the best.

Feedback Bus – Monitors for inconsistencies and routes rework requests to the right place.

Think of it as a well-drilled team where the orchestrator is the project manager, role agents are your domain experts, shared memory is the team wiki, the evaluator is your quality control, and the feedback bus is the fast lane for fixing problems before they snowball.
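As a rough illustration of the orchestrator's dependency mapping and the shared memory, here is a minimal Python sketch. Everything in it is hypothetical: the subtask names, the `SharedMemory` class, and the `plan` helper are illustrations, not PMA's actual implementation. It assumes dependencies arrive as a mapping from each subtask to its prerequisites and uses the standard library's `graphlib.TopologicalSorter` (Python 3.9+) to group subtasks into stages whose members could run in parallel.

```python
from graphlib import TopologicalSorter


class SharedMemory:
    """Hypothetical team 'wiki': cached outputs plus an agreed glossary."""

    def __init__(self):
        self.outputs = {}   # subtask name -> stored output
        self.glossary = {}  # synonym -> preferred term

    def get_or_run(self, subtask, run_fn):
        # Return the stored result if this subtask already ran (no duplicate work).
        if subtask not in self.outputs:
            self.outputs[subtask] = run_fn()
        return self.outputs[subtask]

    def canonical(self, term):
        # Map a synonym to the team's preferred term to keep terminology consistent.
        return self.glossary.get(term, term)


def plan(dependencies):
    """Group subtasks into stages; subtasks in the same stage do not depend on each other."""
    sorter = TopologicalSorter(dependencies)
    sorter.prepare()
    stages = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        stages.append(ready)
        sorter.done(*ready)
    return stages


# Hypothetical 10-K decomposition: each subtask maps to the subtasks it depends on.
deps = {
    "extract_risks": set(),
    "summarize_mdna": set(),
    "find_off_balance_sheet": set(),
    "compliance_qa": {"extract_risks", "summarize_mdna", "find_off_balance_sheet"},
}
print(plan(deps))
# → [['extract_risks', 'find_off_balance_sheet', 'summarize_mdna'], ['compliance_qa']]

memory = SharedMemory()
memory.glossary["Management's Discussion and Analysis"] = "MD&A"
print(memory.canonical("Management's Discussion and Analysis"))  # → MD&A
```

The three independent extraction subtasks land in one stage and compliance Q&A is scheduled after them. `get_or_run` returns the cached output on repeat calls, which is the duplicate-work guard the shared memory is meant to provide.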

Three Adaptive Collaboration Engines

PMA combines competitive parallelism, dynamic routing, and two-way feedback to make LLM teams both sharper in their strengths and quicker at catching each other's mistakes.

Parallel Competition: Let the best idea win. Multiple agents tackle high-risk or ambiguous tasks at the same time, with results scored and the best one selected.

Dynamic Routing: The right agent, at the right time. Reassigns tasks in real time based on confidence, complexity, or workload.

Two-Way Feedback Loops: Fix only what's broken. Downstream agents can flag issues and send them back upstream for quick fixes — no need to rerun the whole process.

Instead of agents plodding along in a fixed order, PMA turns the workflow into a living system — quick to adapt, self-correcting, and always pushing for better results.

Deep Dive: The "Black Magic" Behind Each Module

Parallel Competition — Many Sprints, One Winner

When the orchestrator's confidence drops below a set threshold (θ), PMA fires off k parallel runs: multiple agents independently tackle the same subtask.

An evaluator scores each output based on factuality, coherence, and relevance — and, in regulated environments, regulatory alignment as well. The top-scoring output moves downstream, while the rest stay in shared memory for audits or rollbacks.

Why it works: lowers the risk of hallucinations and brings in more diverse lines of reasoning.

Example: In assessing liquidity risks from a company's earnings report, different agents focus on different angles — one looks at macroeconomic context, another at internal restructuring. The winning answer cites financial ratios and aligns perfectly with footnotes in the source document.
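The competition step described above can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions, not PMA's published code: each agent is a hypothetical callable returning `(output, confidence)`, `theta` plays the role of θ, the number of candidate agents is k, and E(o) is assumed to be a weighted sum of per-criterion scores. The article names the criteria but not how they are combined, so the weights are invented, and a dictionary of toy scores stands in for whatever judge produces them.

```python
from concurrent.futures import ThreadPoolExecutor

# Assumed weights for E(o); the article lists the criteria but not how they combine.
WEIGHTS = {"factuality": 0.4, "coherence": 0.2, "relevance": 0.2, "regulatory": 0.2}


def evaluate(scores):
    """Hypothetical E(o): weighted sum of per-criterion scores in [0, 1]."""
    return sum(w * scores.get(c, 0.0) for c, w in WEIGHTS.items())


def run_subtask(subtask, primary, candidates, score_fn, theta=0.7, memory=None):
    """Use the primary agent unless its confidence is below theta; then run a competition."""
    output, confidence = primary(subtask)
    if confidence >= theta:
        return output
    # k = len(candidates) agents tackle the same subtask independently and in parallel.
    with ThreadPoolExecutor(max_workers=len(candidates)) as pool:
        outputs = list(pool.map(lambda agent: agent(subtask)[0], candidates))
    ranked = sorted(outputs, key=lambda o: evaluate(score_fn(o)), reverse=True)
    if memory is not None:
        memory[subtask] = ranked  # keep every candidate for audits or rollbacks
    return ranked[0]


def make_agent(text, confidence):
    """Toy agent that always returns the same (output, confidence) pair."""
    return lambda subtask: (text, confidence)


# Toy per-criterion scores standing in for a real judge.
TOY_SCORES = {
    "macro view": {"factuality": 0.6, "coherence": 0.9, "relevance": 0.7, "regulatory": 0.5},
    "restructuring view": {"factuality": 0.9, "coherence": 0.8, "relevance": 0.9, "regulatory": 0.8},
}
audit_log = {}
best = run_subtask(
    "liquidity risk",
    primary=make_agent("draft", 0.4),
    candidates=[make_agent("macro view", 0.6), make_agent("restructuring view", 0.7)],
    score_fn=TOY_SCORES.get,
    memory=audit_log,
)
print(best)  # → restructuring view
```

The primary agent's confidence (0.4) falls below θ = 0.7, so both candidates run and the restructuring-focused answer wins under the assumed weights. The full ranked list stays in `audit_log`, mirroring how losing outputs are kept for audits or rollbacks.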

Dynamic Routing — Passing the Baton Mid-Race

Tasks aren't locked to fixed roles anymore. Agents can hand off work midstream based on confidence, complexity, or workload, ensuring the best-suited teammate takes over.

Why it works: reduces bottlenecks and makes better use of resources.

Example: A summarization agent hits a dense legal clause → instantly routes it to the compliance agent for expert handling.
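A minimal sketch of the handoff decision, assuming confidence and complexity are already available as numbers in [0, 1] and workload is measured as queue length. The `Agent` class, the `route` function, the skill labels, and both thresholds are hypothetical; PMA's actual routing policy is not spelled out here.

```python
from dataclasses import dataclass, field


@dataclass
class Agent:
    name: str
    skills: set = field(default_factory=set)
    queue_length: int = 0  # current workload: tasks waiting for this agent


def route(task_type, complexity, confidence, current, agents,
          theta=0.7, max_complexity=0.8):
    """Keep the task with `current` unless its confidence is low, the task is
    too complex, or it lacks the skill; otherwise hand the task to the
    least-loaded other agent that has the needed skill.
    """
    if (confidence >= theta and complexity <= max_complexity
            and task_type in current.skills):
        return current
    qualified = [a for a in agents if task_type in a.skills and a is not current]
    if not qualified:
        return current  # nobody better suited: keep the work where it is
    chosen = min(qualified, key=lambda a: a.queue_length)
    chosen.queue_length += 1  # the handoff adds to the new owner's workload
    return chosen


summarizer = Agent("summarizer", {"summary"})
compliance = Agent("compliance", {"legal_clause", "compliance_qa"}, queue_length=2)
backup = Agent("compliance_backup", {"legal_clause"}, queue_length=5)
team = [summarizer, compliance, backup]

# The summarizer hits a dense legal clause it is not confident about.
chosen = route("legal_clause", complexity=0.9, confidence=0.3,
               current=summarizer, agents=team)
print(chosen.name)  # → compliance
```

Low confidence on a legal clause triggers the handoff, and the less busy of the two qualified compliance agents takes it. Picking the least-loaded qualified agent is one simple way to fold workload into the decision; a real system might weigh the three signals differently.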

Two-Way Feedback — Fix as You Go

Downstream agents can flag exactly where things went wrong and send those specific items back upstream for targeted fixes.

Why it works: stops errors from snowballing and keeps the cost of corrections low.

Example: A QA agent spots a mismatch between debt disclosures and the balance sheet → pings the original agent to re-pull the data instead of redoing the whole process.
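The QA example above can be sketched as a targeted rework loop. This is a hypothetical illustration: the `Feedback` message, the `qa_check` rule with its 1% tolerance, the agent name `debt_agent`, and the toy figures are all assumptions, not PMA's actual feedback protocol. What it shows is that only the flagged upstream agent is re-run, not the whole pipeline.

```python
from dataclasses import dataclass


@dataclass
class Feedback:
    target_agent: str  # upstream agent that produced the suspect output
    output_key: str    # which output to re-pull
    reason: str        # what the downstream check found


def qa_check(outputs, tolerance=0.01):
    """Hypothetical QA rule: disclosed total debt must match the balance sheet."""
    disclosed = outputs["debt_disclosure"]["total_debt"]
    reported = outputs["balance_sheet"]["total_debt"]
    if abs(disclosed - reported) > tolerance * max(abs(reported), 1.0):
        return [Feedback("debt_agent", "debt_disclosure",
                         f"disclosed {disclosed} vs balance sheet {reported}")]
    return []


def run_with_feedback(outputs, agents, check=qa_check, max_rounds=3):
    """Re-run only the flagged upstream agents until the check passes or rounds run out."""
    for _ in range(max_rounds):
        issues = check(outputs)
        if not issues:
            return outputs, True
        for fb in issues:
            # Targeted fix: the flagged agent re-pulls one output; nothing else reruns.
            outputs[fb.output_key] = agents[fb.target_agent](fb.reason)
    return outputs, not check(outputs)


# Toy figures: the extracted disclosure disagrees with the balance sheet.
outputs = {
    "debt_disclosure": {"total_debt": 80.0},
    "balance_sheet": {"total_debt": 95.0},
}
calls = []


def debt_agent(reason):
    """Stub re-extraction; a real agent would re-read the filing."""
    calls.append(reason)
    return {"total_debt": 95.0}


fixed, ok = run_with_feedback(outputs, {"debt_agent": debt_agent})
print(ok, fixed["debt_disclosure"])  # → True {'total_debt': 95.0}
```

The first QA pass flags the mismatch, the stub `debt_agent` re-pulls the figure, and the second pass succeeds. `max_rounds` caps the loop so a persistent disagreement cannot cycle forever.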

Real-World Results: SEC 10-K Analysis

The task: From U.S. public companies' SEC 10-K filings, extract key risk factors, summarize annual financial performance, and answer compliance-related questions.

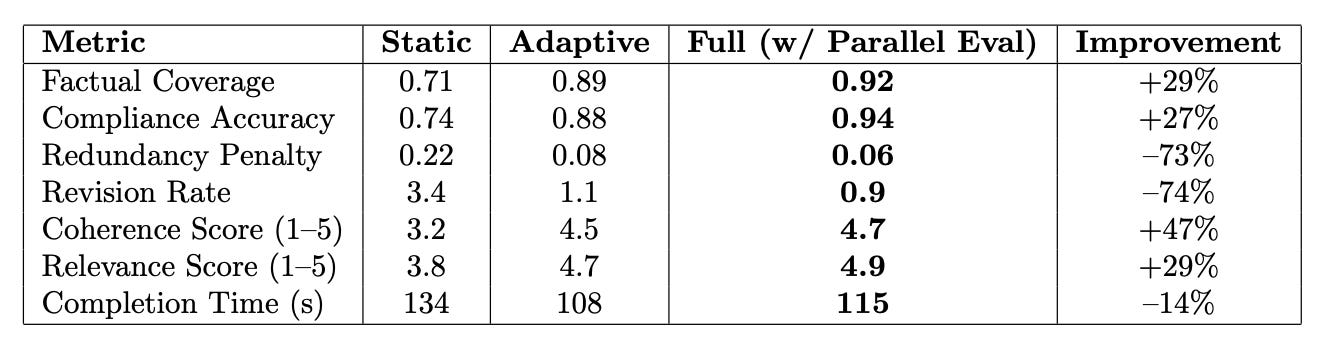

Three setups were tested:

Static baseline – Fixed roles, no adaptiveness.

Adaptive – Dynamic routing + two-way feedback.

Full PMA – Adaptive + parallel competition.
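The three setups form an ablation in which each one switches on more of PMA's mechanisms. One way to express them is as feature flags; the `PMAConfig` class and its field names below are hypothetical and only mirror the descriptions above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PMAConfig:
    dynamic_routing: bool
    two_way_feedback: bool
    parallel_competition: bool


# Hypothetical flags mirroring the three tested setups.
SETUPS = {
    "static_baseline": PMAConfig(False, False, False),
    "adaptive": PMAConfig(True, True, False),
    "full_pma": PMAConfig(True, True, True),
}

for name, cfg in SETUPS.items():
    # List the mechanisms switched on for this setup.
    enabled = [flag for flag, on in vars(cfg).items() if on]
    print(f"{name}: {enabled or ['fixed roles only']}")
# static_baseline: ['fixed roles only']
# adaptive: ['dynamic_routing', 'two_way_feedback']
# full_pma: ['dynamic_routing', 'two_way_feedback', 'parallel_competition']
```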

Figure 2 shows that Full PMA outperforms the other two setups.

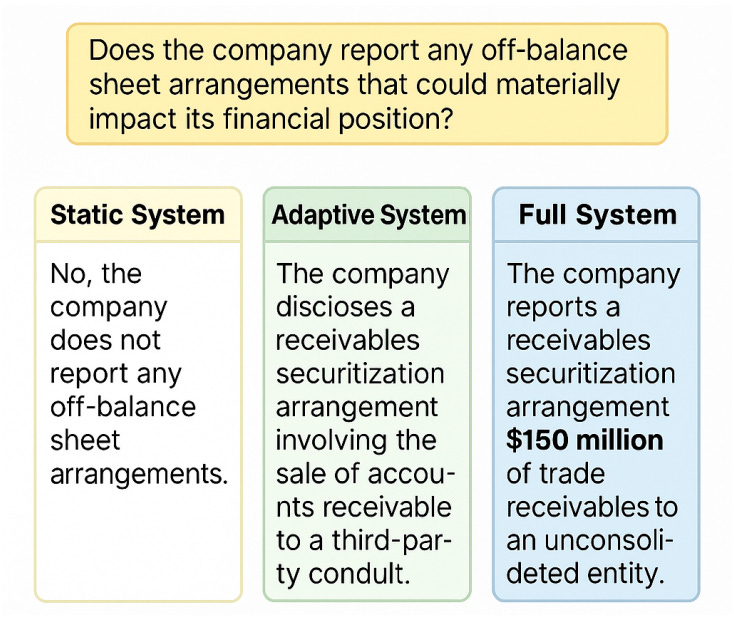

Figure 3 compares the three setups on a single disclosure:

The static system failed to detect the disclosure.

The adaptive system surfaced only a partial statement.

The full system accurately identified and contextualized the $150 million receivables securitization, in line with the gold-standard reference.

In other words, PMA didn't just "find" the right clue — it pulled the full thread, connecting numbers, context, and consequences into a complete, audit-ready insight.

Thoughts

At its core, PMA takes the classic idea of ensemble learning and cleverly lifts it from the model layer up to the architecture of multi-agent collaboration. By adding a competitive evaluation stage, it uses the determinism of system design to offset the uncertainty of any single large model's output.

But there's a catch: the ceiling of this framework is set entirely by the Evaluator's judgment. In other words, PMA shifts much of the problem's complexity into an even harder meta-challenge: designing a fair, all-knowing "AI referee."

That's the paradox for the future of multi-agent systems: raw execution power is easy to scale; precise judgment and decision-making remain the rare, hard-to-win prize.