Welcome to the 35th installment of this glamorous series.

Vivid Description

Think of HiRAG as a well-trained editorial team.

Reporters gather on-the-ground insights — that’s the local knowledge. Editors take a step back to organize those stories into broader themes — the global knowledge. And the editor-in-chief? They connect the dots between the front-line reporting and the big picture, highlighting what really matters — that’s the bridging layer.

When everyone works in sync, the final story comes out crisp, coherent, and compelling.

Overview

Open-Source Code: https://github.com/hhy-huang/HiRAG

As shown in Figure 1, existing graph-based RAG systems face two key challenges:

Semantically similar entities are often far apart in the graph structure.

Local knowledge (individual entities) and global knowledge (communities) are poorly connected, which leaves a knowledge gap between them.

HiRAG is a RAG-based approach designed to help LLMs handle complex tasks by incorporating hierarchical knowledge.

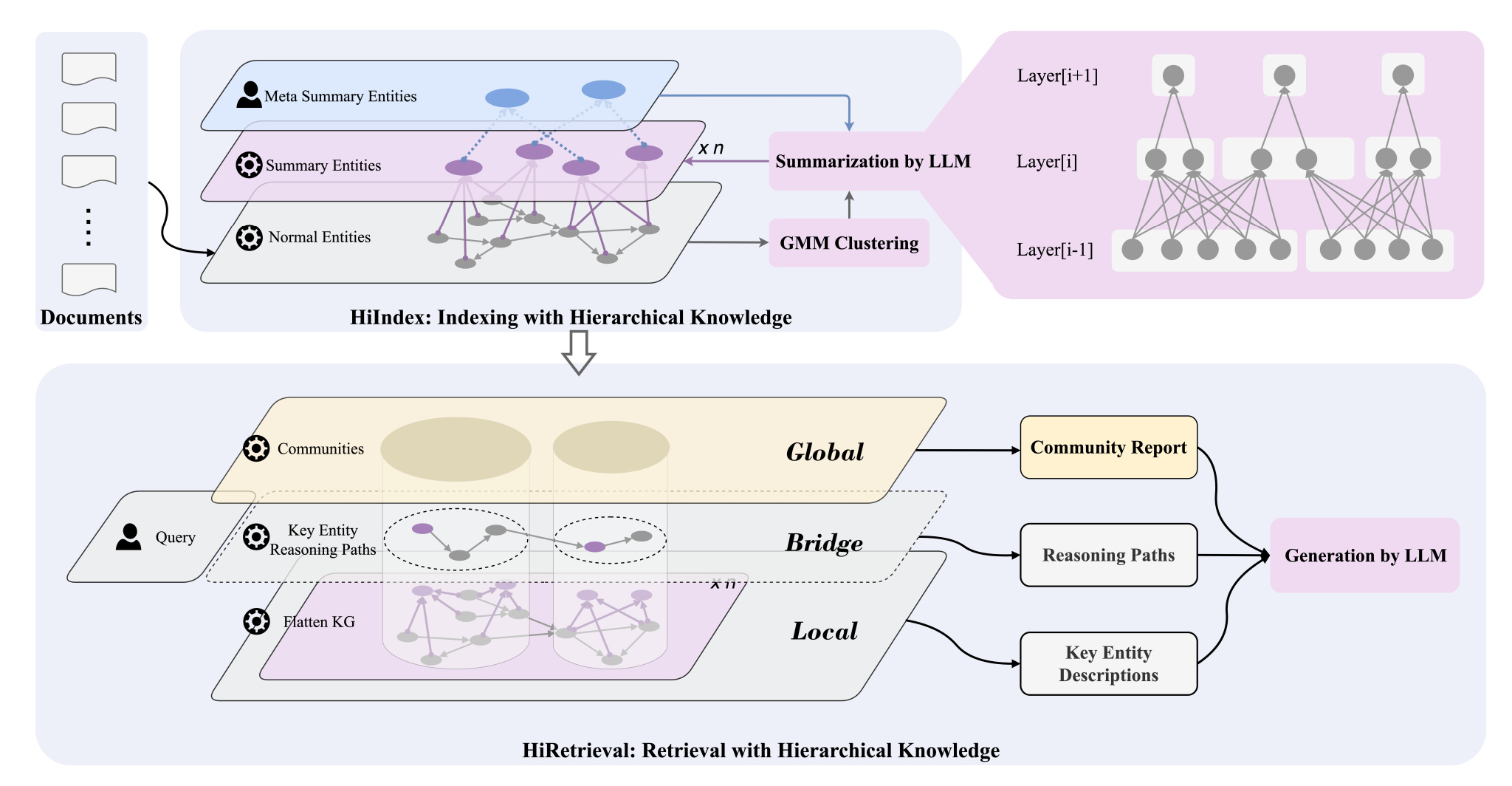

As shown in Figure 2, HiRAG consists of two main components:

HiIndex builds a multi-level knowledge graph. It clusters semantically similar entities using a Gaussian Mixture Model (GMM), and then summarizes each cluster with an LLM to create higher-level concepts. The summaries help strengthen semantic links between lower-level entities — for example, "BIG DATA" and "RECOMMENDATION SYSTEM" are connected through the concept of "DATA MINING".

HiRetrieval retrieves context on three levels: local entities, their surrounding clusters (or communities), and the reasoning paths that connect them. The bridging layer in HiRetrieval plays a key role in closing the gap between local and global knowledge, bringing the two into a more cohesive whole.
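To make HiIndex's layer construction concrete, here is a minimal pure-Python sketch that rests on several assumptions. The entity embeddings are toy 2-D vectors. The GMM is a small diagonal-covariance EM written from scratch, with a fixed cluster count `k` and deterministic initialization, which is not necessarily how HiRAG picks `k` or fits its mixture. `fake_llm_summary` is a hypothetical stub that stands in for the LLM summarization call. `gmm_cluster` and `build_layer` are illustrative names, not functions from the HiRAG repository.

```python
import math
from collections import defaultdict


def gmm_cluster(points, k, iters=50, eps=1e-6):
    """Fit a diagonal-covariance Gaussian mixture with EM; return each point's most likely component."""
    n, dim = len(points), len(points[0])
    # Deterministic initialisation (an assumption): seed the k means with points spread across the input.
    means = [list(points[i * n // k]) for i in range(k)]
    variances = [[1.0] * dim for _ in range(k)]
    weights = [1.0 / k] * k
    for _ in range(iters):
        # E-step: posterior responsibility of each component for each point, computed in log space.
        resp = []
        for p in points:
            logs = []
            for j in range(k):
                lp = math.log(weights[j])
                for d in range(dim):
                    v = variances[j][d]
                    lp -= 0.5 * (math.log(2 * math.pi * v) + (p[d] - means[j][d]) ** 2 / v)
                logs.append(lp)
            top = max(logs)
            probs = [math.exp(x - top) for x in logs]
            total = sum(probs)
            resp.append([q / total for q in probs])
        # M-step: re-estimate mixture weights, means and per-dimension variances.
        for j in range(k):
            nj = sum(r[j] for r in resp) + eps
            weights[j] = nj / n
            for d in range(dim):
                means[j][d] = sum(r[j] * p[d] for r, p in zip(resp, points)) / nj
                variances[j][d] = eps + sum(
                    r[j] * (p[d] - means[j][d]) ** 2 for r, p in zip(resp, points)) / nj
    return [max(range(k), key=lambda j: r[j]) for r in resp]


def build_layer(embeddings, k, summarize):
    """Cluster one layer's entities and turn every cluster into a higher-level summary entity."""
    names = list(embeddings)
    labels = gmm_cluster([embeddings[name] for name in names], k)
    clusters = defaultdict(list)
    for name, label in zip(names, labels):
        clusters[label].append(name)
    summary_entities, edges = [], []
    for members in clusters.values():
        concept = summarize(members)  # an LLM call in HiRAG; a stub in this sketch
        summary_entities.append(concept)
        edges.extend((member, concept) for member in members)
    return summary_entities, edges


def fake_llm_summary(members):
    """Hypothetical stand-in for an LLM prompt that names the concept a cluster shares."""
    return "DATA MINING" if "BIG DATA" in members else "PLANT BIOLOGY"


toy_embeddings = {
    "BIG DATA": (0.9, 0.1),
    "RECOMMENDATION SYSTEM": (0.8, 0.2),
    "DATA WAREHOUSE": (0.85, 0.05),
    "PHOTOSYNTHESIS": (0.1, 0.9),
    "CHLOROPHYLL": (0.15, 0.8),
    "LEAF": (0.05, 0.85),
}
layer_nodes, layer_edges = build_layer(toy_embeddings, k=2, summarize=fake_llm_summary)
for member, concept in layer_edges:
    print(f"{member} -> {concept}")
```

Each cluster yields one summary entity plus membership edges, so "BIG DATA" and "RECOMMENDATION SYSTEM" end up two hops apart through "DATA MINING". Feeding the summary entities' own embeddings back into `build_layer` would produce the next layer up.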

By organizing knowledge in this three-level structure, HiRAG provides LLMs with richer and more semantically coherent context, making them better equipped for complex reasoning and comprehension tasks.
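HiRetrieval's three levels of context can be sketched as follows. This is a simplified illustration, not the repository's code: the graph, embeddings, community assignments and reports are toy data, `hi_retrieve` and `top_n` are hypothetical names, and the bridge level is approximated as the shortest graph path between each pair of retrieved entities (HiRAG's exact path-selection rule may differ).

```python
import math
from collections import deque
from itertools import combinations


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def shortest_path(graph, start, goal):
    """Breadth-first search on an undirected adjacency dict; returns the node list or None."""
    parents = {start: None}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if node == goal:
            path = []
            while node is not None:
                path.append(node)
                node = parents[node]
            return path[::-1]
        for nxt in graph.get(node, ()):
            if nxt not in parents:
                parents[nxt] = node
                queue.append(nxt)
    return None


def hi_retrieve(query_vec, embeddings, graph, community_of, reports, top_n=2):
    """Assemble local, global and bridge context for one query."""
    # Local level: the entities most similar to the query.
    ranked = sorted(embeddings, key=lambda e: cosine(query_vec, embeddings[e]), reverse=True)
    local = ranked[:top_n]
    # Global level: reports of the communities those entities belong to.
    global_ctx = [reports[c] for c in sorted({community_of[e] for e in local})]
    # Bridge level (simplified): graph paths that link the retrieved entities to one another.
    bridge = []
    for a, b in combinations(local, 2):
        path = shortest_path(graph, a, b)
        if path:
            bridge.append(path)
    return {"local": local, "global": global_ctx, "bridge": bridge}


embeddings = {
    "BIG DATA": (1.0, 0.8),
    "RECOMMENDATION SYSTEM": (0.7, 1.0),
    "DATA MINING": (0.5, 0.3),
    "HADOOP": (1.0, 0.0),
    "COLLABORATIVE FILTERING": (0.0, 1.0),
}
edges = [
    ("BIG DATA", "HADOOP"),
    ("BIG DATA", "DATA MINING"),
    ("RECOMMENDATION SYSTEM", "DATA MINING"),
    ("RECOMMENDATION SYSTEM", "COLLABORATIVE FILTERING"),
]
graph = {}
for a, b in edges:
    graph.setdefault(a, []).append(b)
    graph.setdefault(b, []).append(a)
community_of = {
    "BIG DATA": "infrastructure", "HADOOP": "infrastructure",
    "RECOMMENDATION SYSTEM": "personalization", "COLLABORATIVE FILTERING": "personalization",
    "DATA MINING": "analytics",
}
reports = {
    "infrastructure": "Tools for storing and processing large datasets.",
    "personalization": "Methods for recommending items to individual users.",
    "analytics": "Techniques for discovering patterns in data.",
}
context = hi_retrieve((1.0, 1.0), embeddings, graph, community_of, reports)
print(context["bridge"])  # [['BIG DATA', 'DATA MINING', 'RECOMMENDATION SYSTEM']]
```

The two retrieved entities sit in different communities, and the bridge path through "DATA MINING" is what ties their local facts to each other and to the community-level reports.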

Thoughts and Insights

HiRAG is an attempt to rethink how knowledge is structured and semantically integrated — aiming to fix a key weakness in many existing RAG systems: the lack of meaningful structure.

That said, I have a few concerns:

High construction cost: HiRAG builds every layer of its hierarchy by clustering entities and asking an LLM to summarize each cluster. Although this is done offline, keeping the hierarchy up to date for a large, constantly evolving knowledge base could be resource-intensive.

Stability of the bridging layer: The paths used to connect local and global knowledge depend heavily on semantic similarity and the quality of GMM clustering. But GMM is notoriously sensitive to initial parameters and data distribution, which may lead to unstable or unreliable connections.

Robustness of hierarchical depth (k): The current method stops adding layers once the change rate of cluster sparsity falls below a 5% threshold. However, it's unclear whether this stopping criterion holds up across different domains or datasets; more validation is needed.
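The stopping rule can be sketched in a few lines. This article does not spell out the sparsity formula, so the definition below is an assumption: a layer's sparsity is 1 minus the fraction of ordered entity pairs that share a cluster (0 when everything lands in one cluster, 1 when every entity is alone), and the change rate is the relative difference between consecutive layers. Only the 5% threshold comes from the text; the function names and per-layer cluster sizes are hypothetical.

```python
def cluster_sparsity(cluster_sizes):
    """Assumed definition: 1 minus the share of ordered entity pairs that fall in the same cluster."""
    n = sum(cluster_sizes)
    if n < 2:
        return 1.0
    same_cluster_pairs = sum(s * (s - 1) for s in cluster_sizes)
    return 1.0 - same_cluster_pairs / (n * (n - 1))


def change_rate(prev, curr):
    """Relative change in sparsity between two consecutive layers."""
    if prev == 0:
        return 0.0 if curr == 0 else float("inf")
    return abs(curr - prev) / prev


def stopping_layer(layer_cluster_sizes, threshold=0.05):
    """Index of the first layer whose sparsity change rate drops below threshold, or None."""
    sparsities = [cluster_sparsity(sizes) for sizes in layer_cluster_sizes]
    for i in range(1, len(sparsities)):
        if change_rate(sparsities[i - 1], sparsities[i]) < threshold:
            return i
    return None


# Hypothetical cluster sizes per layer: 20 entities in 4 clusters, then 10 in 5, then 6 in 4.
layers = [[5, 5, 5, 5], [2, 2, 2, 2, 2], [2, 2, 1, 1]]
print(stopping_layer(layers))  # 2: sparsity goes 0.789 -> 0.889 (+12.6%) -> 0.867 (-2.5%)
```

Because the sparsity definition and the threshold are isolated in `cluster_sparsity` and `threshold`, the same harness could be rerun on cluster statistics from other datasets to probe how stable the chosen depth is.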