Graph RAG: An Approach to Answering Global Queries

Traditional RAG struggles with global queries directed at an entire text corpus, such as "What are the main themes in this dataset?". Such a query is inherently a Query-Focused Summarization (QFS) task rather than a straightforward retrieval task.

Graph RAG addresses this by using an LLM to build a graph-based text index in two stages:

First, it derives an entity knowledge graph from the source documents.

Then, it pre-generates community summaries for all groups of closely related entities.

Given a query, each community summary is used to generate a partial response, and these partial responses are then summarized again into the final answer.

Overview

Figure 1 shows the pipeline of Graph RAG. The purple box signifies the indexing operations, while the green box indicates the query operations.

Graph RAG uses LLM prompts tailored to the dataset's domain to detect, extract, and summarize nodes (e.g., entities), edges (e.g., relations), and covariates (e.g., claims).

Community detection partitions the graph into groups of elements (nodes, edges, covariates) that an LLM can summarize at both indexing time and query time.

The "global answer" to a given query is produced by a final round of query-focused summarization over all community summaries relevant to that query.

Each step in Figure 1 is explained below. Note that as of June 10, 2024, Graph RAG is not open source, so the discussion cannot refer to its source code.

Step 1: Source Documents → Text Chunks

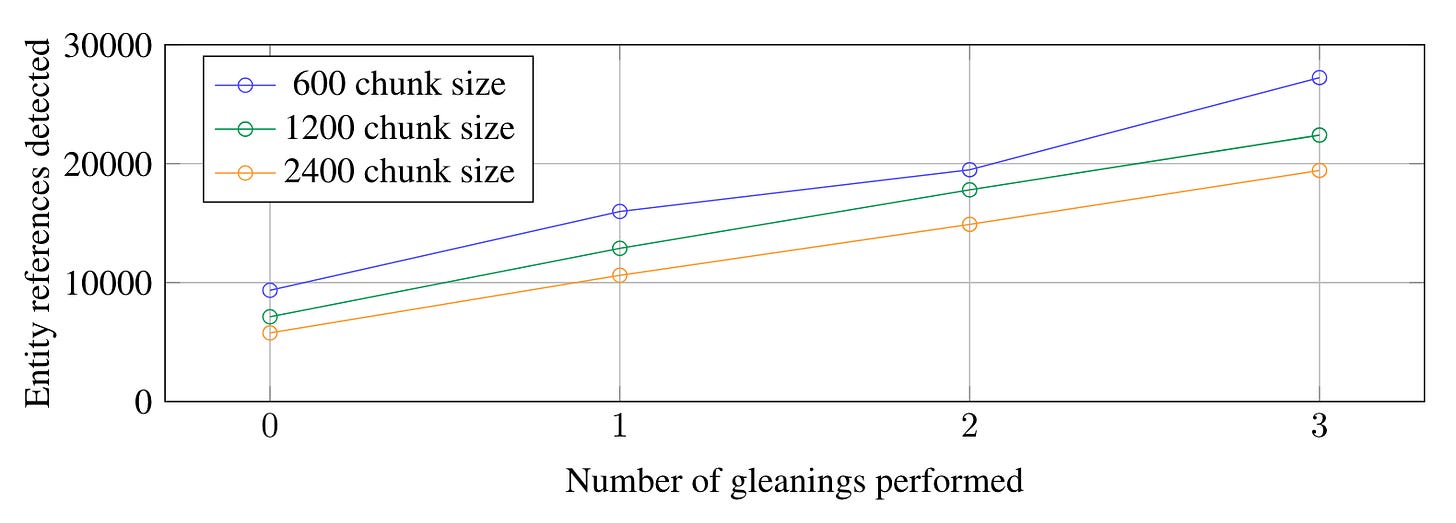

Choosing a chunk size involves a long-standing trade-off.

Longer chunks require fewer LLM calls. However, because of the limited context window, the LLM struggles to fully understand and process a large amount of information in a single pass, which can lower recall.

As illustrated in Figure 2, on the HotPotQA dataset a chunk size of 600 tokens extracts almost twice as many entity references as a chunk size of 2400 tokens.
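To make the cost side of this trade-off concrete, here is a minimal chunking sketch: the number of chunks it returns is the number of extraction calls the LLM must make. It assumes whitespace-separated words stand in for model tokens and adds an optional `overlap` parameter; both are illustrative choices, not details taken from Graph RAG.

```python
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def chunk_tokens(tokens: Sequence[T], chunk_size: int, overlap: int = 0) -> List[List[T]]:
    """Split tokens into chunks of at most chunk_size; consecutive chunks share `overlap` tokens."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be in [0, chunk_size)")
    step = chunk_size - overlap
    chunks: List[List[T]] = []
    for start in range(0, len(tokens), step):
        chunks.append(list(tokens[start:start + chunk_size]))
        if start + chunk_size >= len(tokens):
            break  # this chunk already reaches the end of the document
    return chunks


if __name__ == "__main__":
    # Assumption: whitespace-separated words approximate model tokens.
    tokens = ("word " * 4800).split()
    for size in (600, 2400):
        print(size, "tokens per chunk ->", len(chunk_tokens(tokens, size)), "LLM calls")
```

For a 4,800-token document this gives 8 extraction calls at 600 tokens per chunk and 2 calls at 2,400 tokens per chunk: the larger setting is four times cheaper, but it is the one that recovers fewer entities.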

Step 2: Text Chunks → Element Instances (Entities and Relationships)

Graph RAG builds the graph by extracting entities and the relationships between them from each chunk, using an LLM together with carefully engineered prompts.
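Because the source code is not available, the sketch below assumes a hypothetical output format: the prompt asks the LLM to emit one `entity|NAME|TYPE|DESCRIPTION` or `relationship|SOURCE|TARGET|DESCRIPTION` record per line, and a parser turns those lines into graph elements. The prompt text, the record format, and the upper-casing of names are all illustrative assumptions.

```python
from dataclasses import dataclass
from typing import List, Tuple

# Hypothetical prompt; the real Graph RAG prompts are tailored to the dataset's domain.
EXTRACTION_PROMPT = """Extract all entities and relationships from the text below.
Output one record per line:
entity|NAME|TYPE|DESCRIPTION
relationship|SOURCE|TARGET|DESCRIPTION

Text:
{chunk}"""


@dataclass(frozen=True)
class Entity:
    name: str
    entity_type: str
    description: str


@dataclass(frozen=True)
class Relationship:
    source: str
    target: str
    description: str


def parse_extraction(output: str) -> Tuple[List[Entity], List[Relationship]]:
    """Parse LLM output in the hypothetical pipe-delimited format; malformed lines are skipped."""
    entities: List[Entity] = []
    relationships: List[Relationship] = []
    for line in output.splitlines():
        parts = [p.strip() for p in line.split("|")]
        if len(parts) != 4:
            continue
        kind, first, second, description = parts
        if kind == "entity":
            # Upper-casing only merges names that differ in letter case.
            entities.append(Entity(first.upper(), second, description))
        elif kind == "relationship":
            relationships.append(Relationship(first.upper(), second.upper(), description))
    return entities, relationships
```

In use, `EXTRACTION_PROMPT.format(chunk=...)` is sent to the LLM and its reply is passed to `parse_extraction`; entity names become node keys and each relationship becomes an edge.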

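In addition, Graph RAG runs a multi-stage iterative process (called "gleanings" in the paper): after an extraction round, the LLM is asked to decide whether all entities have been extracted, a yes/no decision similar to a binary classification problem. If it answers that entities were missed, it is prompted to extract the missing ones, up to a specified maximum number of rounds.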
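The gleaning loop can be sketched as follows. `llm` is a hypothetical callable that takes the conversation so far and returns the model's reply. The prompt wording is illustrative, apart from the "MANY entities were missed" continuation, which paraphrases the one reported in the paper. The paper forces a strict yes/no answer with a logit bias; this sketch approximates that by checking whether the reply starts with "YES".

```python
from typing import Callable, List

# Hypothetical prompt wording, for illustration only.
EXTRACT = "Extract all entities and relationships from this text:\n{chunk}"
CHECK = "Were all entities extracted? Answer YES or NO."
CONTINUE = "MANY entities were missed in the last extraction. Add them below using the same format."


def extract_with_gleanings(chunk: str, llm: Callable[[str], str], max_gleanings: int = 1) -> List[str]:
    """Run one extraction plus up to `max_gleanings` follow-up rounds; return each round's raw output."""
    transcript = EXTRACT.format(chunk=chunk)
    first = llm(transcript)
    outputs = [first]
    transcript += "\n" + first
    for _ in range(max_gleanings):
        # Binary decision: does the LLM consider the extraction complete?
        transcript += "\n" + CHECK
        verdict = llm(transcript)
        if verdict.strip().upper().startswith("YES"):
            break
        # Entities were missed: ask for another round.
        transcript += "\n" + verdict + "\n" + CONTINUE
        more = llm(transcript)
        outputs.append(more)
        transcript += "\n" + more
    return outputs


if __name__ == "__main__":
    # A scripted fake LLM: extracts A, admits it missed something, extracts B, then says it is done.
    replies = iter(["entity|A|...", "NO", "entity|B|...", "YES"])
    print(extract_with_gleanings("some text", lambda prompt: next(replies), max_gleanings=3))
```

Each element of the returned list would then go through a parser like the one sketched for the extraction step, and the results from all rounds are combined.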

Step 3: Element Instances → Element Summaries → Graph Communities → Community Summaries

Extracting entities, relationships, and claims in the previous step is itself a form of abstractive summarization.

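However, Graph RAG does not stop there. Because the same element can be extracted many times across chunks, it uses the LLM for a further round of summarization that merges all instances of each element (entity node, relationship edge, or claim covariate) into a single block of descriptive text.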
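A minimal sketch of this merging step, assuming each extracted instance arrives as an (element key, description) pair, where the key might be an entity name or a string such as "SOURCE->TARGET" for an edge, and that `summarize` is a hypothetical wrapper around an LLM call. A plain string join stands in for the LLM in the usage example.

```python
from collections import defaultdict
from typing import Callable, Dict, Iterable, List, Tuple


def summarize_elements(
    instances: Iterable[Tuple[str, str]],
    summarize: Callable[[List[str]], str],
) -> Dict[str, str]:
    """Group (element_key, description) instances and merge each group into one description."""
    grouped: Dict[str, List[str]] = defaultdict(list)
    for key, description in instances:
        if description not in grouped[key]:  # drop verbatim repeats
            grouped[key].append(description)
    # Only call the (hypothetical) LLM summarizer when there is more than one description to merge.
    return {
        key: descriptions[0] if len(descriptions) == 1 else summarize(descriptions)
        for key, descriptions in grouped.items()
    }


if __name__ == "__main__":
    instances = [
        ("AZURE", "A cloud platform."),
        ("AZURE", "Microsoft's cloud service."),
        ("AZURE", "A cloud platform."),
        ("LEIDEN", "A community detection algorithm."),
    ]
    # Stand-in for an LLM summarization call.
    print(summarize_elements(instances, lambda descs: " ".join(descs)))
```

Skipping the LLM call for elements with a single description is a cost-saving choice of this sketch, not something the paper specifies.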

A potential concern is that the LLM may not always refer to the same entity with the same text. This could produce duplicate entity elements and, in turn, duplicate nodes in the graph.

In practice, this concern is largely mitigated.

Graph RAG applies a community detection algorithm to find the community structure of the graph, placing closely linked entities in the same community. Figure 3 shows the graph communities that the Leiden algorithm identifies in the MultiHop-RAG dataset.

As a result, even if the LLM does not extract every variant of an entity consistently, community detection can still connect these variants, provided they are well linked to a shared set of closely related entities. Once the variants fall into the same community, they are summarized together, so the different expressions or synonyms of one entity end up in the same report. This plays a role similar to entity disambiguation in knowledge graphs.

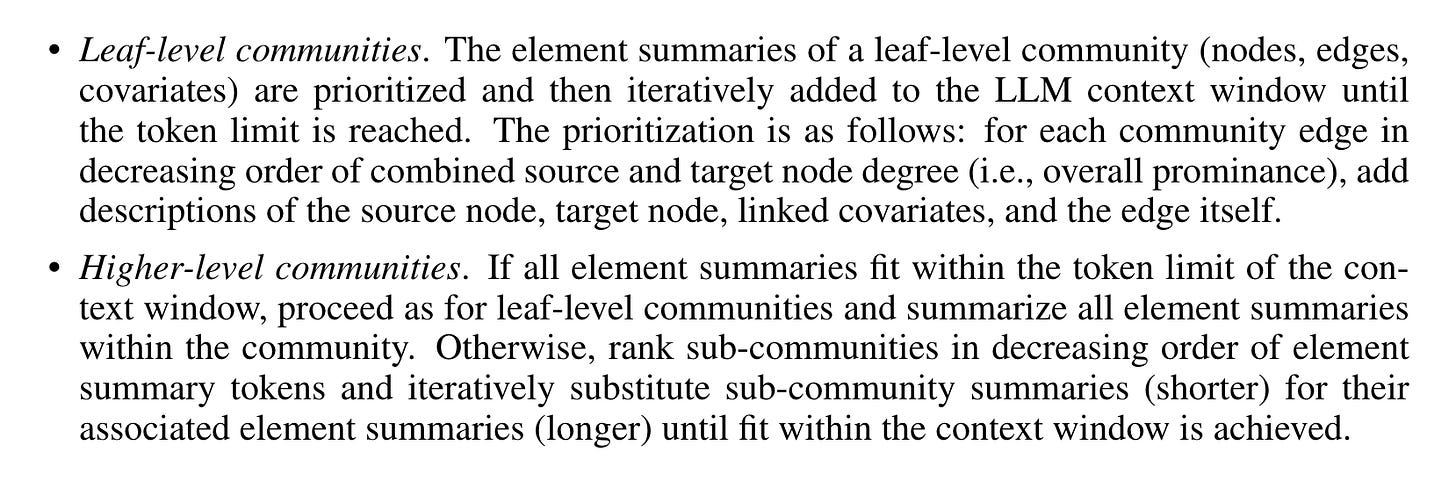
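The Leiden algorithm itself is more involved (it refines communities so that each one stays well connected) and is usually taken from a library. To show the idea without dependencies, the sketch below substitutes a much simpler technique, deterministic label propagation: each node repeatedly adopts the label most common among its neighbors, so densely linked nodes converge on a shared label. This is a stand-in for Leiden, not what Graph RAG uses, and it yields a single level rather than a hierarchy. The example graph, containing two surface forms of one company, is hypothetical.

```python
from collections import Counter, defaultdict
from typing import Dict, Iterable, List, Set, Tuple


def label_propagation(edges: Iterable[Tuple[str, str]], max_iter: int = 100) -> List[Set[str]]:
    """Group nodes into communities by repeatedly adopting the most common neighbor label."""
    adjacency: Dict[str, Set[str]] = defaultdict(set)
    for u, v in edges:
        adjacency[u].add(v)
        adjacency[v].add(u)

    labels = {node: node for node in adjacency}  # every node starts in its own community
    for _ in range(max_iter):
        changed = False
        for node in sorted(adjacency):  # a fixed visiting order keeps the result deterministic
            counts = Counter(labels[nb] for nb in adjacency[node])
            best = max(counts.values())
            # Ties are broken by the smallest label, again for determinism.
            new_label = min(label for label, c in counts.items() if c == best)
            if new_label != labels[node]:
                labels[node] = new_label
                changed = True
        if not changed:
            break

    communities: Dict[str, Set[str]] = defaultdict(set)
    for node, label in labels.items():
        communities[label].add(node)
    return sorted(communities.values(), key=sorted)


if __name__ == "__main__":
    # Hypothetical graph: "Microsoft" and "Microsoft Corp." are two surface forms of one entity.
    edges = [
        ("Microsoft", "Azure"), ("Microsoft Corp.", "Azure"),
        ("Microsoft", "Satya Nadella"), ("Microsoft Corp.", "Satya Nadella"),
        ("Azure", "Satya Nadella"),
        ("Leiden", "Louvain"), ("Louvain", "Modularity"), ("Leiden", "Modularity"),
    ]
    for community in label_propagation(edges):
        print(sorted(community))
```

The two surface forms are never linked directly, yet both share neighbors ("Azure", "Satya Nadella") and therefore land in the same community, separate from the Leiden/Louvain/Modularity cluster.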

Once the communities are identified, report-like summaries can be generated for each community in the Leiden hierarchy. These summaries are useful in their own right for understanding the global structure and semantics of the dataset, and they can also help make sense of the corpus even when no question has been asked.

Figure 4 shows how the community summaries are generated.
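According to the Graph RAG paper, a leaf-level community summary is built by adding element summaries to the LLM context in priority order until the token limit is reached: community edges are taken in decreasing order of the combined degree of their source and target nodes, and for each edge the descriptions of the source node, the target node, and the edge itself are added (the paper also adds linked claims, omitted here). The sketch below covers only that packing step. It assumes whitespace-separated words approximate tokens and leaves out the final LLM call that turns the packed context into a report.

```python
from dataclasses import dataclass
from typing import Dict, List, Set


@dataclass(frozen=True)
class Edge:
    source: str
    target: str
    description: str


def build_leaf_context(
    edges: List[Edge],
    node_descriptions: Dict[str, str],
    degree: Dict[str, int],
    max_tokens: int,
) -> str:
    """Pack node and edge descriptions into one context, most prominent edges first."""

    def n_tokens(text: str) -> int:
        return len(text.split())  # assumption: words approximate tokens

    # Prominence of an edge = combined degree of its endpoints.
    ranked = sorted(edges, key=lambda e: degree[e.source] + degree[e.target], reverse=True)
    lines: List[str] = []
    added_nodes: Set[str] = set()
    used = 0
    for edge in ranked:
        # dict.fromkeys avoids adding a node twice for a self-loop.
        endpoints = dict.fromkeys((edge.source, edge.target))
        pieces = [node_descriptions[n] for n in endpoints if n not in added_nodes]
        pieces.append(edge.description)
        cost = sum(n_tokens(p) for p in pieces)
        if used + cost > max_tokens:
            break  # token limit reached
        lines.extend(pieces)
        added_nodes.update(endpoints)
        used += cost
    return "\n".join(lines)
```

The returned string would be inserted into a summarization prompt. For higher-level communities whose element summaries do not fit, the paper substitutes shorter sub-community summaries, which this sketch does not attempt.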

Step 4: Community Summaries → Community Answers → Global Answer

We have now reached the final step: generating the global answer from the community summaries produced in the previous step.

Because the community structure is hierarchical, summaries from different levels are suited to answering different kinds of questions.

This raises another question: with community summaries available at multiple levels, which level strikes the best balance between detail and coverage?

Section 3 of the Graph RAG paper evaluates the different levels to identify the most suitable level of abstraction.

For a given community level, the global answer to any user query is generated, as shown in Figure 5.
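The paper describes this query-time process as a map-reduce: community summaries are randomly shuffled and packed into chunks of a pre-specified token size; each chunk is used to generate an intermediate answer together with a 0-100 helpfulness score; answers scoring 0 are discarded; and the remaining answers are added to the final context in descending order of score until the token limit is reached, after which the LLM writes the global answer. The sketch below follows those steps, with `map_llm` and `reduce_llm` as hypothetical stand-ins for LLM calls and whitespace-separated words approximating tokens. The paper runs the map step in parallel; here it runs sequentially for simplicity.

```python
import random
from typing import Callable, List, Tuple


def count_tokens(text: str) -> int:
    return len(text.split())  # assumption: words approximate tokens


def pack_chunks(summaries: List[str], chunk_tokens: int) -> List[str]:
    """Greedily pack summaries into chunks of at most chunk_tokens (an oversized summary gets its own chunk)."""
    chunks: List[str] = []
    current: List[str] = []
    size = 0
    for summary in summaries:
        n = count_tokens(summary)
        if current and size + n > chunk_tokens:
            chunks.append("\n".join(current))
            current, size = [], 0
        current.append(summary)
        size += n
    if current:
        chunks.append("\n".join(current))
    return chunks


def global_answer(
    query: str,
    summaries: List[str],
    map_llm: Callable[[str, str], Tuple[str, int]],  # hypothetical: (query, chunk) -> (answer, score 0-100)
    reduce_llm: Callable[[str, List[str]], str],  # hypothetical: (query, ranked answers) -> final answer
    chunk_tokens: int,
    max_context_tokens: int,
    seed: int = 0,
) -> str:
    # 1. Shuffle community summaries (without mutating the input) and pack them into chunks.
    shuffled = list(summaries)
    random.Random(seed).shuffle(shuffled)
    chunks = pack_chunks(shuffled, chunk_tokens)

    # 2. Map: one scored intermediate answer per chunk; drop unhelpful (score 0) answers.
    partials = [map_llm(query, chunk) for chunk in chunks]
    partials = [(answer, score) for answer, score in partials if score > 0]

    # 3. Reduce: add the most helpful answers first until the context budget is used up.
    partials.sort(key=lambda p: p[1], reverse=True)
    context: List[str] = []
    used = 0
    for answer, _ in partials:
        n = count_tokens(answer)
        if used + n > max_context_tokens:
            break
        context.append(answer)
        used += n
    return reduce_llm(query, context)
```

Shuffling before chunking spreads related summaries across chunks, so no single map call sees only one region of the corpus; the fixed `seed` is an addition of this sketch to make runs reproducible.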

Conclusion

This article introduced Graph RAG, a method that combines knowledge graph generation, RAG, and Query-Focused Summarization (QFS) to support a comprehensive understanding of an entire text corpus.