Welcome back, let’s dive into Chapter 60 of this insightful series!

Today, let’s take a look at three recent AI developments.

GLiNER2: An Efficient Multi-Task Information Extraction System with Schema-Driven Interface

Overview

Information extraction (IE) sits at the heart of many NLP applications. In practice, though, it often requires a specialized model for each task or relies on computationally expensive LLMs.

GLiNER2 takes a smarter path.

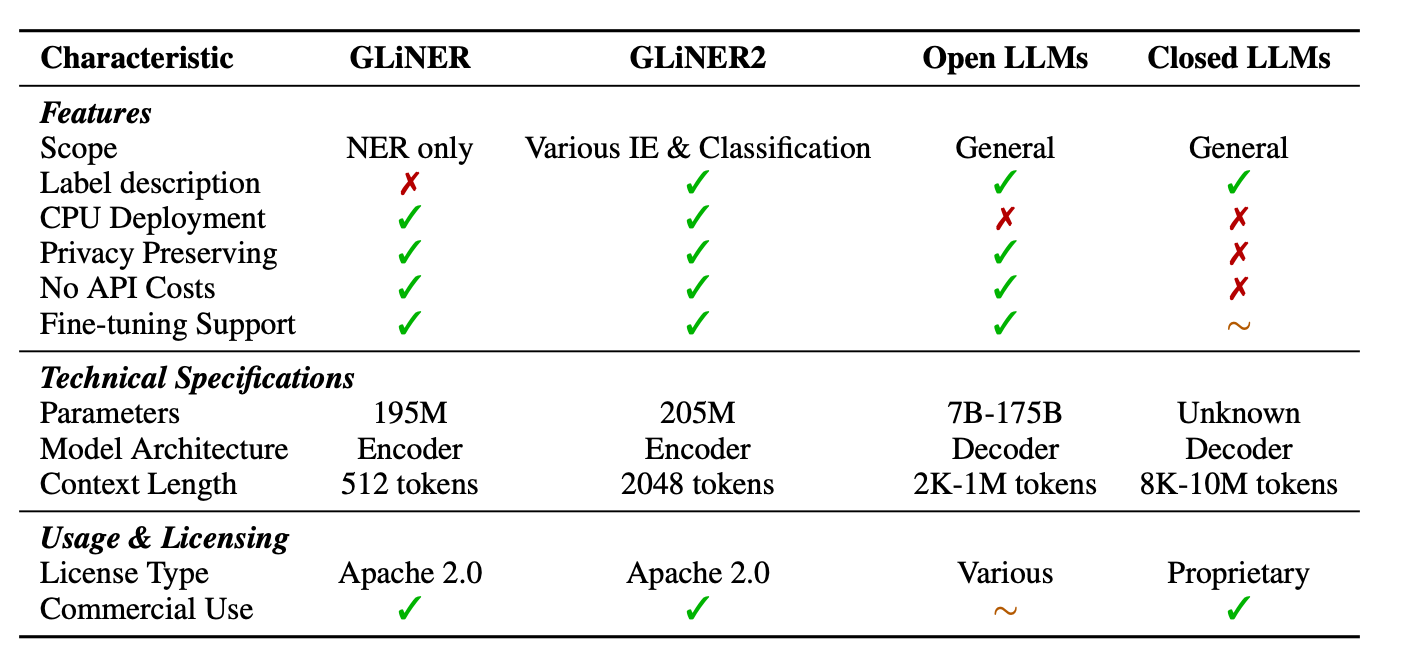

Instead of following the crowd and building on a decoder-heavy architecture, it opts for a leaner transformer encoder. The result? A compact model with just 205 million parameters that runs efficiently on CPUs, making it well suited to edge devices and resource-constrained environments.
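
To put "205 million parameters" in concrete terms, here is a back-of-the-envelope estimate of how much memory the weights alone occupy at common numeric precisions. This is a rough sketch: the post does not say which precision GLiNER2 ships in, so the dtypes below are assumptions, and activations, the tokenizer, and runtime overhead are ignored.

```python
# Rough weight-memory estimate for a 205M-parameter model.
# Assumption: the listed precisions are illustrative; the post does not state
# which one GLiNER2 actually uses. Only weights are counted here.
PARAMS = 205_000_000

BYTES_PER_PARAM = {"fp32": 4, "fp16/bf16": 2, "int8": 1}


def weight_megabytes(params: int, bytes_per_param: int) -> float:
    """Return weight storage in decimal megabytes (1 MB = 10**6 bytes)."""
    return params * bytes_per_param / 1e6


if __name__ == "__main__":
    for dtype, nbytes in BYTES_PER_PARAM.items():
        print(f"{dtype:>9}: {weight_megabytes(PARAMS, nbytes):,.0f} MB")
```

Even at full fp32 precision the weights come to roughly 820 MB, which fits comfortably in the RAM of an ordinary laptop or server CPU; that is the practical point behind the "runs on CPUs" claim.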

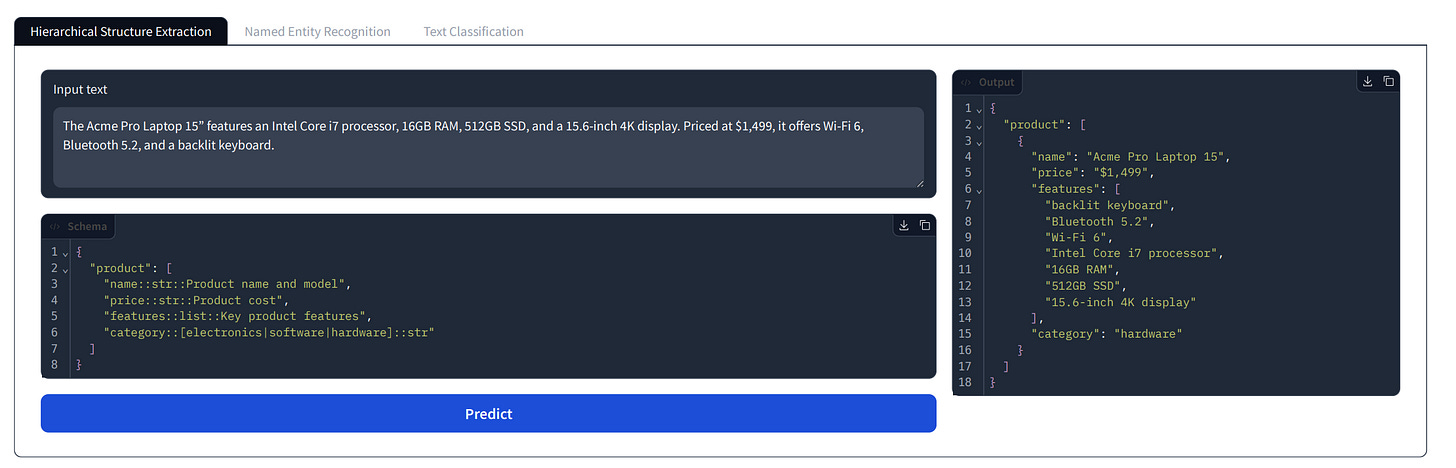

What really sets GLiNER2 apart is its ability to unify tasks that are usually siloed: named entity recognition (NER), text classification, and structured data extraction. It does this with a clever schema-driven task prompting mechanism that handles all of these in a single forward pass. This avoids the "model islands" problem: the fragmentation that comes from having to deploy a separate model for every task.
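
How can one encoder pass serve several tasks at once? A minimal sketch, assuming a GLiNER-style design in which the task prompts (task names plus their labels) and the input text are packed into one sequence, so a single encoder run produces embeddings for both the labels and the text spans. Everything here is hypothetical: the `[P]`/`[L]`/`[SEP]` marker tokens, the `Schema` class, and the `build_input`/`match` helpers are illustrative names, not GLiNER2's actual API. The encoder itself is omitted; toy vectors stand in for its output embeddings, and `match` only covers span-to-label scoring for entities.

```python
import math
from dataclasses import dataclass, field

# Hypothetical marker tokens; GLiNER2's real special tokens may differ.
TASK, LABEL, SEP = "[P]", "[L]", "[SEP]"


@dataclass
class Schema:
    """Hypothetical multi-task schema: one object describes every task."""
    entities: list[str] = field(default_factory=list)
    classifications: dict[str, list[str]] = field(default_factory=dict)
    structures: dict[str, list[str]] = field(default_factory=dict)


def build_input(schema: Schema, text_tokens: list[str]):
    """Flatten all tasks in the schema plus the text into ONE token sequence.

    Returns the tokens and a map from (task, label) to the position of that
    label's marker token, whose encoder embedding would represent the label.
    """
    tokens: list[str] = []
    label_pos: dict[tuple[str, str], int] = {}

    def add_task(task_name: str, labels: list[str]) -> None:
        tokens.extend([TASK, task_name])
        for lab in labels:
            label_pos[(task_name, lab)] = len(tokens)  # index of the [L] marker
            tokens.extend([LABEL, lab])

    if schema.entities:
        add_task("entities", schema.entities)
    for name, labels in schema.classifications.items():
        add_task(name, labels)
    for name, fields in schema.structures.items():
        add_task(name, fields)
    tokens.append(SEP)
    tokens.extend(text_tokens)
    return tokens, label_pos


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def match(span_vecs: dict[str, list[float]],
          label_vecs: dict[str, list[float]],
          threshold: float = 0.5):
    """Score every (span, label) pair by a dot product passed through a sigmoid;
    keep pairs whose probability is strictly above the threshold."""
    results = []
    for span, s in span_vecs.items():
        for label, lv in label_vecs.items():
            p = sigmoid(sum(a * b for a, b in zip(s, lv)))
            if p > threshold:
                results.append((span, label, round(p, 3)))
    return results


if __name__ == "__main__":
    schema = Schema(entities=["person", "company"],
                    classifications={"sentiment": ["positive", "negative"]})
    tokens, positions = build_input(schema, ["Tim", "Cook", "leads", "Apple"])
    print(tokens)
    print(positions)
    # Toy stand-ins for encoder outputs (span and label embeddings).
    spans = {"Tim Cook": [2.0, -1.0], "Apple": [-1.0, 2.0]}
    labels = {"person": [2.0, -1.0], "company": [-1.0, 2.0]}
    print(match(spans, labels))
```

The design choice being illustrated is that adding a task only lengthens the prompt portion of the input; it does not add another model to deploy, which is what keeps the "model islands" from forming.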

Thoughts


Subscribe to AI Exploration Journey to keep reading this post and get 7 days of free access to the full post archives.