From Retrieval to Reasoning: The Next-Gen AI Search Paradigm — AI Innovations and Insights 55

Welcome back! Let’s dive into Chapter 55 of this series.

As the volume of data and knowledge we interact with continues to explode, traditional search engines are increasingly falling short—especially when it comes to handling complex queries like cross-document reasoning or answering vague, open-ended questions.

While RAG systems offer some progress by combining document retrieval with LLMs, they’re often limited to single-turn, static responses. They struggle with more demanding tasks that require multi-step reasoning, tool use, or even basic error correction.

Humans, by contrast, tend to break problems down, use tools, reflect on their thinking, and adjust along the way. Most current AI systems simply don’t work this way.

"Towards AI Search Paradigm" introduces a new approach: a multi-agent search framework designed to mimic human cognition. With the ability to reason, plan, act, and generate collaboratively, this architecture aims to unlock a smarter, more accurate, and more scalable way to retrieve and understand information.

Overview

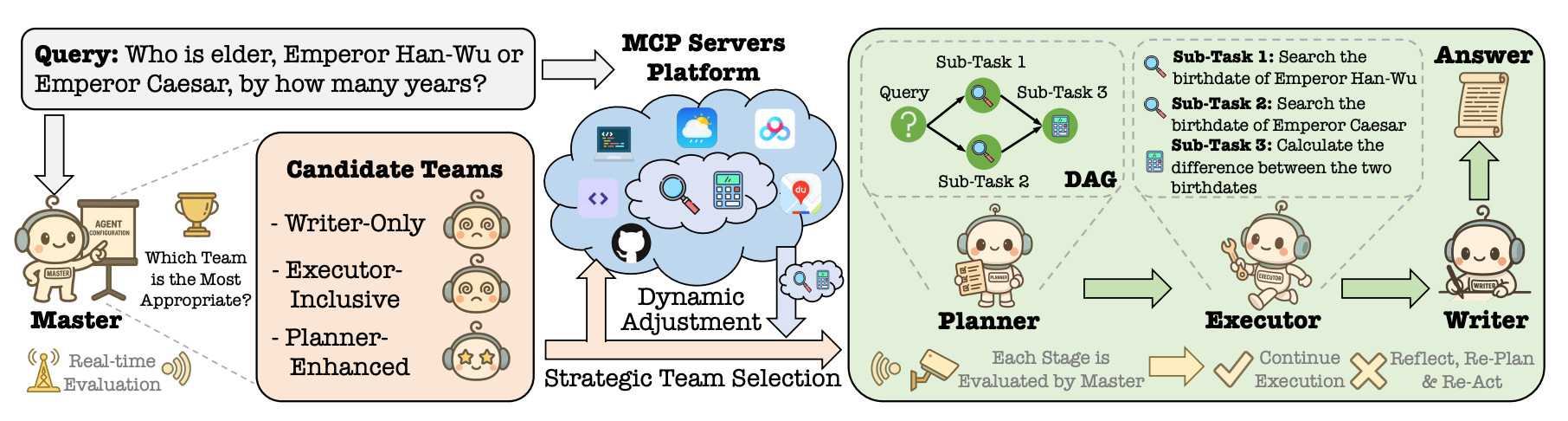

Figure 1 outlines the overall architecture of the AI Search Paradigm, designed to tackle complex information queries. Here’s how it works, step by step:

User Query: Everything starts with a user question—for example: "Who is elder, Emperor Han-Wu or Emperor Caesar, by how many years?"

Master Agent: This agent first assesses the complexity of the query. If it detects that the question requires multi-step reasoning, it delegates the task to a Planner.

Planner Agent: The Planner breaks the main question into smaller, manageable subtasks—such as finding each person’s birth year and then calculating the age difference. It also builds a Directed Acyclic Graph (DAG) to determine the logical order in which tasks need to be executed.

Executor Agent: Based on the plan, the Executor carries out each subtask in order. It uses external tools like search engines or calculators to gather the necessary information.

Writer Agent: Once all the pieces are in place, the Writer pulls everything together to generate a clear, coherent, and accurate natural language response.

Real-time Monitoring by Master: Throughout the process, the Master keeps track of progress. If something fails—say, a subtask returns incomplete data—it can trigger a rethinking cycle: reflect, re-plan, and re-execute.

This whole system is built around a collaborative "sense–plan–act–generate–reflect" loop, aiming to bring human-like reasoning and flexibility to complex search tasks.
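To make the Planner, Executor, and Writer steps above concrete, here is a minimal sketch of the example query as a three-node DAG. It is only an illustration under stated assumptions, not the paper's implementation: I read the two figures in the query as Emperor Wu of Han (born 156 BC) and Julius Caesar (born 100 BC), the "search tool" is a hypothetical lookup table, the plan is hard-coded rather than generated by an LLM, and all function names are made up for this post.

```python
from graphlib import TopologicalSorter

# Hypothetical stand-in for a search tool: a tiny lookup table of birth years.
# Negative numbers are years BC.
BIRTH_YEARS = {"Emperor Wu of Han": -156, "Julius Caesar": -100}


def search_birth_year(person):
    return BIRTH_YEARS[person]


def plan(query):
    """Planner: return the subtasks and a DAG mapping each task to its prerequisites.

    The plan is hard-coded for this one query; a real Planner would derive it
    from the query text.
    """
    tasks = {
        "wu_birth": lambda results: search_birth_year("Emperor Wu of Han"),
        "caesar_birth": lambda results: search_birth_year("Julius Caesar"),
        "age_gap": lambda results: abs(results["wu_birth"] - results["caesar_birth"]),
    }
    dag = {
        "wu_birth": set(),
        "caesar_birth": set(),
        "age_gap": {"wu_birth", "caesar_birth"},
    }
    return tasks, dag


def execute(tasks, dag):
    """Executor: run every subtask after its prerequisites have finished."""
    results = {}
    for name in TopologicalSorter(dag).static_order():
        results[name] = tasks[name](results)
    return results


def write(results):
    """Writer: turn the collected results into a sentence."""
    wu_first = results["wu_birth"] < results["caesar_birth"]
    elder = "Emperor Wu of Han" if wu_first else "Julius Caesar"
    return f"{elder} was born earlier, by {results['age_gap']} years."


def answer(query):
    tasks, dag = plan(query)
    return write(execute(tasks, dag))


print(answer("Who is elder, Emperor Han-Wu or Emperor Caesar, by how many years?"))
```

The two lookups have no dependency on each other, so a scheduler could run them in parallel. Only the subtraction has to wait, which is exactly the ordering information the DAG encodes.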

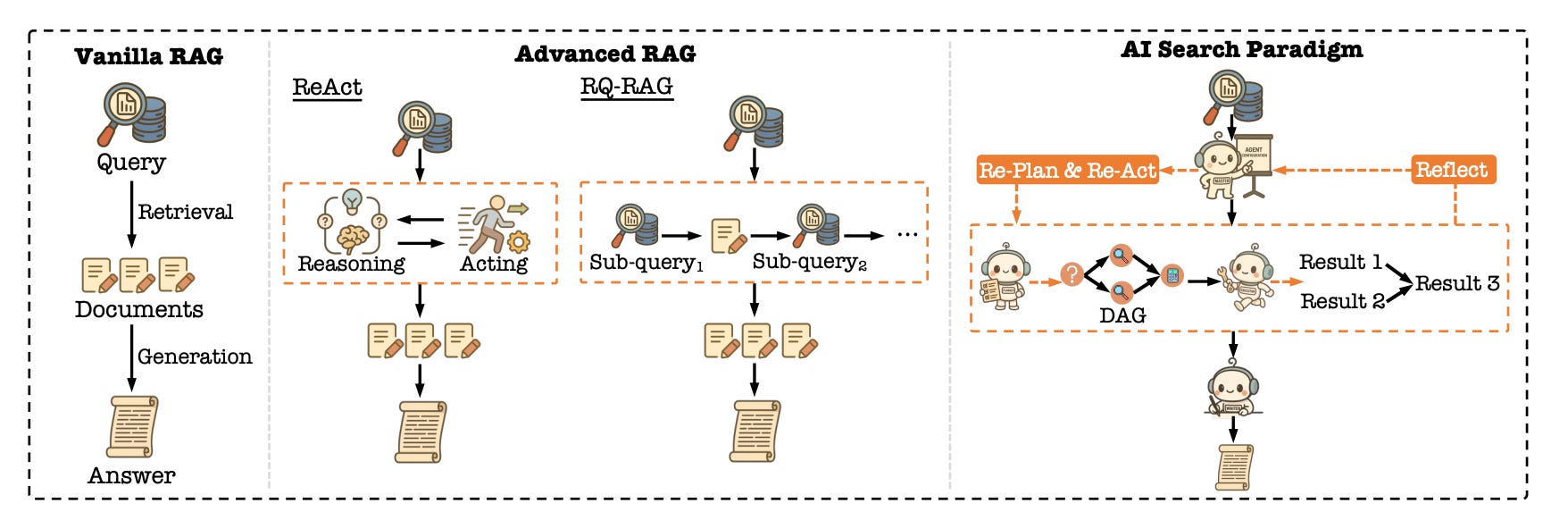

Figure 2 offers a comparison of RAG frameworks.

On the left, we see the standard Vanilla RAG setup — a straightforward one-shot retrieval followed by answer generation. It’s simple and fast, but not always flexible or deep.

In the middle, more advanced approaches like ReAct and RQ-RAG take things a step further. These methods introduce reasoning-action cycles or break down the query into a series of smaller sub-queries, allowing for step-by-step reasoning.

On the right is the AI search paradigm, which introduces a coordinated multi-agent system. A Master agent oversees the entire process. It guides a Planner, who builds a roadmap — essentially a directed acyclic graph (DAG) of sub-tasks — based on the user’s query. The Planner also picks the right tools for each step along the way. A dedicated Executor carries out these tasks using the chosen tools. Throughout the process, the Master keeps track of progress, reflects on results, and triggers re-planning if something’s missing or off track. Finally, a Writer pulls it all together into a final, coherent answer.
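The Master's monitor, reflect, and re-plan behaviour can be sketched as a small loop. This is a toy under my own assumptions, not code from the paper: the two "sources", the rule that routes a failed subtask to a backup source, and every function name here are hypothetical.

```python
def run_with_reflection(make_plan, execute, find_gaps, max_rounds=3):
    """Master loop: plan, execute, reflect; re-plan while the results have gaps."""
    feedback = []
    for round_number in range(1, max_rounds + 1):
        plan = make_plan(feedback)
        results = execute(plan)
        feedback = find_gaps(results)
        if not feedback:
            return results, round_number
    raise RuntimeError(f"still missing {feedback} after {max_rounds} rounds")


# Toy stand-ins: the primary source lacks one fact, the backup has both.
SOURCES = {
    "primary": {"wu_birth": -156},
    "backup": {"wu_birth": -156, "caesar_birth": -100},
}


def make_plan(feedback):
    # Subtasks that failed in the previous round are routed to the backup source.
    return {
        task: ("backup" if task in feedback else "primary")
        for task in ("wu_birth", "caesar_birth")
    }


def execute(plan):
    # A missing fact comes back as None instead of raising.
    return {task: SOURCES[source].get(task) for task, source in plan.items()}


def find_gaps(results):
    # Reflection step: list the subtasks that returned nothing.
    return [task for task, value in results.items() if value is None]


results, rounds = run_with_reflection(make_plan, execute, find_gaps)
print(results, rounds)
```

In this toy run the first round leaves `caesar_birth` empty, so the loop re-plans once and finishes in the second round. The `max_rounds` cap is a design choice I added so that a query nobody can answer fails loudly instead of looping forever.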

Thoughts and Insights

RAG systems have become the mainstream solution in many real-world applications. But most of them still follow a fairly rigid pattern: slicing documents, retrieving chunks, and generating an answer in one go. That works fine for simple queries — but once the task involves multi-step reasoning, things start to break down.

"Towards AI Search Paradigm" takes a hard look at those limitations and proposes something more ambitious: a multi-agent collaboration model inspired by how humans think and solve problems. It’s a big leap — not just from one framework to another, but from using AI as a tool to treating it more like an intelligent agent.

That said, I have a few observations and concerns.

One of the key issues with traditional tool-use pipelines is fragmentation. They're hard to scale and even harder to manage. The proposed MCP framework tackles this head-on, introducing a Kubernetes-style abstraction: a clean interface combined with smart capability scheduling. It’s a strong engineering layer that brings structure and flexibility.

The design includes multiple agents, dynamic tool retrieval, DAG-based task planning, and mechanisms for reflection and re-planning. It’s powerful — but not without trade-offs. These features come at a cost: higher runtime overhead and potential system instability. In environments with high traffic or tight latency constraints, putting this into production would require serious engineering work around compression and reliability.

One notable detail: the Planner agent generates the full DAG structure in a single shot using "chain-of-thought → structured-sketch prompting." It’s efficient, but also fragile: small variations in prompts or language can lead to planning errors or missing steps. The paper suggests using reinforcement learning to fine-tune the Planner, but robust performance on complex real-world tasks still needs more empirical proof.
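Independently of fine-tuning, some single-shot planning errors are structural and cheap to catch before execution. The check below is my own suggestion, not something the paper describes: it assumes the plan arrives as a dictionary mapping each task to its prerequisites, and `validate_plan` and `required_outputs` are hypothetical names.

```python
from graphlib import CycleError, TopologicalSorter


def validate_plan(dag, required_outputs):
    """Return a list of structural problems in a planner-produced DAG (empty if none)."""
    problems = []
    known = set(dag)

    # A dependency that was never defined as a task is a missing step.
    for task, deps in dag.items():
        for dep in sorted(set(deps) - known):
            problems.append(f"{task} depends on undefined task {dep}")

    # The plan must contain the tasks the final answer needs.
    for output in sorted(set(required_outputs) - known):
        problems.append(f"missing required task {output}")

    # Check for cycles, looking only at edges between defined tasks.
    try:
        TopologicalSorter({t: set(d) & known for t, d in dag.items()}).prepare()
    except CycleError:
        problems.append("plan contains a cycle")

    return problems


good = {"wu_birth": set(), "caesar_birth": set(), "age_gap": {"wu_birth", "caesar_birth"}}
dropped_step = {"wu_birth": set(), "age_gap": {"wu_birth", "caesar_birth"}}

print(validate_plan(good, ["age_gap"]))
print(validate_plan(dropped_step, ["age_gap"]))
```

A check like this says nothing about whether the plan is semantically right, only that it is executable. A non-empty result could be fed back to the Planner as the trigger for a re-plan.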

Overall, the combination of LLMs, modular tools, and feedback loops forms a powerful triangle — just like how humans use language, tools, and reflection to solve problems. This kind of architecture has huge potential beyond search.

I wonder whether any systems use this architecture in production and, if so, whether their behavior drifts over time.