Document Layout Analysis (DLA) is crucial for understanding the physical layout and logical structure of documents, and it supports downstream applications such as retrieval-augmented generation (RAG), document summarization, and knowledge extraction.

Traditional methods typically use separate models for sub-tasks such as table/figure detection, text region detection, logical role classification, and reading order prediction. This fragmentation increases system complexity and can let errors from one stage propagate into the next.

This article introduces DLAFormer, a new end-to-end transformer-based approach that integrates all of these sub-tasks into a single model, simplifying the pipeline and improving overall performance.

Problem Definition

A document image contains diverse regions of two kinds: text regions and non-text regions.

A text region is a semantic unit of written content: a group of text lines arranged in a natural reading sequence and assigned a logical label such as paragraph, list/list item, title, section heading, header, footer, footnote, or caption.

Non-text regions typically include graphical elements such as tables, figures, and mathematical formulas. Regions of both kinds are often connected by multiple logical relationships, the most common being reading order.

Accordingly, three types of relationships are defined:

Intra-region Relationship: This relationship groups basic text units, such as text lines, into coherent text regions. The lines within a text region follow a natural reading sequence, and an intra-region relationship links every pair of adjacent lines in the same region. For a text region consisting of a single text line, the relationship is self-referential: the line points to itself.

Inter-region Relationship: These relationships are constructed between all pairs of regions that exhibit logical connections. For instance, an inter-region relationship is established between two adjacent paragraphs and between a table and its corresponding caption or footnote.

Logical Role Relationship: A set of logical role units is defined, one for each logical role, such as caption, section heading, paragraph, and title. Because every text region is assigned a logical role, each text line in the region is linked by a logical role relationship to the unit for that role.
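To make the three relationship types concrete, here is a minimal sketch that turns a toy region annotation into (source, target, type) triples. The `Region` class, the `build_relations` function, the `role:<name>` naming for logical role units, and the use of region ids as the endpoints of inter-region links are all hypothetical simplifications for illustration, not the paper's data format.

```python
from dataclasses import dataclass


@dataclass
class Region:
    """Hypothetical toy annotation: line ids in reading order plus a role."""
    region_id: str
    line_ids: list[str]
    role: str


def build_relations(regions, region_links):
    """Return (source, target, relation_type) triples for one page.

    regions: list of Region objects.
    region_links: (region_id, region_id) pairs with a logical connection,
        e.g. two consecutive paragraphs, or a table and its caption.
    """
    triples = []
    for region in regions:
        lines = region.line_ids
        if len(lines) == 1:
            # Single-line region: the intra-region relation points to itself.
            triples.append((lines[0], lines[0], "intra"))
        else:
            # Link every pair of adjacent lines in reading order.
            for a, b in zip(lines, lines[1:]):
                triples.append((a, b, "intra"))
        # Each line points to the logical role unit for its region's role
        # ("role:<name>" is a naming convention invented for this sketch).
        for line in lines:
            triples.append((line, f"role:{region.role}", "role"))
    # Simplification: inter-region links use region ids as endpoints.
    for src, dst in region_links:
        triples.append((src, dst, "inter"))
    return triples


regions = [
    Region("title", ["l1"], "title"),
    Region("p1", ["l2", "l3", "l4"], "paragraph"),
]
triples = build_relations(regions, [("title", "p1")])
```

With all three relation types expressed as the same kind of triple, they can share one label space, which is the idea the next paragraph builds on.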

By defining these relationships, various DLA sub-tasks (such as text region detection, logical role classification, and reading order prediction) are framed as relation prediction problems. The labels of the different relation prediction tasks are merged into a unified label space, so that a single model can handle these tasks concurrently, as shown in Figure 1.
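Framing region detection and role classification as relation prediction means both can be read back off the predicted links. The sketch below is a hypothetical decoding step, assuming predictions arrive as intra-region line pairs and line-to-role pairs: a union-find pass groups lines into text regions, and a majority vote over line-level roles (an assumption of this sketch, not a step the paper is known to use) picks each region's logical role.

```python
from collections import Counter


def decode_regions(line_ids, intra_edges, role_edges):
    """Recover text regions and their roles from predicted relations.

    line_ids: all text-line ids on the page, in input order.
    intra_edges: predicted (line, line) intra-region pairs; self-pairs allowed.
    role_edges: predicted (line, role_name) logical-role pairs.
    Returns a list of (lines_in_region, role) tuples.
    """
    parent = {line: line for line in line_ids}

    def find(x):
        # Union-find root lookup with path halving.
        while parent[x] != x:
            parent[x] = parent[parent[x]]
            x = parent[x]
        return x

    # Each intra-region edge merges the two lines' groups.
    for a, b in intra_edges:
        parent[find(a)] = find(b)

    groups = {}
    for line in line_ids:
        groups.setdefault(find(line), []).append(line)

    role_of_line = dict(role_edges)
    regions = []
    for lines in groups.values():
        # Majority vote over line-level roles (assumption of this sketch).
        votes = Counter(role_of_line[ln] for ln in lines if ln in role_of_line)
        role = votes.most_common(1)[0][0] if votes else None
        regions.append((lines, role))
    return regions


regions = decode_regions(
    ["l1", "l2", "l3", "l4"],
    intra_edges=[("l1", "l1"), ("l2", "l3"), ("l3", "l4")],
    role_edges=[("l1", "title"), ("l2", "paragraph"),
                ("l3", "paragraph"), ("l4", "caption")],
)
```

Grouping by connected components tolerates a missing self-link on a single-line region, since a self-pair never merges two groups anyway.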

Solution

Overview

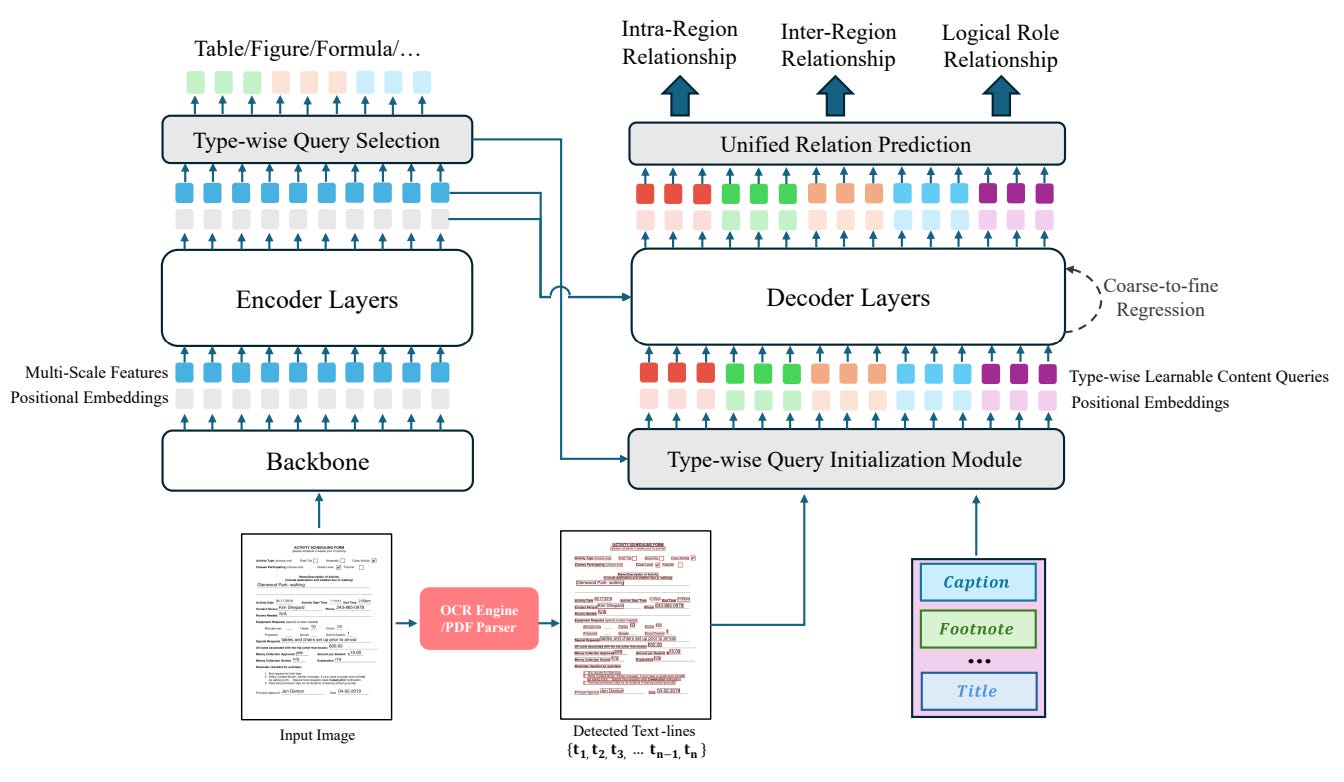

As shown in Figure 2, DLAFormer uses a transformer-based architecture that handles multiple DLA tasks concurrently by treating all of them as relation prediction problems over a single, unified label space.

Replacing separate task-specific models with one model simplifies the architecture and improves the scalability and performance of DLA.

Detailed Workflow

Encoder-Decoder Architecture: The model uses an encoder-decoder architecture inspired by DETR. The encoder processes multi-scale features extracted from the document image, and the decoder refines a set of queries that represent page objects; the relations among them are then predicted.
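The sketch below illustrates two ideas from this step under simplifying assumptions: multi-scale feature maps are flattened into one token sequence, and decoder queries read from those tokens through scaled dot-product cross-attention. It uses dense single-head attention with no learned projections as a stand-in; DETR-family models use learned multi-head (and, in Deformable DETR, deformable) attention, and the function names here are illustrative only.

```python
import math


def flatten_multiscale(feature_maps):
    """Flatten H x W x C feature maps (nested lists) into one token list."""
    tokens = []
    for fmap in feature_maps:      # one map per scale
        for row in fmap:
            tokens.extend(row)     # each cell is a C-dim feature vector
    return tokens


def cross_attention(queries, memory):
    """Single-head scaled dot-product attention from queries to memory tokens.

    Keys and values are the memory tokens themselves (no learned
    projections), which keeps the sketch minimal.
    """
    d = len(memory[0])
    outputs = []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in memory]
        peak = max(scores)  # subtract the max for numerical stability
        weights = [math.exp(s - peak) for s in scores]
        total = sum(weights)
        weights = [w / total for w in weights]
        # Output is the attention-weighted average of the memory tokens.
        outputs.append([sum(w * tok[c] for w, tok in zip(weights, memory)) for c in range(d)])
    return outputs


fine = [[[1.0, 0.0], [0.0, 1.0]], [[1.0, 1.0], [0.0, 0.0]]]  # 2 x 2 x 2
coarse = [[[2.0, 2.0]]]                                       # 1 x 1 x 2
tokens = flatten_multiscale([fine, coarse])
refined = cross_attention([[0.0, 0.0]], tokens)
```

A zero query attends uniformly, so its output is simply the mean of all five tokens from both scales.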

Type-wise Queries: To enhance the physical meaning of content queries, DLAFormer introduces type-wise queries that capture categorical information of diverse page objects. This allows the model to adaptively focus on different regions and tasks.
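One plausible reading of type-wise queries, sketched here as an assumption rather than the paper's confirmed procedure: score encoder tokens per object type, keep the top-k tokens, and initialize each selected token's content query with a learned embedding for its predicted type, so the query carries categorical information from the start. `init_type_wise_queries` and its inputs are hypothetical.

```python
def init_type_wise_queries(token_logits, type_embeddings, k):
    """Select the top-k encoder tokens and give each a type-specific content query.

    token_logits: per-token lists of class scores, one score per object type.
    type_embeddings: dict mapping type index -> learned embedding (hypothetical).
    Returns (token_index, predicted_type, content_query) tuples, best first.
    """
    ranked = []
    for idx, scores in enumerate(token_logits):
        best_type = max(range(len(scores)), key=scores.__getitem__)
        ranked.append((scores[best_type], idx, best_type))
    ranked.sort(key=lambda item: item[0], reverse=True)
    # The content query comes from the predicted type, not from the token itself.
    return [(idx, t, list(type_embeddings[t])) for _, idx, t in ranked[:k]]


queries = init_type_wise_queries(
    token_logits=[[0.1, 0.9], [0.8, 0.2], [0.3, 0.4]],
    type_embeddings={0: [1.0, 0.0], 1: [0.0, 1.0]},
    k=2,
)
```

Tying the content query to a type embedding, instead of a generic learned vector, is what gives each query an explicit category in this sketch.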

Coarse-to-Fine Strategy: The model adopts a coarse-to-fine strategy to accurately identify graphical page objects, improving the precision of detection.
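A common way to realize a coarse-to-fine scheme in DETR-family detectors is the iterative bounding-box refinement used in Deformable DETR: coarse proposals are refined layer by layer, with each decoder layer adding an offset in inverse-sigmoid space. The sketch assumes, without confirmation from the source, that DLAFormer's strategy works along these lines; `refine_boxes` and the per-layer deltas are hypothetical stand-ins for learned box-regression heads.

```python
import math


def inverse_sigmoid(x, eps=1e-5):
    x = min(max(x, eps), 1 - eps)  # clamp to keep the log finite
    return math.log(x / (1 - x))


def sigmoid(x):
    return 1 / (1 + math.exp(-x))


def refine_boxes(coarse_boxes, layer_deltas):
    """Refine normalized (cx, cy, w, h) boxes one decoder layer at a time.

    coarse_boxes: initial proposals with coordinates in (0, 1).
    layer_deltas: per layer, one 4-d offset per box (hypothetical stand-ins
        for each layer's box-regression output).
    Returns the box list after every stage, coarse first.
    """
    boxes = [list(b) for b in coarse_boxes]
    history = [boxes]
    for deltas in layer_deltas:
        # Add offsets in logit space, then map back to (0, 1).
        boxes = [[sigmoid(inverse_sigmoid(v) + d) for v, d in zip(box, delta)]
                 for box, delta in zip(boxes, deltas)]
        history.append(boxes)
    return history


stages = refine_boxes(
    coarse_boxes=[[0.5, 0.5, 0.2, 0.2]],
    layer_deltas=[[[0.0, 0.0, 0.0, 0.0]], [[1.0, 0.0, 0.0, 0.0]]],
)
```

Working in logit space keeps every refined coordinate inside (0, 1) no matter how large a layer's offset is.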

Unified Relation Prediction: By consolidating the labels of different relation prediction tasks into a unified label space, DLAFormer uses a unified relation prediction module to handle these tasks concurrently. This approach reduces cascading errors and improves the efficiency of the training process.
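A minimal sketch of a unified relation head, assuming a pointer-style design: every query (text line, graphical object, or logical role unit) scores all queries as candidate targets, a softmax picks the most likely target, and a small linear classifier labels the pair with a relation type. The projection matrices, `type_weights`, and `predict_relations` are hypothetical; the source does not specify the head's exact form.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def predict_relations(queries, src_proj, tgt_proj, type_weights):
    """Pick each query's most likely relation target and relation type.

    queries: d-dim embeddings for all page objects and role units, in one list.
    src_proj, tgt_proj: hypothetical d x d projection matrices (lists of rows).
    type_weights: hypothetical dict, relation type -> weight vector of length
        2d applied to the concatenated [source, target] query features.
    Returns (source_index, target_index, relation_type, target_prob) tuples.
    """
    srcs = [[dot(row, q) for row in src_proj] for q in queries]
    tgts = [[dot(row, q) for row in tgt_proj] for q in queries]
    results = []
    for i, s in enumerate(srcs):
        scores = [dot(s, t) for t in tgts]  # one score per candidate target
        peak = max(scores)
        exps = [math.exp(v - peak) for v in scores]
        total = sum(exps)
        probs = [e / total for e in exps]
        j = max(range(len(probs)), key=probs.__getitem__)
        pair = queries[i] + queries[j]  # list concatenation: [source, target]
        rel = max(type_weights, key=lambda r: dot(type_weights[r], pair))
        results.append((i, j, rel, probs[j]))
    return results


identity = [[1.0, 0.0], [0.0, 1.0]]
relations = predict_relations(
    queries=[[1.0, 0.0], [3.0, 0.0], [0.0, 1.0]],
    src_proj=identity,
    tgt_proj=identity,
    type_weights={"intra": [0.0, 0.0, 1.0, 0.0], "role": [0.0, 0.0, 0.0, 1.0]},
)
```

In this sketch all relation types share one target distribution and one classifier, so a single pass yields region grouping, role assignment, and reading-order links together.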

Evaluation

Experimental results on two benchmarks, DocLayNet and Comp-HRDoc, demonstrate that DLAFormer outperforms previous methods that use multi-branch or multi-stage architectures. The model achieves superior results in tasks like graphical page object detection, text region detection, logical role classification, and reading order prediction.

Additionally, DLAFormer shows significant improvements in mAP (mean average precision) for categories such as formulas, tables, and figures compared with traditional object detection methods, as shown in Figure 3.

Conclusion

This article presents DLAFormer, an end-to-end transformer model for document layout analysis that integrates multiple DLA tasks into a unified framework. The approach simplifies training, enhances scalability, and improves performance on both the DocLayNet and Comp-HRDoc benchmarks.

This paper suggests that integrating multiple related tasks into a single model can improve both efficiency and performance in complex analysis scenarios. A transformer-based approach to DLA looks promising because it can handle diverse and intricate document layouts. An open challenge is making sure the model scales and adapts to the even wider variety of document types and tasks found in real-world applications.