Welcome to the 36th installment of this glamorous series.

Vivid Description

Tackling complex queries is a lot like trying to find your way out of a maze.

Traditional RAG approaches rely on a single, possibly incomplete map—one round of retrieval—which can easily lead you into a dead end.

CoRAG, on the other hand, works more like a clever explorer. It keeps testing new paths (sub-questions), picks up clues along the way (retrieved information), and adapts its search strategy based on what it has already discovered and the obstacles it encounters (query reformulation). The flexible, step-by-step process allows CoRAG to explore the maze more thoroughly and gives it a much better shot at finding the right exit—the correct answer.

Overview

Open-Source Code: https://github.com/microsoft/LMOps/tree/main/corag

Most traditional RAG methods only retrieve information once before generating a response. But when it comes to complex questions, that single step often isn’t enough—especially if the retrieved content isn’t quite right.

CoRAG (Chain-of-Retrieval Augmented Generation) is a new kind of multi-step RAG method that lets LLMs tackle complex questions more like humans do—by breaking them down step by step and retrieving information along the way.

Unlike traditional RAG, which retrieves just once before generating an answer, CoRAG follows a “retrieve–generate–retrieve again” loop. This dynamic approach significantly boosts accuracy and reliability in multi-hop reasoning and knowledge-intensive tasks.
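To make the loop concrete, here is a minimal Python sketch of a chain-of-retrieval inference loop, assuming the model can be split into a few callables. It is not the LMOps implementation. `chain_of_retrieval`, `next_subquery`, `retrieve`, `answer_subquery`, `final_answer`, the `max_steps` budget, and the convention that returning `None` means "stop" are all hypothetical. In the toy usage, lambdas stand in for the fine-tuned LLM and the retriever.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional, Tuple

Step = Tuple[str, str]  # one (sub-question, sub-answer) pair in the chain


@dataclass
class ChainState:
    question: str
    steps: List[Step] = field(default_factory=list)


def chain_of_retrieval(
    question: str,
    next_subquery: Callable[[ChainState], Optional[str]],  # hypothetical policy: next sub-question or None
    retrieve: Callable[[str], List[str]],                   # hypothetical retriever: query -> passages
    answer_subquery: Callable[[str, List[str]], str],       # hypothetical: answer a sub-question from passages
    final_answer: Callable[[ChainState], str],              # hypothetical: answer the original question from the chain
    max_steps: int = 4,
) -> Tuple[str, ChainState]:
    """Retrieve, generate, retrieve again, until the policy stops or the step budget runs out."""
    state = ChainState(question)
    for _ in range(max_steps):
        sub_q = next_subquery(state)  # decide what to ask next, given the chain so far
        if sub_q is None:             # assumed stop signal
            break
        docs = retrieve(sub_q)        # a fresh retrieval round for this sub-question
        state.steps.append((sub_q, answer_subquery(sub_q, docs)))
    return final_answer(state), state


# Toy stand-ins so the sketch runs end to end.
corpus = {
    "Who directed Inception?": "Christopher Nolan",
    "Where was Christopher Nolan born?": "London",
}
plan = list(corpus)  # scripted sub-questions in place of an LLM policy

answer, chain = chain_of_retrieval(
    "Where was the director of Inception born?",
    next_subquery=lambda s: plan[len(s.steps)] if len(s.steps) < len(plan) else None,
    retrieve=lambda q: [corpus.get(q, "")],
    answer_subquery=lambda q, docs: docs[0],
    final_answer=lambda s: s.steps[-1][1],
)
print(answer)            # London
print(len(chain.steps))  # 2
```

The second sub-question only makes sense once the first has been answered, which is exactly what a single up-front retrieval cannot provide.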

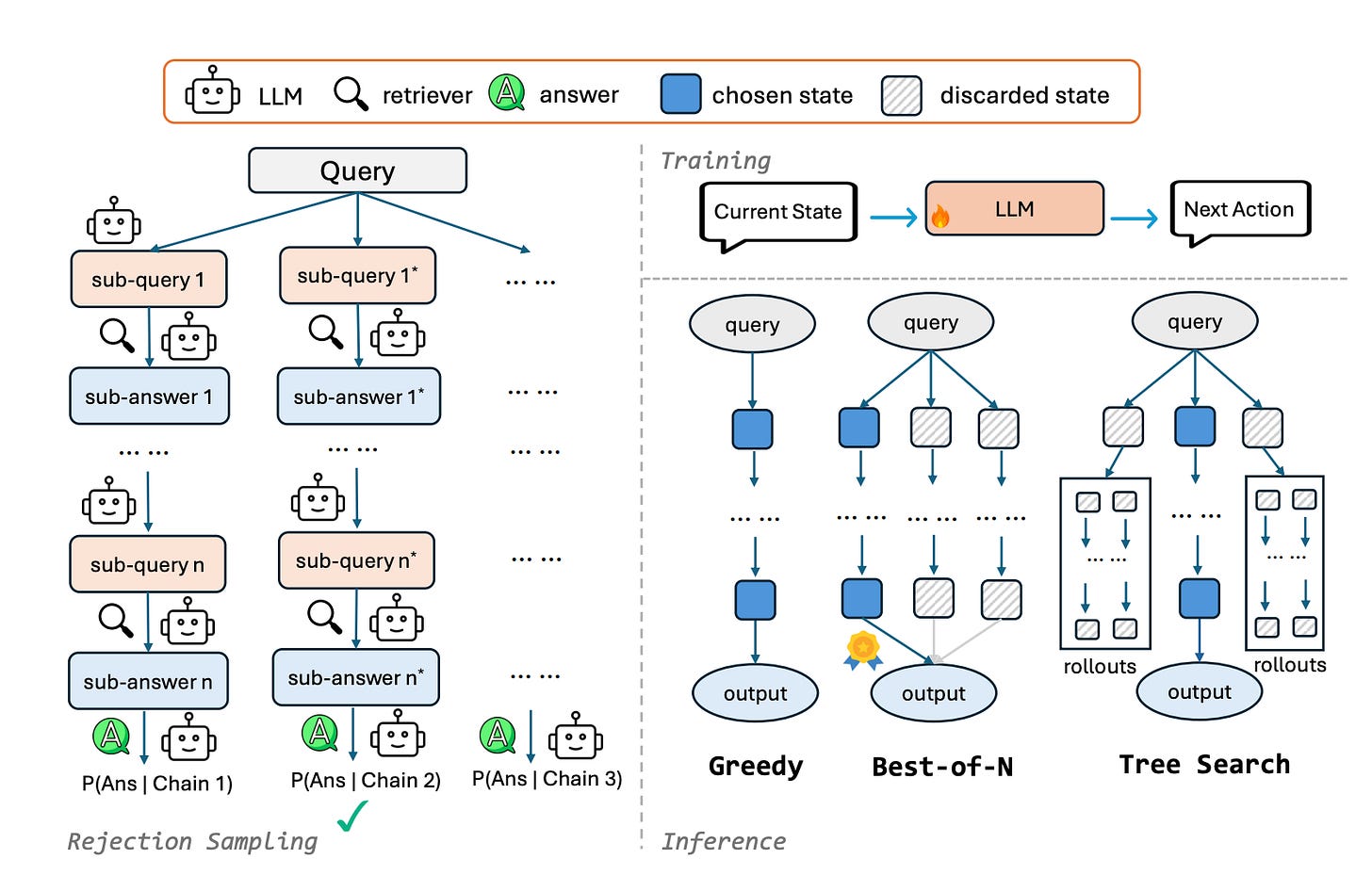

As shown in Figure 1:

Rejection sampling is used to augment QA-only datasets with retrieval chains. Each chain begins with the original question and unfolds into a series of sub-questions and sub-answers.

An open-source LLM is fine-tuned to decide what to do next at each step based on the current state.

At inference time, different decoding strategies, such as greedy decoding, best-of-N sampling, and tree search, can be used to control how much test-time compute is spent.
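As an illustration of trading compute for quality, here is a minimal best-of-N sketch in Python, assuming candidate chains can be sampled and scored independently. The names `best_of_n`, `sample_chain`, `score_chain`, and `toy_score` are hypothetical, and the scoring rule is a placeholder rather than the criterion the paper uses; tree search is not shown.

```python
import itertools
from typing import Callable, List, Tuple

Chain = List[Tuple[str, str]]  # (sub-question, sub-answer) steps


def best_of_n(
    sample_chain: Callable[[], Chain],         # hypothetical: draws one candidate chain
    score_chain: Callable[[Chain], float],     # hypothetical: higher score = better chain
    n: int,
) -> Tuple[Chain, float]:
    """Draw n candidate chains and keep the highest-scoring one.

    n is the test-time compute knob: n=1 with a deterministic sampler
    roughly corresponds to greedy decoding; larger n spends more compute.
    """
    if n < 1:
        raise ValueError("n must be at least 1")
    scored = [(score_chain(c), c) for c in (sample_chain() for _ in range(n))]
    best_score, best_chain = max(scored, key=lambda pair: pair[0])
    return best_chain, best_score


good = [("Who directed Inception?", "Christopher Nolan"),
        ("Where was Christopher Nolan born?", "London")]
weak = [("When was Inception released?", "2010")]


def toy_score(chain: Chain) -> float:
    # Hand-picked scores standing in for a model-based chain score.
    return -0.2 if chain is good else -3.1


pool = itertools.cycle([weak, good])  # deterministic "sampler" for the demo
print(best_of_n(lambda: next(pool), toy_score, n=1)[1])  # -3.1
pool = itertools.cycle([weak, good])
print(best_of_n(lambda: next(pool), toy_score, n=2)[1])  # -0.2
```

With one sample the weak chain is all we get; a second sample costs more compute but surfaces the better chain.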

Thoughts and Insights

CoRAG essentially turns retrieval from a static, one-off step into a dynamic, decision-driven process.

After reading the CoRAG paper, I realized something: the next generation of AI systems may need more than memory and generation. They will also need the ability to actively seek out and revise the information they rely on, on the fly.

The core idea behind CoRAG is to use rejection sampling to create high-quality training data in the form of “retrieval chains.” These chains are built by sampling multiple sub-question sequences and picking the one that leads to the highest likelihood for the final answer.
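The selection step can be sketched in a few lines of Python, assuming we can sample candidate chains for a QA pair and query log P(answer | question, chain) from a model. This is a hypothetical sketch, not the LMOps code: `select_chain`, `sample_chain`, `answer_logprob`, and `num_samples` are illustrative names, and the log-probabilities in the toy usage are made up.

```python
from typing import Callable, List, Tuple

Chain = List[Tuple[str, str]]  # (sub-question, sub-answer) steps


def select_chain(
    question: str,
    answer: str,
    sample_chain: Callable[[str], Chain],                 # hypothetical: samples one chain for the question
    answer_logprob: Callable[[str, Chain, str], float],  # hypothetical: log P(answer | question, chain)
    num_samples: int,
) -> Tuple[Chain, float]:
    """Sample candidate chains and keep the one under which the gold answer is most likely.

    Only the final answer is scored; the intermediate steps are never checked directly.
    """
    best_chain: Chain = []
    best_lp = float("-inf")
    for _ in range(num_samples):
        chain = sample_chain(question)
        lp = answer_logprob(question, chain, answer)
        if lp > best_lp:
            best_chain, best_lp = chain, lp
    return best_chain, best_lp


# Toy usage: two pre-written candidates and made-up log-probabilities.
candidates = iter([
    [("Who directed Inception?", "Christopher Nolan"),
     ("Where was Christopher Nolan born?", "London")],
    [("What is a large city in England?", "London")],  # reaches the answer without real reasoning
])
fake_lp = {"Who directed Inception?": -0.4, "What is a large city in England?": -1.7}

chain, lp = select_chain(
    "Where was the director of Inception born?", "London",
    sample_chain=lambda q: next(candidates),
    answer_logprob=lambda q, c, a: fake_lp[c[0][0]],
    num_samples=2,
)
print(len(chain), lp)  # 2 -0.4
```

Here the sound chain wins, but only because its made-up score is higher; if the shortcut chain had scored better, it would have been kept.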

The catch? Rejection sampling is both costly and unstable. In long-tail scenarios or when the answer is hard to match, it can lead to a few major issues:

Multiple rounds of sampling might still fail to produce a useful chain, making the training data unreliable.

Misleading chains can slip through simply because they make the correct answer highly likely, even when the reasoning behind them is flawed.