Breaking the Modality Gap: Has UniversalRAG Redefined Multimodal RAG? — AI Innovations and Insights 58

Most RAG systems today rely only on text. But real-world questions often need more than just written words. We might need an image, a chart, or even a video to get the full picture.

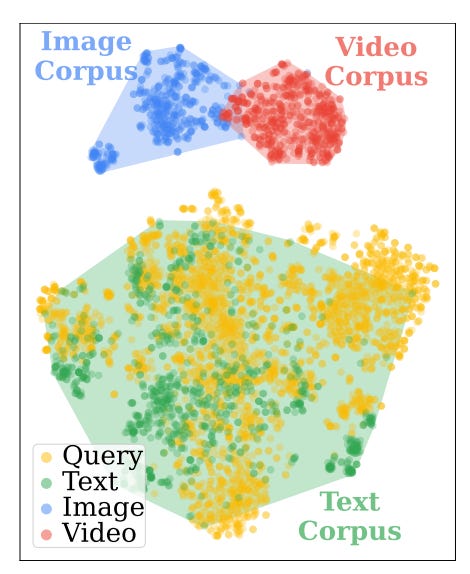

Some newer multimodal RAG methods try to handle this by forcing everything—text, images, and more—into a single shared space. But that shortcut creates a mismatch: the system ends up favoring results from the same modality as the query, rather than what's actually most relevant.
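To make that mismatch concrete, here is a toy sketch. The vectors are invented purely for illustration and do not come from any real encoder: the first two dimensions stand for topic, and the third is a hypothetical "modality offset" that separates text from images in the shared space.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm

# Invented 3-d embeddings. Dimensions 0-1 encode topic (A or B);
# dimension 2 is an assumed modality offset: +1 for text, -1 for image.
query = [1.0, 0.0, 1.0]  # a text query about topic A

corpus = {
    "text_off_topic": [0.0, 1.0, 1.0],   # text about topic B
    "image_on_topic": [1.0, 0.0, -1.0],  # image about topic A
}

scores = {name: cosine(query, vec) for name, vec in corpus.items()}
ranked = sorted(scores, key=scores.get, reverse=True)

print(scores)
print(ranked)
```

In this toy setup, the off-topic text item outranks the on-topic image simply because it shares the query's modality offset. Real embedding spaces are far higher-dimensional, but the same pull toward the query's own modality is what the modality gap describes.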

Overview

UniversalRAG takes a fresh approach. Instead of retrieving from just one type of data, it’s built to search across heterogeneous sources with diverse modalities and granularities.

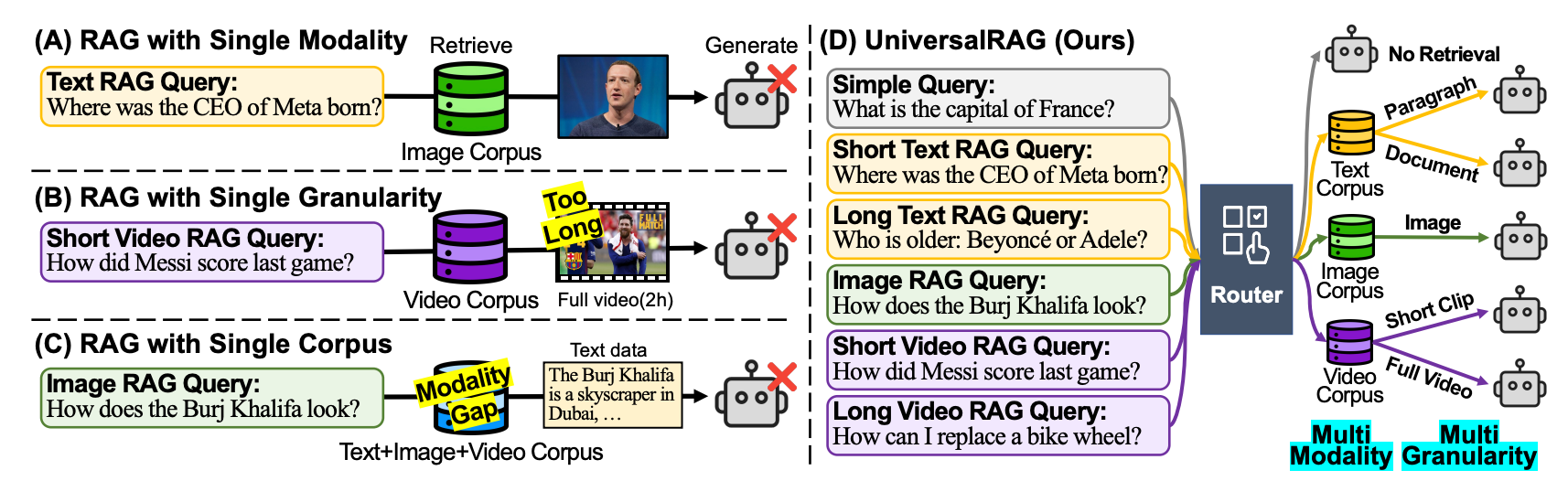

Figure 2 breaks it down nicely:

(A) When RAG is limited to a single modality, it falls short on queries that need other modalities of data.

(B) If it only supports one level of granularity, the results tend to be either too vague or too detailed.

(C) A unified corpus across all modalities leads to "modality gaps"—the system ends up favoring data that matches the query’s modality.

(D) UniversalRAG solves all of this by using a smart routing system that understands both modality and granularity, tapping into the right source at the right level for each query.

Core Ideas

UniversalRAG centers on two key ideas.

Modality-Aware Routing

Instead of forcing everything into a single representation, the system uses a smart "router" to figure out which modality—text, image, video—is best suited to answer the query. Each modality has its own embedding space and retriever, which helps avoid the errors that come from trying to align everything across modalities.

The router can be a trained model (for example, DistilBERT or T5-Large) or a lighter, training-free option that simply prompts a model such as GPT-4o to pick the modality.
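A minimal sketch of the routing idea, under loud assumptions: `keyword_router` is a hypothetical keyword heuristic standing in for the trained or prompted router, and `make_retriever` returns placeholder retrievers rather than real per-modality indexes. Only the overall shape (route first, then query one modality-specific retriever) reflects the description above.

```python
import re
from typing import Callable, Dict, List

Retriever = Callable[[str], List[str]]

# Hypothetical cue words; a real router would be a trained classifier
# or a prompted LLM, not a keyword list.
VIDEO_CUES = {"video", "clip", "scene", "footage"}
IMAGE_CUES = {"image", "photo", "picture", "diagram"}

def keyword_router(query: str) -> str:
    """Stand-in router: returns 'text', 'image', or 'video'."""
    words = set(re.findall(r"[a-z]+", query.lower()))
    if words & VIDEO_CUES:
        return "video"
    if words & IMAGE_CUES:
        return "image"
    return "text"

def make_retriever(modality: str) -> Retriever:
    """Placeholder for a retriever with its own embedding space and index."""
    def retrieve(query: str) -> List[str]:
        return [f"{modality} result for: {query}"]
    return retrieve

# One independent retriever per modality; nothing is shared across them.
RETRIEVERS: Dict[str, Retriever] = {
    m: make_retriever(m) for m in ("text", "image", "video")
}

def routed_retrieve(query: str,
                    router: Callable[[str], str] = keyword_router) -> List[str]:
    """Ask the router for a modality, then query only that retriever."""
    modality = router(query)
    return RETRIEVERS[modality](query)

print(routed_retrieve("What does the Eiffel Tower photo show?"))
print(routed_retrieve("Who wrote Hamlet?"))
```

Because `routed_retrieve` takes the router as a parameter, swapping the heuristic for a fine-tuned classifier or an LLM prompt would not change the retrieval side at all.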

Granularity-Aware Retrieval

Once the right modality is chosen, the system goes a step further by breaking the data down by granularity.

For example, text data might be split into paragraphs and full documents; video data could be divided into short clips and entire videos.

The idea is to match the query to the right level of detail—quick factual questions can be answered with a paragraph, while complex, multi-step reasoning might require digging into a full document.
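Here is a rough sketch of that second step. The cue list and the `pick_granularity` helper are hypothetical, chosen only to show the shape of the decision; treating images as having a single level is also an assumption of this sketch.

```python
from typing import Dict, Tuple

# (fine, coarse) levels per modality, following the examples above.
GRANULARITIES: Dict[str, Tuple[str, str]] = {
    "text": ("paragraph", "document"),
    "video": ("clip", "video"),
}

# Hypothetical cues hinting that a query needs broader context.
COARSE_CUES = ("summarize", "overall", "compare", "step by step")

def pick_granularity(query: str, modality: str) -> str:
    """Choose a retrieval level for an already-selected modality."""
    levels = GRANULARITIES.get(modality)
    if levels is None:
        # Modalities without multiple levels in this sketch (e.g. image).
        return modality
    fine, coarse = levels
    q = query.lower()
    return coarse if any(cue in q for cue in COARSE_CUES) else fine

print(pick_granularity("When was the bridge built?", "text"))
print(pick_granularity("Summarize the overall argument of the report", "text"))
print(pick_granularity("Which moment shows the goal?", "video"))
```

A short factual question falls through to the fine level, while a query with a broad-context cue is sent to the coarse one. In practice this decision would be made by the same kind of trained or prompted router that picks the modality, not by a keyword list.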

Thoughts and Insights

The modality gap is a well-known and persistent challenge in today’s multimodal RAG systems. It is a structural issue that seriously hurts retrieval performance.

UniversalRAG tackles it head-on by moving away from the traditional “one-size-fits-all” unified retrieval approach and adopting a more modular, modality-aware architecture. The design philosophy behind UniversalRAG is forward-looking.

But I have one concern.

Right now, UniversalRAG feels more like “pick one modality and go” than “pull insights from multiple modalities and combine them.” That’s a limitation. For cross-modal questions like “What is this person in the image saying?” or “What do the reviews say about this product in the photo?”, you need text and images to work together. UniversalRAG doesn’t currently support joint retrieval or cross-modal reasoning.

If future versions could pull evidence from multiple modalities simultaneously—and reason across them—that would be even better.