An Innovative Compression Method with Advanced Content Restructuring

In my previous article, I introduced some cutting-edge algorithms for prompt compression. Today, let's explore a new algorithm.

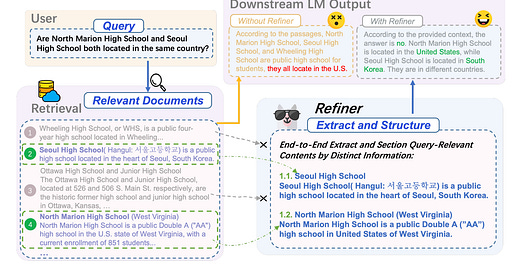

A common approach in Retrieval-Augmented Generation (RAG) is to retrieve semantically relevant document chunks and feed them into the Large Language Model (LLM) for question-answering tasks. However, this method has two main shortcomings:

Scattered Information: When the necessary information is spread across multiple chunks, LLMs often fail to synthesize it effectively. This is made worse by the "lost-in-the-middle" problem, where LLMs tend to overlook information placed in the middle of a long context.

Contradictory or Irrelevant Content: Retrieved chunks may include contradictory or irrelevant information that distracts the LLM from generating accurate answers.

For example, consider an LLM tasked with summarizing a book. If it receives the chapters in a jumbled order, the resulting summary will likely be confusing. Similarly, when the relevant facts are scattered across disconnected chunks, the LLM struggles to identify and connect them.
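To make these shortcomings concrete, here is a minimal sketch of the retrieve-then-read baseline described above. It is an illustration under stated assumptions, not the method this article covers: a toy bag-of-words cosine similarity stands in for a real embedding model, and the corpus and the helpers `retrieve` and `build_prompt` are hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    # Lowercase and keep alphanumeric word tokens only (no stemming, no stopword removal).
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two term-frequency vectors.
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, chunks: list[str], k: int) -> list[int]:
    # Score every chunk against the query and return the indices of the top-k
    # chunks, ordered by similarity (best first), not by document position.
    q = Counter(tokenize(query))
    scores = [cosine(q, Counter(tokenize(c))) for c in chunks]
    ranked = sorted(range(len(chunks)), key=lambda i: scores[i], reverse=True)
    return ranked[:k]


def build_prompt(query: str, chunks: list[str], indices: list[int]) -> str:
    # Naively concatenate the retrieved chunks in ranking order.
    context = "\n".join(f"[chunk {i}] {chunks[i]}" for i in indices)
    return f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"


# Hypothetical toy corpus: a full answer needs both chunk 4 and chunk 2.
chunks = [
    "Alice founded the company in 2010.",
    "The weather that year was unusually warm.",
    "In 2015 the company moved its headquarters to Berlin.",
    "Berlin offices later hosted the research lab.",
    "The company was founded in Paris.",
]
query = "Where was the company founded and where did it move?"

top = retrieve(query, chunks, k=3)
print(top)  # [4, 0, 1]
print(build_prompt(query, chunks, top))
```

With `k=3`, the weather chunk, which matches the query only on function words such as "the" and "was", outranks chunk 2, which holds the second half of the answer (the toy tokenizer does not match "move" with "moved"). The chunks also reach the prompt in score order rather than document order, echoing the jumbled-chapters example.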

Subscribe to AI Exploration Journey to keep reading this post and get 7 days of free access to the full post archives.