
This article is the 17th in this promising series. Today, we will explore an exciting topic: DeepSeek-V3, a revolutionary, low-cost, high-performance LLM.

DeepSeek-V3 is a powerful open-source LLM that came out in December 2024.

I recently read its technical report, which is quite lengthy at over 50 pages. In this article, I'll provide a brief summary, and share some insights and thoughts.

Parameters

DeepSeek-V3 is a Mixture-of-Experts (MoE) language model with 671 billion total parameters, of which 37 billion are activated per token. It is designed for efficient inference and cost-effective training.

Training Cost

Then there's the cost that everyone is concerned about.

DeepSeek-V3's pre-training used 14.8T tokens, taking 180K H800 GPU hours per trillion tokens. With 2048 H800 GPUs, it took 3.7 days for one trillion tokens.

Pre-training finished in less than two months. The full training (including context extension and post-training) consumed 2.788M GPU hours. At an assumed rental price of $2 per GPU hour, the total training cost comes to $5.576M.
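To make the arithmetic behind these numbers easy to check, here is a small sketch. The 119K and 5K GPU-hour figures for context extension and post-training are the stage breakdown given in the technical report; the $2 price is a rental assumption, not a measured cost.

```python
# Figures quoted in the article and the technical report.
GPU_HOURS_PER_TRILLION_TOKENS = 180_000  # H800 GPU hours per 1T tokens
PRETRAIN_TOKENS_TRILLIONS = 14.8
CLUSTER_GPUS = 2048
PRICE_PER_GPU_HOUR_USD = 2.0             # assumed rental price

# Stage breakdown from the technical report (not part of pre-training).
CONTEXT_EXTENSION_GPU_HOURS = 119_000
POST_TRAINING_GPU_HOURS = 5_000


def training_cost_summary():
    """Recompute the headline numbers from the per-trillion-token cost."""
    days_per_trillion = GPU_HOURS_PER_TRILLION_TOKENS / CLUSTER_GPUS / 24
    pretrain_hours = GPU_HOURS_PER_TRILLION_TOKENS * PRETRAIN_TOKENS_TRILLIONS
    total_hours = (pretrain_hours
                   + CONTEXT_EXTENSION_GPU_HOURS
                   + POST_TRAINING_GPU_HOURS)
    return {
        "days_per_trillion_tokens": days_per_trillion,
        "pretrain_gpu_hours": pretrain_hours,
        "pretrain_days": pretrain_hours / CLUSTER_GPUS / 24,
        "total_gpu_hours": total_hours,
        "total_cost_usd": total_hours * PRICE_PER_GPU_HOUR_USD,
    }


if __name__ == "__main__":
    for name, value in training_cost_summary().items():
        print(f"{name}: {value:,.1f}")
```

Note that the dollar figure covers only the GPU time of the official training run at the assumed price; it says nothing about research, ablations, or data costs.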

For a model of this scale, the cost is remarkably low. This stems from the optimization techniques described below, including FP8 training and other efficiency measures.

Model Architecture

DeepSeek-V3 uses Multi-head Latent Attention (MLA) and DeepSeekMoE architecture, which were validated in DeepSeek-V2 for efficiency and strong performance.

Multi-head Latent Attention (MLA): By using low-rank compression, the size of the key-value (KV) cache is reduced during inference, while maintaining performance comparable to standard Multi-Head Attention (MHA).
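To illustrate the low-rank idea, here is a minimal NumPy sketch. The sizes and weight names (`W_down`, `W_up_k`, `W_up_v`) are toy values I made up for illustration, not the model's real dimensions, and the sketch leaves out positional encoding and the attention computation itself. The point is only that the cache stores a small latent vector per token instead of full per-head keys and values.

```python
import numpy as np

rng = np.random.default_rng(0)

# Toy sizes, chosen for illustration only.
D_MODEL, N_HEADS, D_HEAD, D_LATENT = 64, 4, 16, 8

# Hypothetical projection matrices (randomly initialised here).
W_down = rng.standard_normal((D_MODEL, D_LATENT)) * 0.1           # compress
W_up_k = rng.standard_normal((D_LATENT, N_HEADS * D_HEAD)) * 0.1  # rebuild keys
W_up_v = rng.standard_normal((D_LATENT, N_HEADS * D_HEAD)) * 0.1  # rebuild values


def compress_kv(hidden):
    """(seq, D_MODEL) -> (seq, D_LATENT). Only this latent is cached."""
    return hidden @ W_down


def expand_kv(latent):
    """Rebuild per-head keys and values from the cached latent."""
    seq = latent.shape[0]
    k = (latent @ W_up_k).reshape(seq, N_HEADS, D_HEAD)
    v = (latent @ W_up_v).reshape(seq, N_HEADS, D_HEAD)
    return k, v


hidden = rng.standard_normal((10, D_MODEL))  # 10 tokens
latent_cache = compress_kv(hidden)
k, v = expand_kv(latent_cache)

# Cached numbers per token: full keys + values vs. the shared latent.
mha_cache_per_token = 2 * N_HEADS * D_HEAD
mla_cache_per_token = D_LATENT
print(mha_cache_per_token, mla_cache_per_token)
```

With these toy sizes the per-token cache shrinks from 128 numbers to 8; the actual ratio in DeepSeek-V3 depends on its real dimensions.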

DeepSeekMoE: Combines shared and routed experts to make better use of model parameters. It also introduces an auxiliary-loss-free load balancing strategy, which keeps the load across experts balanced without the performance degradation that auxiliary balancing losses can cause.
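As I understand the report, the auxiliary-loss-free strategy adds a per-expert bias to the routing scores when picking the top-K experts, and nudges that bias down for overloaded experts and up for underloaded ones after each step. Below is a rough simulation of that idea. The function names, batch sizes, skew, and update speed `gamma` are all my own assumptions for the demo.

```python
import numpy as np


def route(scores, bias, top_k):
    """Pick top_k experts per token using biased scores.

    The bias only affects which experts are selected; the gate weights
    are computed from the original, unbiased scores.
    """
    chosen = np.argsort(-(scores + bias), axis=1)[:, :top_k]
    picked = np.take_along_axis(scores, chosen, axis=1)
    gates = picked / picked.sum(axis=1, keepdims=True)
    return chosen, gates


def update_bias(bias, chosen, gamma):
    """Lower the bias of overloaded experts, raise it for underloaded ones."""
    load = np.bincount(chosen.ravel(), minlength=bias.size)
    return bias - gamma * np.sign(load - load.mean())


def simulate(steps=200, n_tokens=512, n_experts=8, top_k=2,
             gamma=0.01, seed=0):
    rng = np.random.default_rng(seed)
    skew = np.linspace(2.0, 0.0, n_experts)  # expert 0 is naturally popular
    bias = np.zeros(n_experts)
    max_loads = []
    for _ in range(steps):
        logits = rng.standard_normal((n_tokens, n_experts)) + skew
        scores = 1.0 / (1.0 + np.exp(-logits))  # sigmoid affinities
        chosen, _ = route(scores, bias, top_k)
        load = np.bincount(chosen.ravel(), minlength=n_experts)
        max_loads.append(int(load.max()))
        bias = update_bias(bias, chosen, gamma)
    return max_loads, bias


if __name__ == "__main__":
    max_loads, bias = simulate()
    print("busiest expert load, first step:", max_loads[0])
    print("busiest expert load, last step: ", max_loads[-1])
    print("learned bias:", np.round(bias, 2))
```

No gradient flows through the bias, so balancing does not compete with the language-modeling loss, which is the main appeal of this design.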

Pre-Training

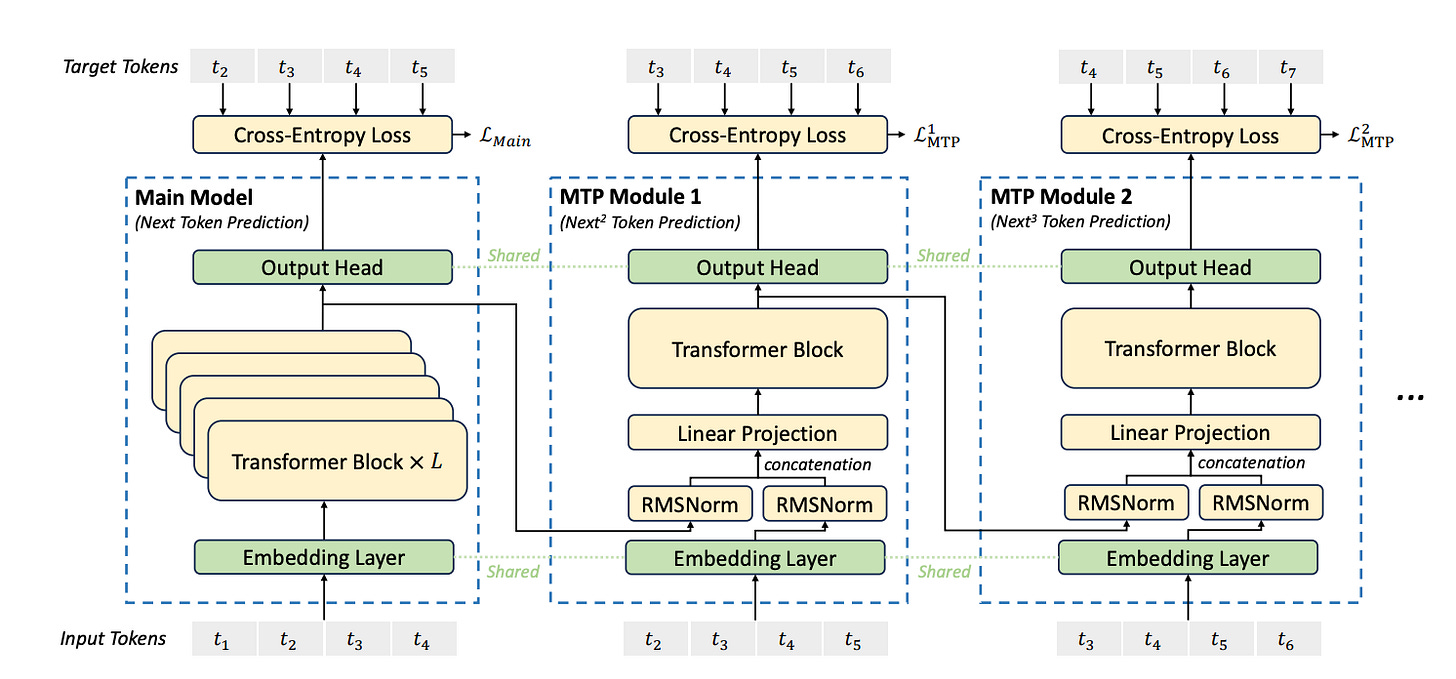

New Training Objective: Multi-Token Prediction (MTP)

The core idea of MTP is that during training, the model not only predicts the next token (like traditional language models), but also predicts several upcoming tokens in the sequence, thereby increasing training signal density and improving data efficiency.

MTP modules share embedding and output layer parameters during training, reducing memory usage, and can be used for speculative decoding during inference to accelerate generation.
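To show where the extra training signal comes from, here is a tiny sketch that lists the prediction targets at each depth. The function name and the `depth` parameter are my own; the real MTP modules are additional Transformer blocks, and this sketch only enumerates which token each position is asked to predict.

```python
def mtp_targets(tokens, depth):
    """Build (position, target_token) pairs for each prediction depth.

    Depth 0 is ordinary next-token prediction; depth k asks position i
    to predict the token at i + 1 + k.
    """
    targets = {}
    for k in range(depth + 1):
        targets[k] = [(i, tokens[i + 1 + k])
                      for i in range(len(tokens) - 1 - k)]
    return targets


if __name__ == "__main__":
    sentence = ["The", "cat", "sat", "on", "mats"]
    for k, pairs in mtp_targets(sentence, depth=1).items():
        print(f"depth {k}: {pairs}")
```

With one extra depth, a five-token sequence yields seven prediction targets instead of four, which is the "denser training signal" in concrete terms.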

Low-Precision Training: FP8 Framework

DeepSeek-V3 uses fine-grained quantization (such as grouping activation values into 1x128 tiles and weights into 128x128 blocks) to overcome the limitations of FP8’s dynamic range.

As far as I recall, this is the first time FP8 has been applied so extensively in a well-known LLM.

The framework also applies high-precision accumulation on CUDA Cores. Additionally, it stores activations and optimizer states in low-precision formats (FP8 for cached activations, BF16 for optimizer states), which greatly reduces memory and communication overhead.
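To see why the 1x128 tile grouping mentioned above helps, here is a small NumPy simulation. It is only a numerical imitation: `fake_e4m3` is a helper I wrote that rounds float64 values onto a simplified E4M3 grid (3 mantissa bits, maximum 448), and no real FP8 kernels are involved. It compares one scale for the whole tensor against one scale per 1x128 tile when a single outlier is present; the 128x128 weight blocks are not covered here.

```python
import numpy as np

E4M3_MAX = 448.0  # largest finite value in the FP8 E4M3 format


def fake_e4m3(x):
    """Round values onto a simplified E4M3 grid (imitation, not real FP8)."""
    x = np.clip(x, -E4M3_MAX, E4M3_MAX)
    _, exp = np.frexp(x)           # |x| lies in [2**(exp-1), 2**exp)
    exp = np.maximum(exp, -5)      # below 2**-6 the spacing stays at 2**-9
    step = np.ldexp(1.0, exp - 4)  # gap between neighbouring grid values
    return np.round(x / step) * step


def quantize_per_tensor(x):
    """One scaling factor for the whole tensor."""
    scale = np.abs(x).max() / E4M3_MAX
    return fake_e4m3(x / scale) * scale


def quantize_tiles(x, tile=128):
    """One scaling factor per 1 x `tile` group along the last axis."""
    rows, cols = x.shape
    tiles = x.reshape(rows, cols // tile, tile)
    scale = np.abs(tiles).max(axis=-1, keepdims=True) / E4M3_MAX
    scale = np.where(scale == 0, 1.0, scale)  # guard all-zero tiles
    return (fake_e4m3(tiles / scale) * scale).reshape(rows, cols)


rng = np.random.default_rng(0)
x = rng.standard_normal((4, 512)) * 0.01  # small activations...
x[0, 0] = 1000.0                          # ...plus one large outlier

err_tensor = np.abs(quantize_per_tensor(x) - x).mean()
err_tiles = np.abs(quantize_tiles(x) - x).mean()
print(f"per-tensor scale: mean abs error {err_tensor:.6f}")
print(f"1x128 tiles:      mean abs error {err_tiles:.6f}")
```

With a single scale, the outlier pushes every small value toward the bottom of the representable range; with per-tile scales, only the one tile containing the outlier pays that price.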

Training Efficiency Optimization

DeepSeek-V3 uses the DualPipe algorithm, an efficient pipeline parallelism technique that reduces pipeline bubbles and hides most of the communication overhead by overlapping it with computation during training. This ensures that as the model scales, it can still use fine-grained experts across nodes and maintain near-zero all-to-all communication costs, as long as the computation-to-communication ratio stays constant.

For infrastructure optimization, DeepSeek-V3 uses InfiniBand (IB) for cross-node communication and NVLink for intra-node communication, boosting the efficiency of large-scale distributed training. It also customizes all-to-all communication kernels across nodes to reduce latency.

Hybrid Parallel Training Framework

DeepSeek-V3 uses a hybrid architecture that combines 16-way Pipeline Parallelism (PP), 64-way Expert Parallelism (EP), and ZeRO-1 Data Parallelism (DP) to improve distributed training efficiency.

The training framework also carefully optimizes memory usage, removing the need for costly Tensor Parallelism (TP), which helps make large-scale training more affordable.

Long-Context Capabilities

After the pre-training phase, DeepSeek-V3 uses YaRN for context expansion and undergoes two additional training stages. During these stages, the context window is gradually extended from 4K to 32K, and then from 32K to 128K.

Post-Training

The post-training phase is carried out in two steps:

First, the model is fine-tuned with high-quality data using Supervised Fine-Tuning (SFT) to better align with human preferences and application needs.

Then, Reinforcement Learning (RL) is applied, using both rule-based and model-based reward models together with Group Relative Policy Optimization (GRPO) to further improve the model's generation capabilities.

Additionally, knowledge distillation is used to distill reasoning abilities from the DeepSeek-R1 series to DeepSeek-V3, integrating long Chain-of-Thought (CoT) into the model.
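The "group relative" part of GRPO can be sketched in a few lines: several responses are sampled for the same prompt, and each response's advantage is its reward normalized by the group's mean and standard deviation, so no separate critic model is needed. The function name, the use of the population standard deviation, and the small `eps` term are my own choices for this sketch; the full objective also includes a clipped policy ratio and a KL penalty, which are not shown.

```python
from statistics import mean, pstdev


def group_relative_advantages(rewards, eps=1e-8):
    """Normalise each reward against the other samples in its group."""
    mu = mean(rewards)
    sigma = pstdev(rewards)
    # eps (an assumption of this sketch) avoids division by zero when
    # every response in the group receives the same reward.
    return [(r - mu) / (sigma + eps) for r in rewards]


if __name__ == "__main__":
    # Four sampled answers to one prompt, scored by a rule-based checker.
    print(group_relative_advantages([1.0, 0.0, 0.0, 1.0]))
```

Responses that beat the group average get a positive advantage and are reinforced; those below it are pushed down.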

Inference

LLM inference usually runs in two stages: pre-filling, which processes the prompt, and decoding, which generates output tokens one at a time.

In the pre-filling stage, DeepSeek-V3 operates on a minimum unit of 4 nodes (32 GPUs), leveraging Tensor Parallelism (TP) with Sequence Parallelism (SP), Data Parallelism (DP), and Expert Parallelism (EP) strategies to boost computational efficiency. The MoE module uses 32-way Expert Parallelism (EP32) and combines InfiniBand and NVLink to enable fast cross-node and intra-node communication.

In the decoding stage, DeepSeek-V3 expands to 40 nodes (320 GPUs) and allocates GPUs specifically to handle redundant and shared experts, optimizing efficiency through point-to-point communication and IBGDA technology.

To ensure load balancing, the model uses a redundant expert strategy that dynamically adjusts the distribution of experts.

This architecture ensures efficient inference with high throughput, maintaining both performance and stability.
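The redundant expert strategy mentioned above can be pictured with a toy planner. This is purely my own illustration, not the deployment logic from the report: it duplicates the most heavily loaded experts, assumes each copy then takes half of that expert's traffic, and places replicas greedily on the least loaded GPU. All names and numbers are hypothetical.

```python
import heapq


def plan_redundant_experts(expert_load, n_redundant, n_gpus):
    """Return a list of (gpu_load, gpu_id, hosted_experts), one per GPU."""
    # 1. Duplicate the hottest experts; each copy serves half the load.
    hottest = sorted(expert_load, key=expert_load.get,
                     reverse=True)[:n_redundant]
    replicas = []
    for expert, load in expert_load.items():
        if expert in hottest:
            replicas += [(load / 2, expert), (load / 2, expert)]
        else:
            replicas.append((load, expert))

    # 2. Greedy placement: heaviest replica first, onto the lightest GPU.
    #    (A real planner would also keep copies of one expert apart.)
    gpus = [(0.0, g, []) for g in range(n_gpus)]
    heapq.heapify(gpus)
    for load, expert in sorted(replicas, reverse=True):
        gpu_load, g, hosted = heapq.heappop(gpus)
        hosted.append(expert)
        heapq.heappush(gpus, (gpu_load + load, g, hosted))
    return sorted(gpus, key=lambda entry: entry[1])


if __name__ == "__main__":
    observed = {0: 90, 1: 30, 2: 30, 3: 30}  # expert id -> observed load
    for n in (0, 1):
        plan = plan_redundant_experts(observed, n_redundant=n, n_gpus=4)
        print(f"{n} redundant expert(s): busiest GPU load =",
              max(load for load, _, _ in plan))
```

In this toy case, duplicating the single hot expert lowers the busiest GPU's load from 90 to 60, which is the kind of effect the redundancy is meant to achieve.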

Evaluation

DeepSeek-V3 achieves state-of-the-art performance among open-source models in various benchmarks, with results comparable to leading closed-source models like GPT-4o and Claude-Sonnet-3.5.

For knowledge capabilities, DeepSeek-V3 outperforms all other open-source models in knowledge benchmarks like MMLU and GPQA and approaches closed-source models like GPT-4o and Claude-Sonnet-3.5.

For math reasoning and coding, DeepSeek-V3 excels in math benchmarks (MATH-500) and coding tasks (e.g., LiveCodeBench), becoming a top model in these domains.

For multilingual capabilities, DeepSeek-V3 shows strong performance in Chinese factual knowledge, surpassing even GPT-4o and Claude-Sonnet-3.5.

Commentary

Overall, DeepSeek-V3 stands as one of the most advanced open-source LLMs, with innovations spanning from architecture design to training and deployment.

Below, I will share some thoughts and concerns about DeepSeek-V3.

Disaster Recovery in Large-Scale Distributed Inference Architecture: DeepSeek-V3's redundant experts and dynamic routing mechanism focus on load balancing rather than comprehensive disaster recovery optimization. For example, while redundant experts can help share the workload, if a rank fails (such as multiple GPUs going down simultaneously), the redundant resources may not be enough to cover all the failed tasks.

Training Stability: While FP8 low-precision computation significantly reduces memory and communication costs, its narrow dynamic range (especially with the E4M3 format) can introduce errors during training. The tile- and block-wise quantization methods help mitigate some of these issues, but I still have some doubts about how much this quantization approach will impact training stability.

Quality of Training Data: OpenAI invested significant human and material resources in data labeling. In later LLMs, it’s possible that some of the training data may be generated by ChatGPT. If this becomes a large portion of the data, it could lead to issues like data distribution imbalance, and the outputs might lack depth or creativity.

Finally, if you’re interested in the series, feel free to check out my other articles.