Advanced RAG 06: Exploring Query Rewriting

A key technique for aligning the semantics of queries and documents

In Retrieval Augmented Generation (RAG), we often encounter issues with users' original queries, such as inaccurate wording or a lack of semantic information. For instance, a query like “The NBA champion of 2020 is the Los Angeles Lakers! Tell me what is langchain framework?” could lead the LLM to produce incorrect or unanswerable responses if it is used directly for retrieval.

Consequently, it is essential to align the semantic space of user queries with that of documents. Query rewriting can effectively address this problem. Its role within RAG is depicted in Figure 1:

In terms of position, query rewriting is a pre-retrieval method. Note that this diagram only roughly illustrates where query rewriting sits in RAG; in the following sections, we will see that some algorithms refine this process.

Query rewriting is a key technique for aligning the semantics of queries and documents. For instance:

Hypothetical Document Embeddings (HyDE) aligns the semantic space of the query and document through hypothetical documents.

Rewrite-Retrieve-Read proposes a framework that departs from the traditional retrieve-then-read order by putting query rewriting first.

Step-Back Prompting allows the LLM to perform abstract reasoning and retrieval based on high-level concepts.

Query2Doc generates pseudo-documents by few-shot prompting LLMs, then merges them with the original query to construct a new query.

ITER-RETGEN combines the output of the previous generation with the query, retrieves relevant documents, and generates new results. This process is repeated multiple times to produce the final result.
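The ITER-RETGEN loop described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the paper's implementation: `retrieve` and `generate` are hypothetical callables standing in for a real retriever and LLM, and each round's retrieval query is formed by simply concatenating the previous output with the original query.

```python
from typing import Callable, List


def iter_retgen(
    query: str,
    retrieve: Callable[[str], List[str]],       # hypothetical retriever
    generate: Callable[[str, List[str]], str],  # hypothetical LLM call
    iterations: int = 2,
) -> str:
    """Alternate retrieval and generation, feeding each answer into the next retrieval."""
    if iterations < 1:
        raise ValueError("iterations must be at least 1")
    previous_output = ""
    for _ in range(iterations):
        # Concatenate the previous generation with the original query; on the
        # first round there is no previous output, so only the query is used.
        retrieval_query = f"{previous_output} {query}".strip()
        docs = retrieve(retrieval_query)
        # Generate a new answer to the original query from the retrieved docs.
        previous_output = generate(query, docs)
    return previous_output
```

Keeping `retrieve` and `generate` as parameters makes the control flow easy to test with stubs before wiring in a real vector store and LLM.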

Let’s delve into the details of these methods.

Hypothetical Document Embeddings (HyDE)

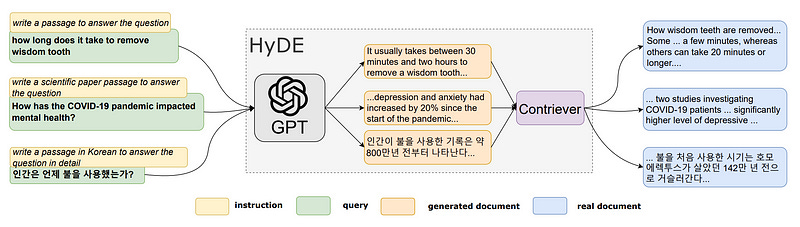

The paper “Precise Zero-Shot Dense Retrieval without Relevance Labels” proposes a method based on Hypothetical Document Embeddings (HyDE); its main process is depicted in Figure 2.

The process is mainly divided into four steps:

1. Generate N hypothetical documents based on the query using the LLM. These generated documents may not be factual and could contain errors, but they should resemble a relevant document. The purpose of this step is to interpret the user’s query through the LLM.

2. Feed each generated hypothetical document into an encoder, mapping it to a dense vector $f(d_k)$. The encoder is believed to act as a filter, removing noise from the hypothetical document. Here, $d_k$ represents the k-th generated document, and $f$ denotes the encoder operation.

3. Compute the average of the N vectors:

$$v = \frac{1}{N}\sum_{k=1}^{N} f(d_k)$$

We can also consider the original query q as a possible hypothesis:

$$v = \frac{1}{N+1}\left[\sum_{k=1}^{N} f(d_k) + f(q)\right]$$

4. Use vector v to retrieve answers from the document library. As established in step 3, this vector holds information from both the user’s query and the desired answer pattern, which can improve recall.
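The four steps above can be sketched in plain Python. This is a toy under loud assumptions: `generate` is a hypothetical wrapper around an LLM, and `bow_encode` with its fixed `VOCAB` is a bag-of-words stand-in for the trained dense encoder $f$ (HyDE itself uses a contrastively trained encoder). Only the averaging and the cosine ranking mirror the method.

```python
import math
from typing import Callable, Dict, List

Vector = List[float]

# Hypothetical toy vocabulary; a real system would use a trained dense encoder.
VOCAB = ["paul", "graham", "risd", "viaweb", "yahoo", "painting", "new", "york"]


def bow_encode(text: str) -> Vector:
    """Toy stand-in for the encoder f: word counts over VOCAB."""
    tokens = text.lower().replace(",", " ").replace(".", " ").split()
    return [float(tokens.count(word)) for word in VOCAB]


def mean_vector(vectors: List[Vector]) -> Vector:
    """Element-wise average of equally sized vectors (step 3)."""
    return [sum(column) / len(vectors) for column in zip(*vectors)]


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def hyde_retrieve(
    query: str,
    generate: Callable[[str, int], List[str]],  # hypothetical LLM wrapper
    encode: Callable[[str], Vector],            # stand-in for the encoder f
    corpus: Dict[str, str],
    n_docs: int = 4,
    include_query: bool = True,
    top_k: int = 1,
) -> List[str]:
    """Return the ids of the top_k corpus documents closest to the HyDE vector v."""
    # Step 1: let the LLM write N hypothetical documents for the query.
    hypothetical_docs = generate(query, n_docs)
    # Step 2: encode each hypothetical document, giving f(d_1), ..., f(d_N).
    vectors = [encode(doc) for doc in hypothetical_docs]
    # Optionally treat the original query q as one more hypothesis.
    if include_query:
        vectors.append(encode(query))
    # Step 3: average the vectors into a single query vector v.
    v = mean_vector(vectors)
    # Step 4: rank the real documents by cosine similarity to v.
    ranked = sorted(
        corpus, key=lambda doc_id: cosine(v, encode(corpus[doc_id])), reverse=True
    )
    return ranked[:top_k]


if __name__ == "__main__":
    corpus = {
        "essay": "After RISD, Paul Graham started Viaweb, which Yahoo bought.",
        "school": "RISD is an art school.",
    }
    # Stub "LLM" that always writes the same hypothetical passage.
    fake_generate = lambda q, n: ["Paul Graham founded Viaweb, later sold to Yahoo."] * n
    print(hyde_retrieve("what did paul graham do after risd", fake_generate, bow_encode, corpus))
    # → ['essay']
```

Note that the real documents are compared against v, an average dominated by the hypothetical passages, rather than against the raw query vector alone.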

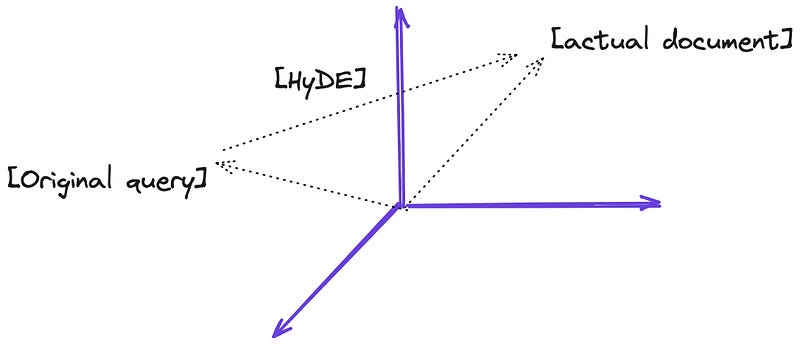

My understanding of HyDE is illustrated in Figure 3. The goal of HyDE is to generate hypothetical documents so that the final query vector v aligns as closely as possible with the actual document in the vector space.

HyDE is implemented in both LlamaIndex and LangChain. The following explanation uses LlamaIndex as an example.

Place this file in YOUR_DIR_PATH. The test code is as follows (the version of LlamaIndex I installed is 0.10.12):

```python
import os

os.environ["OPENAI_API_KEY"] = "YOUR_OPENAI_API_KEY"

from llama_index.core import VectorStoreIndex, SimpleDirectoryReader
from llama_index.core.indices.query.query_transform import HyDEQueryTransform
from llama_index.core.query_engine import TransformQueryEngine

# Load documents, build the VectorStoreIndex
dir_path = "YOUR_DIR_PATH"
documents = SimpleDirectoryReader(dir_path).load_data()
index = VectorStoreIndex.from_documents(documents)

query_str = "what did paul graham do after going to RISD"

# Query without transformation: The same query string is used for embedding lookup and also summarization.
query_engine = index.as_query_engine()
response = query_engine.query(query_str)

print('-' * 100)
print("Base query:")
print(response)

# Query with HyDE transformation
hyde = HyDEQueryTransform(include_original=True)
hyde_query_engine = TransformQueryEngine(query_engine, hyde)
response = hyde_query_engine.query(query_str)

print('-' * 100)
print("After HyDEQueryTransform:")
print(response)
```

First, take a look at the default HyDE prompt in LlamaIndex:

```python
from llama_index.core.prompts import PromptTemplate
from llama_index.core.prompts.prompt_type import PromptType

############################################
# HYDE
##############################################

HYDE_TMPL = (
    "Please write a passage to answer the question\n"
    "Try to include as many key details as possible.\n"
    "\n"
    "\n"
    "{context_str}\n"
    "\n"
    "\n"
    'Passage:"""\n'
)

DEFAULT_HYDE_PROMPT = PromptTemplate(HYDE_TMPL, prompt_type=PromptType.SUMMARY)
```

The code for class HyDEQueryTransform is as follows.

The `_run` method generates the hypothetical document. I added three debugging statements to `_run` to monitor the contents of the hypothetical document:

```python
class HyDEQueryTransform(BaseQueryTransform):
    """Hypothetical Document Embeddings (HyDE) query transform.

    It uses an LLM to generate hypothetical answer(s) to a given query,
    and use the resulting documents as embedding strings.

    As described in `[Precise Zero-Shot Dense Retrieval without Relevance Labels]
    (https://arxiv.org/abs/2212.10496)`
    """

    def __init__(
        self,
        llm: Optional[LLMPredictorType] = None,
        hyde_prompt: Optional[BasePromptTemplate] = None,
        include_original: bool = True,
    ) -> None:
        """Initialize HyDEQueryTransform.

        Args:
            llm_predictor (Optional[LLM]): LLM for generating
                hypothetical documents
            hyde_prompt (Optional[BasePromptTemplate]): Custom prompt for HyDE
            include_original (bool): Whether to include original query
                string as one of the embedding strings
        """
        super().__init__()

        self._llm = llm or Settings.llm
        self._hyde_prompt = hyde_prompt or DEFAULT_HYDE_PROMPT
        self._include_original = include_original

    def _get_prompts(self) -> PromptDictType:
        """Get prompts."""
        return {"hyde_prompt": self._hyde_prompt}

    def _update_prompts(self, prompts: PromptDictType) -> None:
        """Update prompts."""
        if "hyde_prompt" in prompts:
            self._hyde_prompt = prompts["hyde_prompt"]

    def _run(self, query_bundle: QueryBundle, metadata: Dict) -> QueryBundle:
        """Run query transform."""
        # TODO: support generating multiple hypothetical docs
        query_str = query_bundle.query_str
        hypothetical_doc = self._llm.predict(self._hyde_prompt, context_str=query_str)
        embedding_strs = [hypothetical_doc]
        if self._include_original:
            embedding_strs.extend(query_bundle.embedding_strs)

        # The following three lines contain the added debug statements.
        print('-' * 100)
        print("Hypothetical doc:")
        print(embedding_strs)

        return QueryBundle(
            query_str=query_str,
            custom_embedding_strs=embedding_strs,
        )
```

The test code operates as follows:

```
(llamaindex_010) Florian:~ Florian$ python /Users/Florian/Documents/test_hyde.py
----------------------------------------------------------------------------------------------------
Base query:
Paul Graham resumed his old life in New York after attending RISD. He became rich and continued his old patterns, but with new opportunities such as being able to easily hail taxis and dine at charming restaurants. He also started experimenting with a new kind of still life painting technique.
----------------------------------------------------------------------------------------------------
Hypothetical doc:
["After attending the Rhode Island School of Design (RISD), Paul Graham went on to co-found Viaweb, an online store builder that was later acquired by Yahoo for $49 million. Following the success of Viaweb, Graham became an influential figure in the tech industry, co-founding the startup accelerator Y Combinator in 2005. Y Combinator has since become one of the most prestigious and successful startup accelerators in the world, helping launch companies like Dropbox, Airbnb, and Reddit. Graham is also known for his prolific writing on technology, startups, and entrepreneurship, with his essays being widely read and respected in the tech community. Overall, Paul Graham's career after RISD has been marked by innovation, success, and a significant impact on the startup ecosystem.", 'what did paul graham do after going to RISD']
----------------------------------------------------------------------------------------------------
After HyDEQueryTransform:
After going to RISD, Paul Graham resumed his old life in New York, but now he was rich. He continued his old patterns but with new opportunities, such as being able to easily hail taxis and dine at charming restaurants. He also started to focus more on his painting, experimenting with a new technique. Additionally, he began looking for an apartment to buy and contemplated the idea of building a web app for making web apps, which eventually led him to start a new company called Aspra.
```

`embedding_strs` is a list containing two elements: the first is the generated hypothetical document, and the second is the original query. LlamaIndex embeds each string and, by default, averages the resulting vectors into a single query embedding, which corresponds to treating the original query as one more hypothesis in step 3, with a single hypothetical document.

In this example, HyDE significantly improves the output by plausibly imagining what Paul Graham did after RISD (see the hypothetical document). This improves the quality of the query embedding and, in turn, the final answer.
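To make the data flow inside `_run` concrete, here is a minimal pure-Python sketch of its string-level logic. It is not LlamaIndex code: `llm_complete` and `build_embedding_strs` are hypothetical names, and plain `str.format` substitutes for `PromptTemplate` formatting. It shows the exact prompt the LLM receives and how `include_original` changes `embedding_strs`.

```python
from typing import Callable, List

# Copy of the default LlamaIndex HyDE template shown earlier.
HYDE_TMPL = (
    "Please write a passage to answer the question\n"
    "Try to include as many key details as possible.\n"
    "\n"
    "\n"
    "{context_str}\n"
    "\n"
    "\n"
    'Passage:"""\n'
)


def build_embedding_strs(
    query_str: str,
    llm_complete: Callable[[str], str],  # hypothetical stand-in for the LLM call
    include_original: bool = True,
) -> List[str]:
    """Mirror the string-level logic of HyDEQueryTransform._run (sketch only)."""
    # Fill the template; str.format plays the role of PromptTemplate here.
    prompt = HYDE_TMPL.format(context_str=query_str)
    hypothetical_doc = llm_complete(prompt)
    embedding_strs = [hypothetical_doc]
    if include_original:
        # In LlamaIndex this extends with query_bundle.embedding_strs, which
        # for a plain query bundle is just [query_str].
        embedding_strs.append(query_str)
    return embedding_strs
```

Because `_run` calls the LLM only once, LlamaIndex's default behaves like the HyDE formula with a single hypothetical document (N = 1), plus the query when `include_original=True`.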

Naturally, HyDE also has some failure cases. Interested readers can test these out by visiting this webpage.

HyDE is unsupervised: no model is trained, and both the generative model and the contrastive encoder are used as-is.

In summary, HyDE introduces a new approach to query rewriting: rather than relying on query-to-document embedding similarity, it emphasizes the similarity between documents (hypothetical versus real). It does have limitations, however. If the language model is not well-versed in the topic, the hypothetical documents may mislead retrieval and increase errors rather than improve results.

Rewrite-Retrieve-Read

The idea comes from the paper “Query Rewriting for Retrieval-Augmented Large Language Models”, which argues that the original query, particularly in real-world scenarios, may not always be optimal for retrieval by an LLM.

As a result, the paper suggests first using an LLM to rewrite the query and only then performing retrieval and answer generation, rather than retrieving content and generating answers directly from the original query, as shown in Figure 4 (b).
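The difference between the two orders can be sketched with the NBA/LangChain query from the introduction. Everything here is a toy assumption, not the paper's code: `CORPUS` is a hypothetical two-document corpus, `keyword_retrieve` ranks by word overlap instead of using a real search engine, and the rewriter and reader are stubs standing in for LLMs. As in the framework, the reader still receives the original question alongside the retrieved documents.

```python
from typing import Callable, List

# Hypothetical two-document corpus, already lowercased and without punctuation.
CORPUS = [
    "the los angeles lakers won the 2020 nba championship",
    "langchain is a framework for building llm applications",
]


def keyword_retrieve(query: str, k: int = 1) -> List[str]:
    """Toy retriever: rank CORPUS documents by word overlap with the query."""
    words = set(query.lower().replace("?", " ").replace("!", " ").split())
    return sorted(CORPUS, key=lambda doc: len(words & set(doc.split())), reverse=True)[:k]


def retrieve_then_read(
    query: str,
    retrieve: Callable[[str], List[str]],
    read: Callable[[str, List[str]], str],
) -> str:
    """Traditional order: retrieve with the raw query, then read."""
    return read(query, retrieve(query))


def rewrite_retrieve_read(
    query: str,
    rewrite: Callable[[str], str],
    retrieve: Callable[[str], List[str]],
    read: Callable[[str, List[str]], str],
) -> str:
    """Rewrite the query first, retrieve with the rewrite, then read."""
    search_query = rewrite(query)  # step 1: rewrite
    docs = retrieve(search_query)  # step 2: retrieve with the rewritten query
    return read(query, docs)       # step 3: read (original question + docs)


if __name__ == "__main__":
    question = ("The NBA champion of 2020 is the Los Angeles Lakers! "
                "Tell me what is langchain framework?")
    # Stub reader: just return the top retrieved document.
    reader = lambda q, docs: docs[0]
    # Stub rewriter standing in for an LLM that isolates the actual question.
    rewriter = lambda q: "what is langchain framework"
    print(retrieve_then_read(question, keyword_retrieve, reader))
    # → the los angeles lakers won the 2020 nba championship
    print(rewrite_retrieve_read(question, rewriter, keyword_retrieve, reader))
    # → langchain is a framework for building llm applications
```

With the raw query, the distracting NBA sentence dominates the word overlap and the wrong document is retrieved; after rewriting, retrieval targets the actual question.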


Subscribe to AI Exploration Journey to keep reading this post and get 7 days of free access to the full post archives.