Advanced RAG 03: Using RAGAs + LlamaIndex for RAG evaluation

Including principles, diagrams and code

If you have developed a Retrieval Augmented Generation (RAG) application for a real business system, you are likely concerned about its effectiveness. In other words, you want to evaluate how well the RAG performs.

Furthermore, if you find that your existing RAG is not effective enough, you may need to verify the effectiveness of advanced RAG improvement methods. In other words, you need to conduct an evaluation to see if these improvement methods are effective.

In this article, we first introduce the evaluation metrics for RAG proposed by RAGAs (Retrieval Augmented Generation Assessment), a framework for evaluating RAG pipelines. Then, we explain how to implement the entire evaluation process using RAGAs + LlamaIndex.

Metrics of RAG evaluation

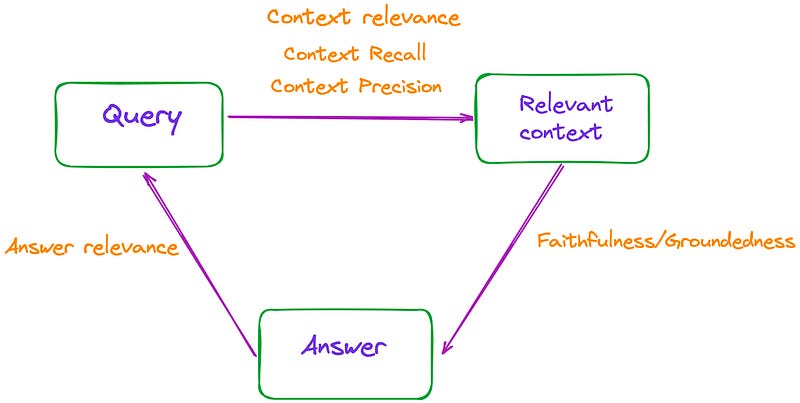

In simple terms, the process of RAG involves three main parts: the input query, the retrieved context, and the response generated by the LLM. These three elements form the most important triad in the RAG process and are interdependent.

Therefore, the effectiveness of RAG can be evaluated by measuring the relevance between the elements of this triad, as shown in Figure 1.

The RAGAs paper mentions a total of three metrics: Faithfulness, Answer Relevance, and Context Relevance. These metrics do not require access to human-annotated datasets or reference answers.

In addition, the RAGAs website introduces two more metrics: Context Precision and Context Recall.

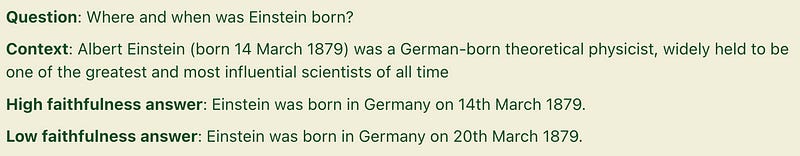

Faithfulness/Groundedness

Faithfulness refers to ensuring that the answer is based on the given context. This is important for avoiding hallucinations and ensuring that the retrieved context can be used as a justification for the generated answer.

If the score is low, it indicates that the LLM’s response does not adhere to the retrieved knowledge, and the likelihood of hallucinated answers increases. For example:

To estimate faithfulness, we first use an LLM to extract a set of statements, S(a(q)), from the answer. This is done with the following prompt:

Given a question and answer, create one or more statements from each sentence in the given answer.

question: [question]

answer: [answer]

After generating S(a(q)), the LLM determines whether each statement s_i can be inferred from c(q). This verification step is carried out using the following prompt:

Consider the given context and following statements, then determine whether they are supported by the information present in the context. Provide a brief explanation for each statement before arriving at the verdict (Yes/No). Provide a final verdict for each statement in order at the end in the given format. Do not deviate from the specified format.

statement: [statement 1]

...

statement: [statement n]

The final faithfulness score, F, is computed as F = |V| / |S|, where |V| represents the number of statements that were supported according to the LLM, and |S| represents the total number of statements.
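The scoring step can be sketched in a few lines of Python. This is a minimal illustration and not the RAGAs implementation: the function name `faithfulness_score` and the list of verdict strings are assumptions, standing in for the per-statement Yes/No verdicts parsed from the LLM output.

```python
def faithfulness_score(verdicts):
    """Return F = |V| / |S| given one "Yes"/"No" verdict per statement."""
    if not verdicts:
        # Assumption: with no statements there is nothing to support, so score 0.
        return 0.0
    supported = sum(1 for v in verdicts if v.strip().lower() == "yes")
    return supported / len(verdicts)


# Hypothetical verdicts for four statements extracted from one answer.
example_verdicts = ["Yes", "Yes", "No", "Yes"]
print(faithfulness_score(example_verdicts))  # 3 supported out of 4 statements
```

With three of four statements supported, the score is 0.75. A single unsupported statement already lowers the score noticeably when the answer is short.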

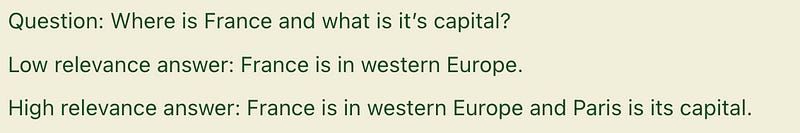

Answer Relevance

This metric measures the relevance between the generated answer and the query. A higher score indicates better relevance. For example:

To estimate the relevance of an answer, we prompt the LLM to generate n potential questions, q_i, based on the given answer a(q), as follows:

Generate a question for the given answer.

answer: [answer]

Then, we utilize a text embedding model to obtain embeddings for all the questions.

For each q_i, we calculate the similarity sim(q, q_i) with the original question q. This corresponds to the cosine similarity between the embeddings. The answer relevance score AR for question q is computed as follows:

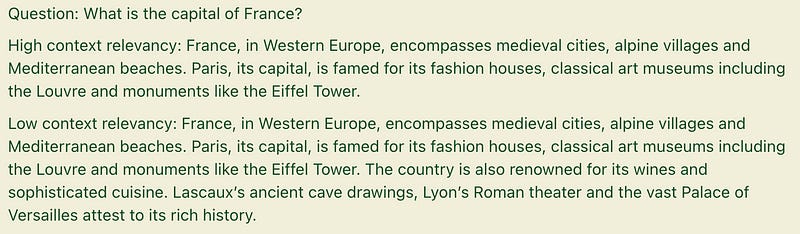
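As a rough sketch, assuming the score is the mean of the n similarities, AR = (1/n) Σ sim(q, q_i), as defined in the RAGAs paper, the computation looks like this. The helper names and the toy 2-dimensional vectors are hypothetical; a real pipeline would use embeddings from a text embedding model.

```python
import math


def cosine_similarity(u, v):
    """Cosine similarity between two equal-length, non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def answer_relevance(question_embedding, generated_embeddings):
    """Mean of sim(q, q_i) over the n generated questions."""
    sims = [cosine_similarity(question_embedding, e) for e in generated_embeddings]
    return sum(sims) / len(sims)


# Toy vectors standing in for real embeddings (hypothetical values).
q = [1.0, 0.0]
generated = [[1.0, 0.0], [0.0, 1.0]]
print(answer_relevance(q, generated))  # one identical and one orthogonal question
```

Here one generated question matches the original exactly (similarity 1) and the other is orthogonal to it (similarity 0), so the score is 0.5.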

Context Relevance

This is a metric for measuring retrieval quality, primarily evaluating the degree to which the retrieved context supports the query. A low score indicates that a significant amount of irrelevant content was retrieved, which may affect the final answer generated by the LLM. For example:

To estimate the relevance of the context, a set of key sentences (S_ext) is extracted from the context c(q) using an LLM. These sentences are crucial for answering the question. The prompt is as follows:

Please extract relevant sentences from the provided context that can potentially help answer the following question.

If no relevant sentences are found, or if you believe the question cannot be answered from the given context,

return the phrase "Insufficient Information".

While extracting candidate sentences you’re not allowed to make any changes to sentences from given context.

Then, in RAGAs, relevance is calculated at the sentence level using the following formula:
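As described in the RAGAs paper, the score is the number of extracted sentences divided by the total number of sentences in c(q). A minimal sketch of that ratio follows. The naive regex sentence splitter, the function names, and the toy context are assumptions made for illustration, not what RAGAs uses internally.

```python
import re


def split_sentences(text):
    """Naive splitter: break after ., ! or ? when followed by whitespace."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


def context_relevance(extracted_sentences, context):
    """Ratio of extracted sentences to all sentences in the context."""
    total = len(split_sentences(context))
    if total == 0:
        return 0.0
    return len(extracted_sentences) / total


# Hypothetical retrieved context: two useful sentences, two irrelevant ones.
context = (
    "TinyLlama is a compact 1.1B language model. "
    "The weather was pleasant that day. "
    "It builds on the architecture and tokenizer of Llama 2. "
    "Lunch was served at noon."
)
# Sentences an LLM might extract as relevant (hypothetical).
extracted = [
    "TinyLlama is a compact 1.1B language model.",
    "It builds on the architecture and tokenizer of Llama 2.",
]
print(context_relevance(extracted, context))  # 2 of 4 sentences are relevant
```

Half of the context is irrelevant to the question, so the score is 0.5. Retrieving larger chunks tends to push this ratio down even when the needed facts are present.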

Context Recall

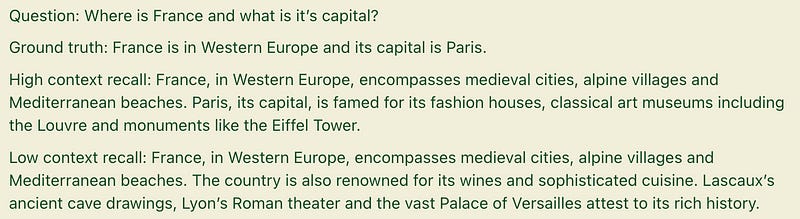

This metric measures the level of consistency between the retrieved context and the annotated answer. It is calculated using the ground truth and the retrieved context, with higher values indicating better performance. For example:

Computing this metric requires ground truth data.

The calculation formula is as follows:
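As a sketch, assuming the definition given in the RAGAs documentation, context recall can be written as the fraction of ground truth sentences that can be attributed to the retrieved context:

```latex
\text{context recall} =
  \frac{\left|\text{ground truth sentences that can be attributed to the context}\right|}
       {\left|\text{sentences in the ground truth}\right|}
```

For instance, if the annotated answer has three sentences and two of them are supported by the retrieved context, the score is 2/3 ≈ 0.67.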

Context Precision

This metric is relatively complex. It measures whether all the retrieved contexts that are relevant, meaning they contain true facts, are ranked at the top. A higher score indicates higher precision.

The calculation formula for this metric is as follows:

The advantage of Context Precision is its ability to perceive the ranking effect. However, its drawback is that if very few relevant contexts are retrieved, but they are all ranked highly, the score will also be high. Therefore, it is necessary to consider the overall effect by combining several other metrics.
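To make the ranking behavior concrete, here is a small sketch. It assumes the definition from the RAGAs documentation: Context Precision@K is the sum over ranks k of Precision@k × v_k, divided by the number of relevant items in the top K, where v_k is 1 if the item at rank k is relevant and 0 otherwise. The function name and the relevance lists are hypothetical.

```python
def context_precision_at_k(relevance):
    """relevance[i] is 1 if the chunk at rank i + 1 is relevant, else 0."""
    hits = 0
    weighted_sum = 0.0
    for rank, is_relevant in enumerate(relevance, start=1):
        if is_relevant:
            hits += 1
            # Precision@rank, added only at ranks holding a relevant chunk.
            weighted_sum += hits / rank
    return weighted_sum / hits if hits else 0.0


print(context_precision_at_k([1, 1, 0, 0]))  # relevant chunks ranked first
print(context_precision_at_k([0, 0, 1, 1]))  # the same chunks ranked last
print(context_precision_at_k([1, 0, 0, 0]))  # a single relevant chunk, ranked first
```

The first and third calls both return 1.0, even though the third retrieved only one relevant chunk. This is the drawback described above, and it is why the metric should be read together with Context Recall. The second call scores lower because the relevant chunks appear at ranks 3 and 4.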

Using RAGAs + LlamaIndex for RAG evaluation

The main process is shown in Figure 6:

Environment Configuration

Install ragas: pip install ragas. Then, check the current version.

(py) Florian:~ Florian$ pip list | grep ragas

ragas 0.0.22

It is worth mentioning that if you install the latest version (v0.1.0rc1) using pip install git+https://github.com/explodinggradients/ragas.git, there is no support for LlamaIndex.

Then, import the relevant libraries and set the environment and global variables:

import os

os.environ["OPENAI_API_KEY"] = "YOUR_OPENAI_KEY"

dir_path = "YOUR_DIR_PATH"

from llama_index import VectorStoreIndex, SimpleDirectoryReader

from ragas.metrics import (

faithfulness,

answer_relevancy,

context_relevancy,

context_recall,

context_precision

)

from ragas.llama_index import evaluate

There is only one PDF file in the directory: the paper “TinyLlama: An Open-Source Small Language Model”.

(py) Florian:~ Florian$ ls /Users/Florian/Downloads/pdf_test/

tinyllama.pdf

Using LlamaIndex to build a simple RAG query engine:

documents = SimpleDirectoryReader(dir_path).load_data()

index = VectorStoreIndex.from_documents(documents)

query_engine = index.as_query_engine()

By default, LlamaIndex uses the OpenAI model. The LLM and embedding model can be easily configured using ServiceContext.

Constructing an evaluation dataset

Since there are some metrics that require manually annotated datasets, I have written a few questions and their corresponding answers myself.

eval_questions = [

"Can you provide a concise description of the TinyLlama model?",

"I would like to know the speed optimizations that TinyLlama has made.",

"Why TinyLlama uses Grouped-query Attention?",

"Is the TinyLlama model open source?",

"Tell me about starcoderdata dataset",

]

eval_answers = [

"TinyLlama is a compact 1.1B language model pretrained on around 1 trillion tokens for approximately 3 epochs. Building on the architecture and tokenizer of Llama 2, TinyLlama leverages various advances contributed by the open-source community (e.g., FlashAttention), achieving better computational efficiency. Despite its relatively small size, TinyLlama demonstrates remarkable performance in a series of downstream tasks. It significantly outperforms existing open-source language models with comparable sizes.",

"During training, our codebase has integrated FSDP to leverage multi-GPU and multi-node setups efficiently. Another critical improvement is the integration of Flash Attention, an optimized attention mechanism. We have replaced the fused SwiGLU module from the xFormers (Lefaudeux et al., 2022) repository with the original SwiGLU module, further enhancing the efficiency of our codebase. With these features, we can reduce the memory footprint, enabling the 1.1B model to fit within 40GB of GPU RAM.",

"To reduce memory bandwidth overhead and speed up inference, we use grouped-query attention in our model. We have 32 heads for query attention and use 4 groups of key-value heads. With this technique, the model can share key and value representations across multiple heads without sacrificing much performance",

"Yes, TinyLlama is open-source",

"This dataset was collected to train StarCoder (Li et al., 2023), a powerful opensource large code language model. It comprises approximately 250 billion tokens across 86 programming languages. In addition to code, it also includes GitHub issues and text-code pairs that involve natural languages.",

]

eval_answers = [[a] for a in eval_answers]

Metrics Selection and RAGAs Evaluation

metrics = [

faithfulness,

answer_relevancy,

context_relevancy,

context_precision,

context_recall,

]

result = evaluate(query_engine, metrics, eval_questions, eval_answers)

result.to_pandas().to_csv('YOUR_CSV_PATH', sep=',')

Note that RAGAs uses the OpenAI model by default.

I have not found a way to use another LLM (such as Gemini) for evaluation with LlamaIndex in RAGAs version 0.0.22, even after debugging the RAGAs source code.

Final code

import os

os.environ["OPENAI_API_KEY"] = "YOUR_OPENAI_KEY"

dir_path = "YOUR_DIR_PATH"

from llama_index import VectorStoreIndex, SimpleDirectoryReader

from ragas.metrics import (

faithfulness,

answer_relevancy,

context_relevancy,

context_recall,

context_precision

)

from ragas.llama_index import evaluate

documents = SimpleDirectoryReader(dir_path).load_data()

index = VectorStoreIndex.from_documents(documents)

query_engine = index.as_query_engine()

eval_questions = [

"Can you provide a concise description of the TinyLlama model?",

"I would like to know the speed optimizations that TinyLlama has made.",

"Why TinyLlama uses Grouped-query Attention?",

"Is the TinyLlama model open source?",

"Tell me about starcoderdata dataset",

]

eval_answers = [

"TinyLlama is a compact 1.1B language model pretrained on around 1 trillion tokens for approximately 3 epochs. Building on the architecture and tokenizer of Llama 2, TinyLlama leverages various advances contributed by the open-source community (e.g., FlashAttention), achieving better computational efficiency. Despite its relatively small size, TinyLlama demonstrates remarkable performance in a series of downstream tasks. It significantly outperforms existing open-source language models with comparable sizes.",

"During training, our codebase has integrated FSDP to leverage multi-GPU and multi-node setups efficiently. Another critical improvement is the integration of Flash Attention, an optimized attention mechanism. We have replaced the fused SwiGLU module from the xFormers (Lefaudeux et al., 2022) repository with the original SwiGLU module, further enhancing the efficiency of our codebase. With these features, we can reduce the memory footprint, enabling the 1.1B model to fit within 40GB of GPU RAM.",

"To reduce memory bandwidth overhead and speed up inference, we use grouped-query attention in our model. We have 32 heads for query attention and use 4 groups of key-value heads. With this technique, the model can share key and value representations across multiple heads without sacrificing much performance",

"Yes, TinyLlama is open-source",

"This dataset was collected to train StarCoder (Li et al., 2023), a powerful opensource large code language model. It comprises approximately 250 billion tokens across 86 programming languages. In addition to code, it also includes GitHub issues and text-code pairs that involve natural languages.",

]

eval_answers = [[a] for a in eval_answers]

metrics = [

faithfulness,

answer_relevancy,

context_relevancy,

context_precision,

context_recall,

]

result = evaluate(query_engine, metrics, eval_questions, eval_answers)

result.to_pandas().to_csv('YOUR_CSV_PATH', sep=',')

Note that when running the program in the terminal, the pandas dataframe may not be displayed completely. To view it, you can export it as a CSV file, as shown in Figure 6.

From Figure 6, it is apparent that the last question (index 4), “Tell me about starcoderdata dataset,” has all 0s. This is because the LLM was unable to provide an answer. The second and third questions have a context precision of 0, indicating that the relevant contexts among the retrieved contexts were not ranked at the top. The context recall for the second question is 0, indicating that the retrieved contexts do not match the annotated answer.

Now, let’s examine questions 0 to 3. The Answer Relevance scores for these questions are high, indicating a strong correlation between the answers and the questions. Additionally, the Faithfulness scores are not low, which suggests that the answers are primarily derived or summarized from the context. It can be concluded that these answers are not the result of hallucination by the LLM.

Furthermore, we discovered that despite the low Context Relevance scores, gpt-3.5-turbo-16k (RAGAs’s default model) is still capable of deducing the answers from the retrieved context.

Based on the results, it is evident that this basic RAG system still has significant room for improvement.

Conclusion

In general, RAGAs provides comprehensive metrics for evaluating RAG and is convenient to invoke. RAG evaluation frameworks are currently scarce, and RAGAs provides an effective tool.

Upon debugging the internal source code of RAGAs, it becomes evident that RAGAs is still in its early development stage. We are optimistic about its future updates and improvements.

Additionally, if you’re interested in RAG, feel free to check out my other articles.

Finally, if you have any questions about this article, please indicate them in the comments section.