A New Approach to Optimizing Query Generation in RAG

In the fast-evolving field of natural language processing, large language models (LLMs) have demonstrated impressive performance across a wide range of language tasks. However, their tendency to generate incorrect information, known as "hallucination," has become an increasingly critical problem.

To address this challenge, Retrieval-Augmented Generation (RAG) systems leverage document retrieval to provide more accurate answers. Despite their promise, existing RAG systems still face challenges due to vague queries.

This article introduces a new study titled "Optimizing Query Generation for Enhanced Document Retrieval in RAG". The study aims to improve document retrieval in RAG by optimizing query generation.

Solution

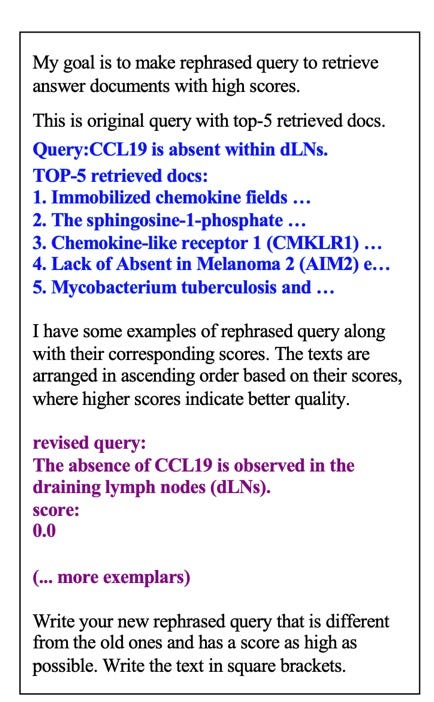

The study proposes a method called QOQA (Query Optimization using Query Expansion), illustrated in Figure 1.

The corresponding prompt is shown in Figure 2.

The QOQA method optimizes query generation through the following steps, thereby improving the document retrieval accuracy in RAG systems.

Query Expansion and Reconstruction

The core idea of QOQA is to utilize large language models (LLMs) to rephrase queries. First, the system retrieves the top N relevant documents using the original query. These retrieved documents, together with the original query, form an expanded query. Then, LLMs generate rephrased queries based on these expanded queries.
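The expansion step described above can be sketched as follows. This is a minimal illustration, not the study's implementation: the term-overlap retriever, the prompt wording, and the names `top_n_documents`, `build_expanded_query`, and `rephrase` are all assumptions, and `llm` stands in for any function that maps a prompt string to generated text.

```python
from typing import Callable, List


def top_n_documents(query: str, corpus: List[str], n: int = 3) -> List[str]:
    """Toy retriever (assumption): rank documents by shared lowercase terms."""
    q_terms = set(query.lower().split())
    ranked = sorted(
        corpus,
        key=lambda doc: len(q_terms & set(doc.lower().split())),
        reverse=True,
    )
    return ranked[:n]


def build_expanded_query(query: str, docs: List[str]) -> str:
    """Combine the original query and the retrieved documents into one prompt."""
    lines = ["Original query: " + query, "Retrieved documents:"]
    lines += [f"[{i}] {doc}" for i, doc in enumerate(docs, start=1)]
    # Hypothetical instruction text; the actual prompt is the one in Figure 2.
    lines.append("Rephrase the original query so it matches these documents more precisely.")
    return "\n".join(lines)


def rephrase(query: str, corpus: List[str], llm: Callable[[str], str], n: int = 3) -> str:
    """Retrieve the top-n documents, expand the query, and ask the LLM to rephrase it."""
    docs = top_n_documents(query, corpus, n)
    return llm(build_expanded_query(query, docs))
```

In practice the toy retriever would be replaced by a real sparse or dense retriever, and `llm` by a call to whichever model is in use.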

Query-Document Alignment Score

To evaluate and optimize the generated queries, the researchers introduced a query-document alignment score. This scoring system includes three evaluation criteria: BM25 score, dense score, and hybrid score.

BM25 Score: Based on a sparse retrieval model, it evaluates the frequency and weight of query terms in the document.

Dense Score: Using a dense retrieval model, it evaluates the alignment between queries and documents through similarity in the embedding vector space.

Hybrid Score: Combines both BM25 and dense scores, optimizing the final score by adjusting the parameter α.
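To make the three scores concrete, here is a small self-contained sketch. Several things in it are assumptions rather than details taken from the study: the BM25 variant and its `k1` and `b` defaults, the use of cosine similarity for the dense score, the weighted-sum form `alpha * bm25 + dense` for the hybrid score, and the default value of `alpha`. The embedding vectors are passed in directly, since the choice of embedding model is not specified here.

```python
import math
from collections import Counter
from typing import List, Sequence


def bm25_score(query: str, doc: str, corpus: List[str],
               k1: float = 1.5, b: float = 0.75) -> float:
    """Sparse score: a common BM25 variant over whitespace tokens (assumption)."""
    tokenized = [d.lower().split() for d in corpus]
    avg_len = sum(len(t) for t in tokenized) / len(tokenized)
    doc_terms = doc.lower().split()
    tf = Counter(doc_terms)
    score = 0.0
    for term in set(query.lower().split()):
        df = sum(1 for t in tokenized if term in t)  # document frequency
        idf = math.log((len(tokenized) - df + 0.5) / (df + 0.5) + 1.0)
        freq = tf[term]
        length_norm = 1 - b + b * len(doc_terms) / avg_len
        score += idf * freq * (k1 + 1) / (freq + k1 * length_norm)
    return score


def dense_score(query_vec: Sequence[float], doc_vec: Sequence[float]) -> float:
    """Dense score: cosine similarity between two embedding vectors (assumption)."""
    dot = sum(a * b for a, b in zip(query_vec, doc_vec))
    norm = math.sqrt(sum(a * a for a in query_vec)) * math.sqrt(sum(b * b for b in doc_vec))
    return dot / norm if norm else 0.0


def hybrid_score(bm25: float, dense: float, alpha: float = 0.1) -> float:
    """Hybrid score: weighted sum controlled by alpha (assumed form and default)."""
    return alpha * bm25 + dense
```

Because BM25 scores are unbounded while cosine similarity lies in [-1, 1], `alpha` mainly serves to bring the two onto a comparable scale; a suitable value would need to be tuned per dataset.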

Optimization Process

During optimization, QOQA updates a prompt template that contains the original query, the retrieved documents, and the top K rephrased queries together with their alignment scores. In each iteration, the LLM generates a new rephrased query informed by these scores, and the new query is added to the query bucket. Over multiple iterations, the system refines the queries with the aim of outperforming the original query.
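The iterative loop can be sketched roughly as below. This is an illustrative reading of the process, not the study's code: the prompt wording, the function names, the choice to seed the bucket with the original query, and the use of a mean alignment score over the retrieved documents are assumptions. `llm` is any prompt-to-text function and `align` is any query-document scoring function, such as a BM25, dense, or hybrid score.

```python
from typing import Callable, List, Tuple

Scored = Tuple[float, str]  # (alignment score, query)


def mean_alignment(query: str, docs: List[str],
                   align: Callable[[str, str], float]) -> float:
    """Average the query-document alignment score over the retrieved documents."""
    return sum(align(query, d) for d in docs) / len(docs)


def build_prompt(original_query: str, docs: List[str], scored: List[Scored]) -> str:
    """Fill a hypothetical template with the query, documents, and scored rewrites."""
    lines = ["Original query: " + original_query, "Retrieved documents:"]
    lines += ["- " + d for d in docs]
    lines.append("Previous queries with scores:")
    lines += [f"{s:.3f}: {q}" for s, q in scored]
    lines.append("Write a new query that scores higher.")
    return "\n".join(lines)


def optimize_query(original_query: str, docs: List[str],
                   llm: Callable[[str], str],
                   align: Callable[[str, str], float],
                   iterations: int = 3, top_k: int = 3) -> str:
    """Iteratively ask the LLM for better queries and return the best one found."""
    # Assumption: the bucket starts with the original query as a baseline.
    bucket: List[Scored] = [(mean_alignment(original_query, docs, align), original_query)]
    for _ in range(iterations):
        top = sorted(bucket, key=lambda p: p[0], reverse=True)[:top_k]
        candidate = llm(build_prompt(original_query, docs, top))
        if all(candidate != q for _, q in bucket):  # skip duplicates
            bucket.append((mean_alignment(candidate, docs, align), candidate))
    # Ties resolve to the earliest entry, so the original wins unless beaten.
    return max(bucket, key=lambda p: p[0])[1]
```

Seeding the bucket with the original query means the function never returns a rewrite that scores below the starting point under the chosen `align` function.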

Evaluation

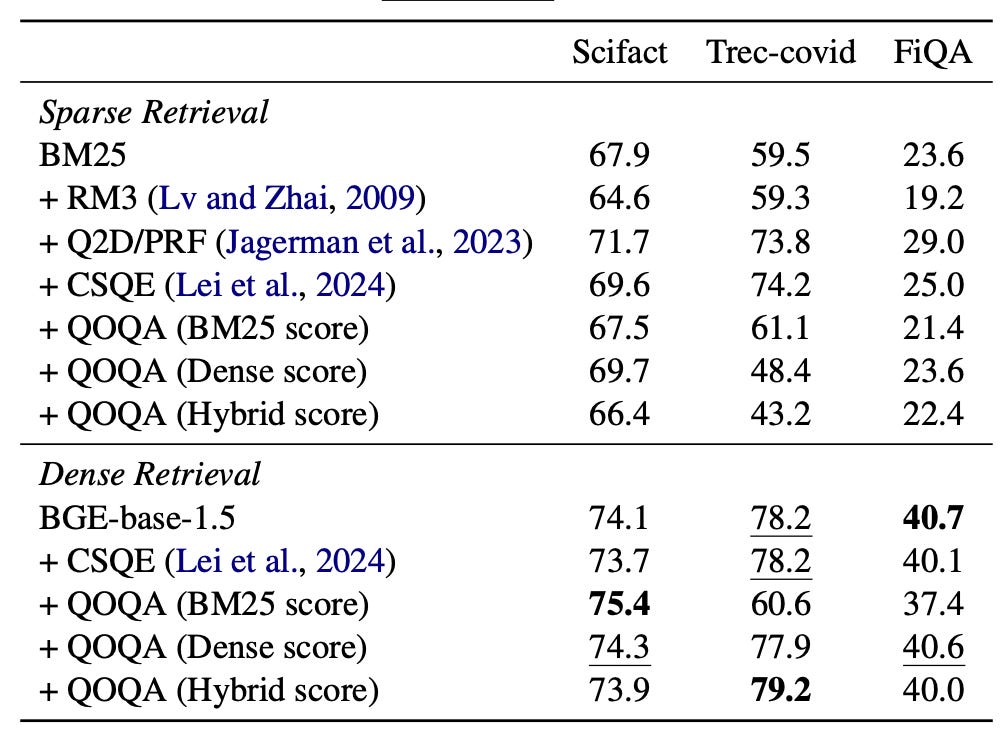

The experimental results showed that the QOQA method significantly improved document retrieval performance compared to baseline models. The performance of various document retrieval models across the evaluation datasets is summarized in Figure 3.

Additionally, case analyses demonstrated that queries generated using QOQA were more precise and concrete than the original queries, leading to the retrieval of more relevant documents. Examples from the SciFact and FiQA datasets are presented in Figure 4.

Conclusion

This article introduces a method to mitigate the hallucination problem in Retrieval-Augmented Generation (RAG) systems by optimizing query generation.

Using a top-k averaged query-document alignment score, QOQA refines queries with LLMs to improve the precision and computational efficiency of document retrieval. The study highlights the importance of precise query generation in enhancing the reliability and effectiveness of RAG systems.