A Brief Introduction to Adaptive-RAG

This article first outlines the overall process of Adaptive-RAG and then details how its query-complexity classifier is constructed.

Overall Process

Adaptive-RAG introduces a new adaptive framework that dynamically chooses the most appropriate strategy for the LLM, ranging from the simplest to the most complex, based on the complexity of the query.

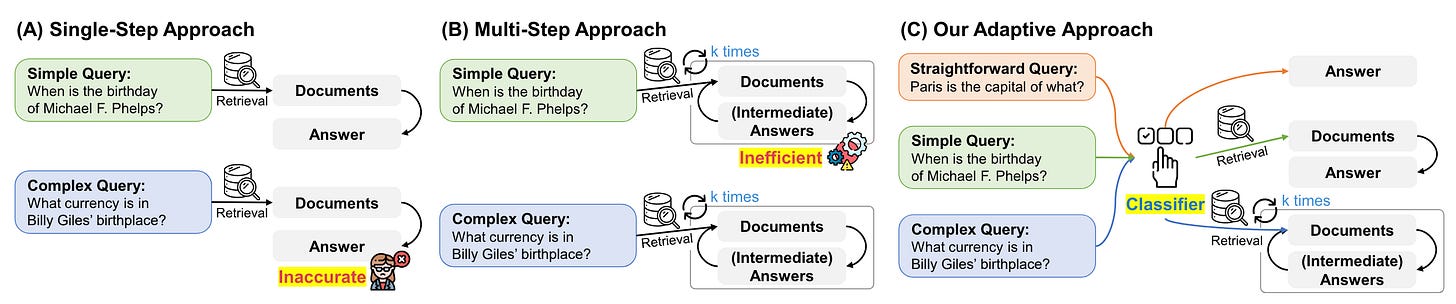

(A) represents a single-step approach: the relevant documents are retrieved first, and the answer is then generated from them. However, this method may not be accurate enough for complex queries that require multi-step reasoning.

(B) represents a multi-step process that alternates between retrieving documents and generating intermediate responses. Although effective, it is inefficient for simple queries because it requires multiple calls to both the LLM and the retriever.
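
To make the cost difference between (A) and (B) concrete, here is a minimal sketch that counts retriever and LLM calls. The `retrieve` and `llm` functions, the toy `DOCS` corpus, and the fixed `max_steps` budget are hypothetical stand-ins, not Adaptive-RAG's implementation.

```python
from dataclasses import dataclass


@dataclass
class CallCounter:
    """Counts how often the (hypothetical) retriever and LLM are invoked."""
    retriever_calls: int = 0
    llm_calls: int = 0


# Hypothetical toy corpus standing in for a real document index.
DOCS = {
    "paris": "Paris is the capital of France.",
    "france": "France is a country in Western Europe.",
}


def retrieve(query: str, counter: CallCounter) -> list[str]:
    """Toy retriever: returns every document whose key appears in the query."""
    counter.retriever_calls += 1
    return [text for key, text in DOCS.items() if key in query.lower()]


def llm(prompt: str, counter: CallCounter) -> str:
    """Toy LLM stand-in: simply echoes the last line of the prompt."""
    counter.llm_calls += 1
    return prompt.splitlines()[-1]


def single_step(query: str, counter: CallCounter) -> str:
    # (A): one retrieval, then one generation.
    docs = retrieve(query, counter)
    return llm("\n".join(docs + [query]), counter)


def multi_step(query: str, counter: CallCounter, max_steps: int = 3) -> str:
    # (B): alternate retrieval and intermediate generation for a fixed
    # number of steps, feeding each intermediate answer into the next query.
    context: list[str] = []
    current = query
    for _ in range(max_steps):
        context.extend(retrieve(current, counter))
        intermediate = llm("\n".join(context + [current]), counter)
        current = f"{query} {intermediate}"
    # Final answer generated from everything gathered so far.
    return llm("\n".join(context + [query]), counter)


if __name__ == "__main__":
    a, b = CallCounter(), CallCounter()
    single_step("What is the capital of France?", a)
    multi_step("What is the capital of France?", b)
    print(a)  # CallCounter(retriever_calls=1, llm_calls=1)
    print(b)  # CallCounter(retriever_calls=3, llm_calls=4)
```

A real multi-step method would typically stop once it judges it has gathered enough information instead of using a fixed step budget; the fixed budget here only makes the call counts easy to compare.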

(C) is an adaptive approach that uses a carefully constructed classifier to determine query complexity. Based on this prediction, it selects the most suitable strategy for the LLM: iterative retrieval, single-step retrieval, or no retrieval at all.
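
The routing step in (C) can be sketched as a lookup from a predicted complexity label to a strategy, assuming the classifier outputs the labels 'A', 'B', and 'C' described later in this article. The three strategy functions and the keyword-based `classify` heuristic are hypothetical placeholders; in Adaptive-RAG the label comes from a trained classifier.

```python
from typing import Callable


# Hypothetical strategy stubs; a real system would call an LLM and a retriever.
def no_retrieval(query: str) -> str:
    return f"[LLM only] {query}"


def single_step_retrieval(query: str) -> str:
    return f"[retrieve once, then generate] {query}"


def multi_step_retrieval(query: str) -> str:
    return f"[iterative retrieve-and-reason] {query}"


# Complexity label -> answering strategy, from cheapest to most expensive.
STRATEGIES: dict[str, Callable[[str], str]] = {
    "A": no_retrieval,
    "B": single_step_retrieval,
    "C": multi_step_retrieval,
}


def classify(query: str) -> str:
    """Placeholder for the trained complexity classifier (hypothetical heuristic)."""
    words = query.lower().split()
    if "and" in words or "whose" in words:
        return "C"
    if len(words) > 6:
        return "B"
    return "A"


def adaptive_answer(query: str) -> str:
    # Predict complexity first, then dispatch to the matching strategy.
    label = classify(query)
    return STRATEGIES[label](query)
```

Keeping the strategies in a table keyed by label means swapping the placeholder heuristic for a real classifier changes only `classify`, not the dispatch logic.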

Construction of the Classifier

Construction of the Dataset

A key challenge is the lack of an annotated dataset of query-complexity pairs.

How can this be addressed? Adaptive-RAG uses two strategies to construct the training dataset automatically.

The dataset released with Adaptive-RAG shows that the classifier's training labels are derived from publicly available, labeled QA datasets.

There are two strategies:

First, each collected question is answered with all three methods. If the simplest, non-retrieval method generates the correct answer, the query is labeled 'A'. Likewise, queries answered correctly by the single-step method are labeled 'B', and those answered correctly by the multi-step method are labeled 'C'. It is worth mentioning that simpler methods are given higher priority. For example, if both the single-step and multi-step methods produce the correct answer but the non-retrieval method fails, the query is labeled 'B', as illustrated in Figure 2.
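
The priority rule amounts to "label with the simplest method that succeeds." A minimal sketch, assuming we already know for each query whether each method's answer matched the gold answer; the function name `label_by_correctness` and the strategy keys are hypothetical. It returns `None` when every method fails, leaving the query for the dataset-based fallback.

```python
from typing import Optional

# Strategies ordered from simplest to most complex, paired with their labels.
PRIORITY = [("no_retrieval", "A"), ("single_step", "B"), ("multi_step", "C")]


def label_by_correctness(correct: dict[str, bool]) -> Optional[str]:
    """Return the label of the simplest strategy that answered correctly.

    `correct` maps each strategy name to whether its answer matched the
    gold answer from the labeled QA dataset.
    """
    for strategy, label in PRIORITY:
        if correct.get(strategy, False):
            return label
    return None  # every strategy failed: the query is still unlabeled


# The case described above: no-retrieval fails, both retrieval methods succeed.
print(label_by_correctness(
    {"no_retrieval": False, "single_step": True, "multi_step": True}
))  # B
```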

Second, if all three methods fail to generate the correct answer, the query remains unlabeled. In this case, the label is assigned directly from the public dataset the query comes from: queries from single-hop datasets are labeled 'B', and queries from multi-hop datasets are labeled 'C'.
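
This fallback can be sketched as follows, assuming each query records the name of the public dataset it came from. The dataset names in `SINGLE_HOP` and `MULTI_HOP` are illustrative examples of well-known single-hop and multi-hop QA benchmarks, not a claim about exactly which datasets Adaptive-RAG uses; `fallback_label` and `final_label` are hypothetical names.

```python
from typing import Optional

# Illustrative dataset groupings (assumption): single-hop vs multi-hop QA benchmarks.
SINGLE_HOP = {"squad", "natural_questions", "trivia_qa"}
MULTI_HOP = {"hotpotqa", "musique", "2wikimultihopqa"}


def fallback_label(source_dataset: str) -> str:
    """Label an otherwise-unlabeled query by the type of dataset it came from."""
    name = source_dataset.lower()
    if name in SINGLE_HOP:
        return "B"
    if name in MULTI_HOP:
        return "C"
    raise ValueError(f"unknown dataset: {source_dataset}")


def final_label(correctness_label: Optional[str], source_dataset: str) -> str:
    """Prefer the correctness-based label; use the dataset type only if it is missing."""
    if correctness_label is not None:
        return correctness_label
    return fallback_label(source_dataset)
```

For example, `final_label(None, "HotpotQA")` returns `'C'`, while a query already labeled `'A'` by the correctness check keeps that label regardless of its source dataset.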

Training Method

The classifier is trained with a cross-entropy loss on these automatically collected query-complexity pairs.
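
To illustrate what training with cross-entropy on query-complexity pairs means, here is a deliberately tiny stand-in: a multinomial logistic-regression classifier over bag-of-words features, trained with per-example gradient descent in pure Python. The features, the toy `PAIRS`, the learning rate, and the epoch count are all assumptions for illustration; a real complexity classifier would be a much larger neural model, but the objective being minimized is the same kind of loss.

```python
import math
from collections import Counter

LABELS = ["A", "B", "C"]
Weights = list[dict[str, float]]  # one sparse weight vector per label


def featurize(query: str) -> Counter:
    """Bag-of-words counts (a toy stand-in for a neural text encoder)."""
    return Counter(query.lower().split())


def softmax(scores: list[float]) -> list[float]:
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def predict_proba(weights: Weights, feats: Counter) -> list[float]:
    scores = [
        sum(w.get(tok, 0.0) * cnt for tok, cnt in feats.items()) for w in weights
    ]
    return softmax(scores)


def cross_entropy(weights: Weights, pairs: list[tuple[str, str]]) -> float:
    """Mean of -log p(gold label | query) over the dataset."""
    total = 0.0
    for query, label in pairs:
        probs = predict_proba(weights, featurize(query))
        total -= math.log(probs[LABELS.index(label)])
    return total / len(pairs)


def train(pairs: list[tuple[str, str]], epochs: int = 200, lr: float = 0.1) -> Weights:
    """Minimize cross-entropy with per-example (stochastic) gradient descent."""
    weights: Weights = [{} for _ in LABELS]
    data = [(featurize(q), LABELS.index(y)) for q, y in pairs]
    for _ in range(epochs):
        for feats, y in data:
            probs = predict_proba(weights, feats)
            for k, w in enumerate(weights):
                # d(-log p_y)/d(score_k) = p_k - 1[k == y]
                grad = probs[k] - (1.0 if k == y else 0.0)
                for tok, cnt in feats.items():
                    w[tok] = w.get(tok, 0.0) - lr * grad * cnt
    return weights


def predict(weights: Weights, query: str) -> str:
    probs = predict_proba(weights, featurize(query))
    return LABELS[probs.index(max(probs))]


# Hypothetical toy query-complexity pairs, for illustration only.
PAIRS = [
    ("what is the capital of france", "A"),
    ("who wrote hamlet", "A"),
    ("when did the 2016 olympic games start in rio", "B"),
    ("what year was the stadium in the documentary opened", "B"),
    ("which river flows through the city where the louvre is located", "C"),
    ("who is the spouse of the director of the film inception", "C"),
]

if __name__ == "__main__":
    weights = train(PAIRS)
    # Should be well below log(3), the loss of the all-zero initial weights.
    print(cross_entropy(weights, PAIRS))
```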

Selection of Classifier Size

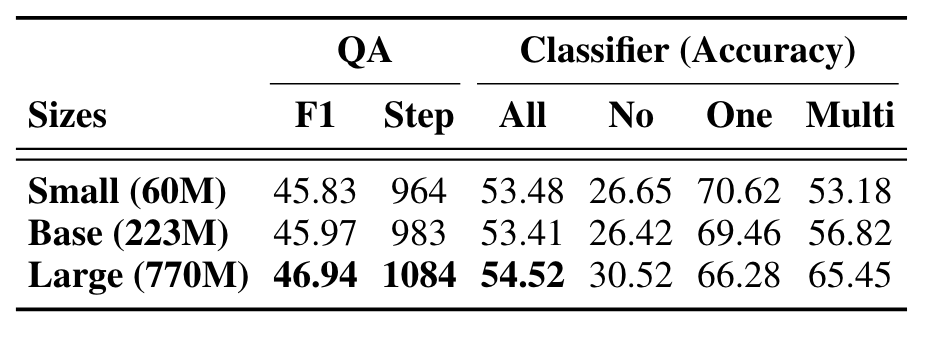

As illustrated in Figure 3, classifiers of different sizes show no significant difference in performance. A smaller classifier therefore does not hurt performance, which improves resource efficiency.